Dear NVIDIANS and Stakeholders, Accelerated computing is the best way to build sustainable data centers. When the cost of a fundamental resource, like computing, improves by orders of magnitude, new methods are invented, and new utilities are discovered. /NVIDIA Corporation/

🟩 SP5 > Nvidia > Latest > NVDA 0.00%↑

☑️ #100 Jun 7, 2024

Accelerated computing is the best way to build sustainable data centers

@The_AI_Investor: $NVDA's platform is sustainable computing. Most of us don't appreciate the magnitude of this. When the cost of a fundamental resource improves by orders of magnitude, new methods are invented and new utilities are discovered

🙂

☑️ #99 Jun 5, 2024

Over the past 32 trading days, NVDA has gained more than $1 trillion in market cap

@jessefelder: Over the past 32 trading days, NVDA has gained more than $1 trillion in market cap. To put that into some sort of perspective, the 6-week gain is greater than the total market cap of BRKA, which Warren Buffett has spent 6 decades in building.

🙂

☑️ #98 Jun 5, 2024

Photo Book

@PeterBerezinBCA: Prediction: This photo will be remembered as marking the top in Nvidia’s stock.

🙂

☑️ #97 Jun 4, 2024

This is wild

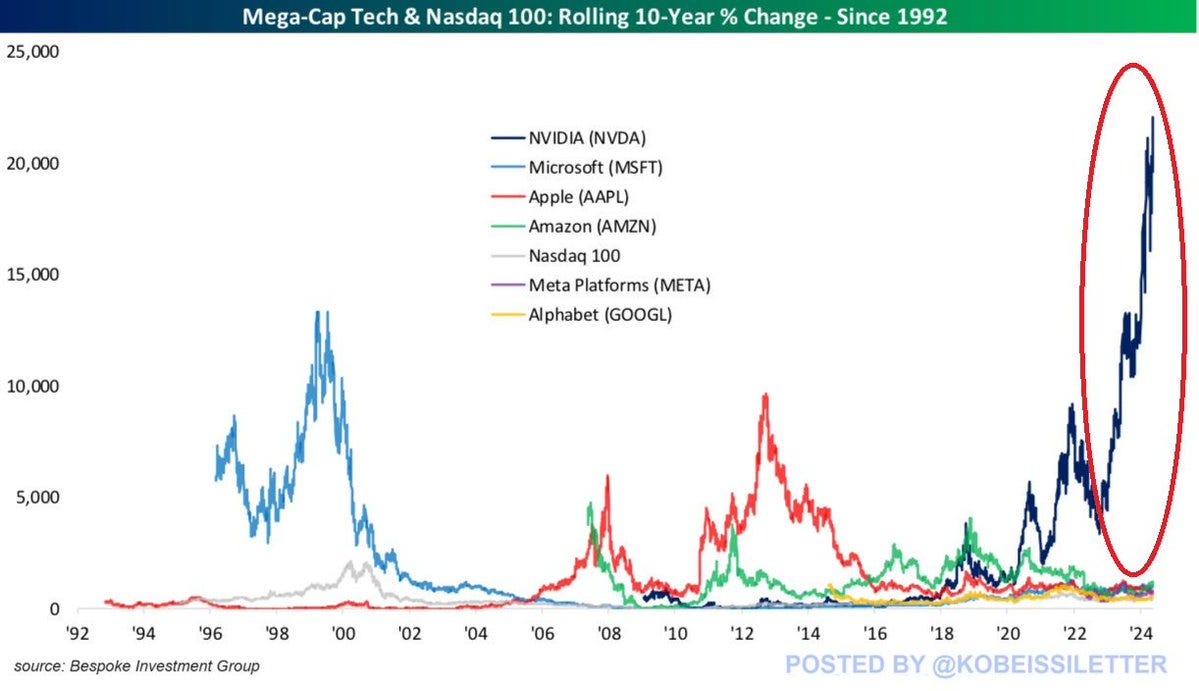

@KobeissiLetter: This is wild: Nvidia, $NVDA, has seen a massive +22,080% gain over the last 10 years. Historically speaking, no other mega-cap technology company has ever had such a large run. The 2nd largest big tech rally was recorded by Microsoft, $MSFT, during the 2000 Dot-com bubble when its shares skyrocketed +13,300%. By comparison, Apple and Amazon's best 10-year gains were +9,650% and +4,730%, respectively. Nvidia has now accounted for ~43% of the S&P 500's year-to-date market cap gain. How high can Nvidia go?
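To put a ten-year gain of this size on an annual footing, it can be converted to a compound annual growth rate. The sketch below is only illustrative arithmetic on the +22,080% figure quoted above. The function name is hypothetical, and the calculation assumes the gain is measured over exactly 10 years.

```python
def compound_annual_growth_rate(total_gain_pct: float, years: float) -> float:
    """Convert a cumulative percentage gain into an equivalent yearly rate."""
    # A gain of +22,080% means the end value is 221.8x the starting value.
    growth_multiple = 1 + total_gain_pct / 100
    return growth_multiple ** (1 / years) - 1


# Assumption: the quoted +22,080% gain spans exactly 10 years.
nvda_cagr = compound_annual_growth_rate(22_080, 10)
print(f"NVDA implied annual growth: {nvda_cagr:.1%}")
```

Under that assumption the implied rate is a little under 72% per year, compounded for a decade.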

🙂

☑️ #96 Jun 4, 2024

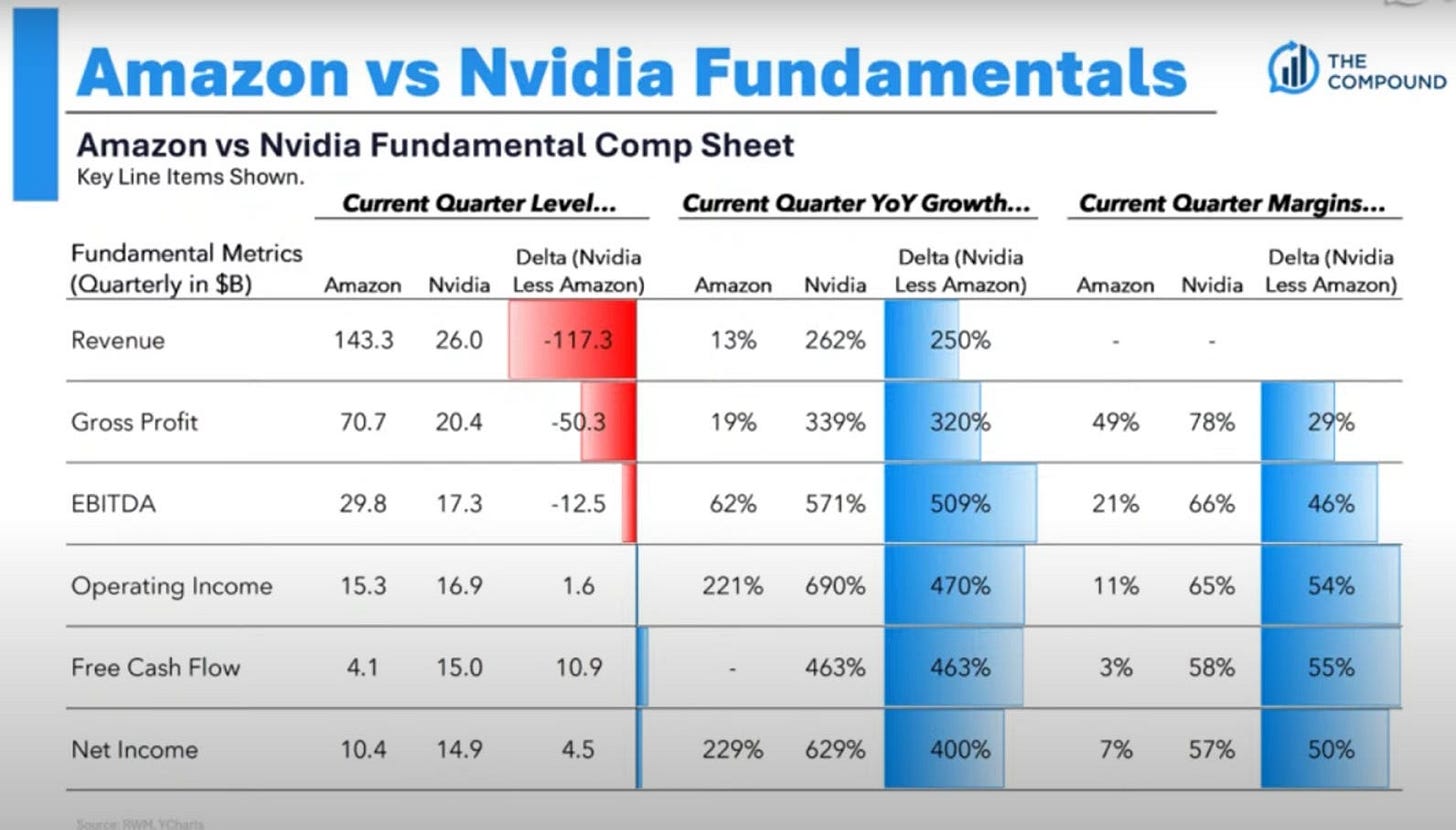

Numbers don’t lie

@simplyfinance: The next time someone says NVDA shouldn’t be worth more than AMZN you can show them this chart.

Numbers don’t lie

🙂

☑️ #95 Jun 3, 2024

NVIDIA—Our Story, Built on Taiwan

@NVIDIA: NVIDIA pays homage to our ecosystem of partners in Taiwan who, over the past 30 years, have helped us ignite the accelerated computing revolution and the next era of AI. Thank you, Taiwan.

🙂

☑️ #94 Jun 2, 2024

Good look at NVDA

@thexcapitalist: Good look at $NVDA by Kroker Equity Research!

🙂

☑️ #93 May 30, 2024

18 months since ChatGPT

@dailychartbook: "When ChatGPT launched, the Santa Clara-based chipmaker was worth more than $2 trillion less than Apple. Now, only 18 months later, it has almost closed that gap."

- John Authers

🙂

☑️ #92 May 29, 2024

Probably one of the most powerful demonstrations of a GPU vs. a CPU

@amritaroy: Probably one of the most powerful demonstrations of a GPU vs. a CPU. Check out our latest post, where we dive into Nvidia as it embarks on leading the way for accelerated computing in the fourth industrial revolution.

🙂

☑️ #91 May 28, 2024

$3T

@thecreditstrategist: $NVDA approaching $3tn market cap & 70x earnings which is reflection of AI bubble. Investors may make money buying the stock now but the valuation is stretched & they are counting on AI hype that has a long way to go to prove itself. Lot of things have to go right for company to maintain this growth rate.

🙂

☑️ #90 May 25, 2024

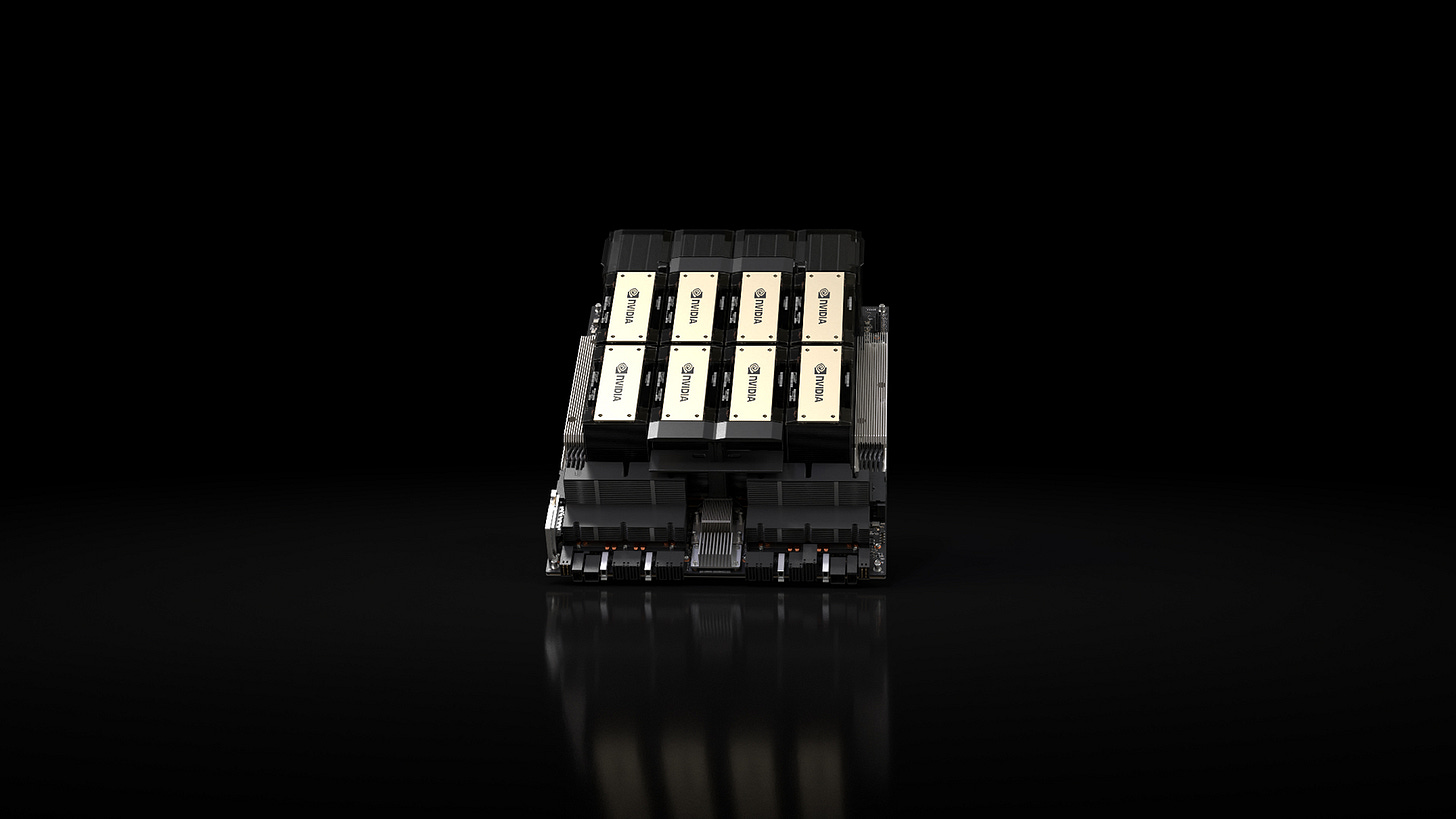

Gigafactory of Compute: X.AI Corp.’s supercomputer made up of Nvidia's flagship H100 GPUs

theinformation.com: [Excerpt] In a May presentation to investors, Musk said he wants to get the supercomputer running by the fall of 2025 and will hold himself personally responsible for delivering it on time. When completed, the connected groups of chips—Nvidia’s flagship H100 graphics processing units—would be at least four times the size of the biggest GPU clusters that exist today, such as those built by Meta Platforms to train its AI models, he told investors.

🔹Related content:

@paulbram: H100 is not flagship anymore.

@RemyPrice: I think for exascale it would still be considered flagship, since the A40 can only link two and the Blackwell isn’t shipping for a while.

🙂

☑️ #89 May 24, 2024

Huawei AI Chips

@sinocism: From my Thursday newsletter:

⚡️

@WeightyThoughts: It’s still non-trivial to switch from NVIDIA’s ecosystem. Those with access to NVIDIA’s best GPUs don’t have this problem, but now there’s a trade-off between programmer productivity (NVIDIA) and performance (Huawei). There’s a reason why China keeps topping supercomputer rankings with machines that no one actually uses.

It’ll be interesting to see if NVIDIA can get around some of the chip bans on interconnects—which is really the bigger killer of NVIDIA in the China ecosystem. If they can, cheap enough NVIDIA GPUs can be scaled out horizontally.

🙂

☑️ #88 May 23, 2024

Basically one stock controlling the entire market

@daxtradingideas: Just to put NVDA into perspective: basically one stock controlling the entire market. Insane!

🙂

☑️ #87 May 23, 2024

The next industrial revolution has begun

@thetranscript: $NVDA CFO: "Data center revenue of $22.6B was a record, +23% QoQ & +427% YoY, driven by continued strong demand for the NVIDIA Hopper GPU computing platform. Compute revenue grew more than 5x and networking revenue more than 3x. Strong sequential data center growth was driven by all customer types, led by enterprise and consumer Internet companies"

⚡️

@thetranscript: $NVDA CEO: "The next industrial revolution has begun..Our data center growth was fueled by strong & accelerating demand for Gen AI training & inference on the Hopper platform...Blackwell platform is in full production & forms the foundation for trillion-parameter-scale Gen AI"

💲 EARNINGS ANNOUNCEMENT: May 22, 2024

NVIDIA Announces Financial Results for First Quarter Fiscal 2025

NVIDIA announces 10-for-1 stock split, dividend boost of 150%

Cubic Analytics: NVDA Just Did It Again

☑️ #86 May 20, 2024

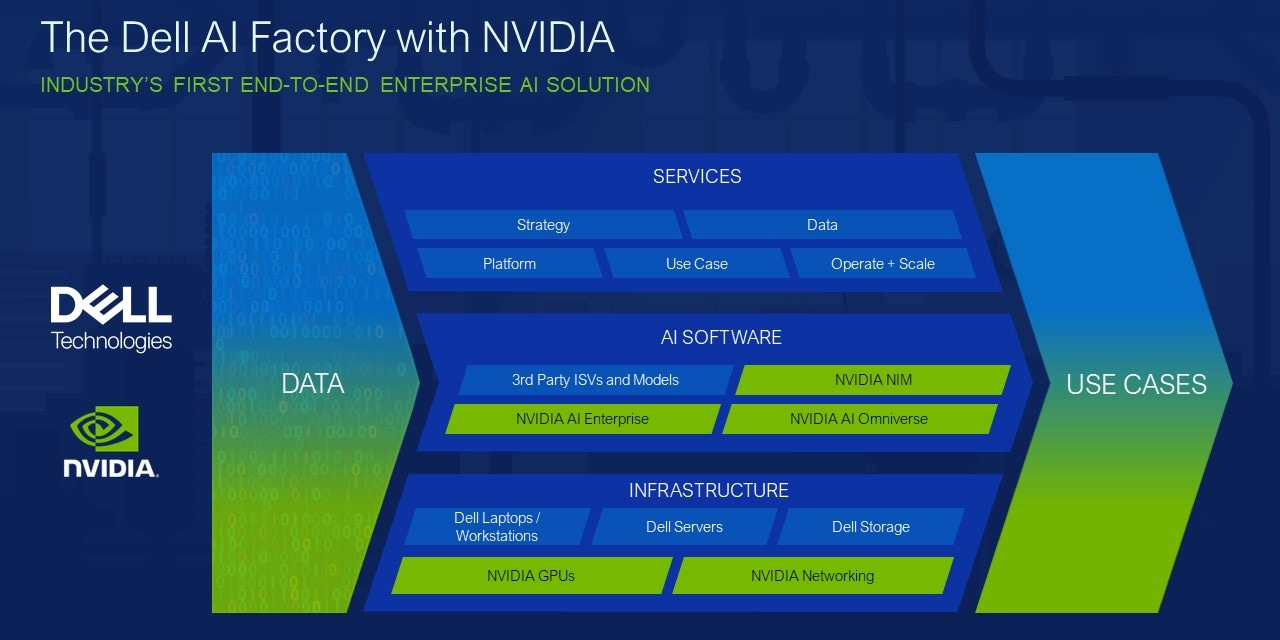

Transform Innovation into Value: The Dell AI Factory with NVIDIA

dell.com: [Excerpt] The Dell AI Factory with NVIDIA turbocharges AI adoption to simplify development, automate workflows and deliver up to 86% faster business outcomes.

The Dell AI Factory, introduced at Dell Technologies World this week, addresses organizations’ most pressing challenges with a portfolio of AI technologies, an open ecosystem of partners, validated and integrated solutions and expert services to help you achieve AI outcomes faster. In March, at NVIDIA’s GTC conference, we announced the Dell AI Factory with NVIDIA, the industry’s first end-to-end enterprise AI solution, as a proven example of the Dell AI Factory, and designed to simplify and accelerate AI adoption within organizations. In fact, the Dell AI Factory with NVIDIA reduces setup time by up to 86% over doing it yourself.¹

🙂

☑️ #85 May 15, 2024

NVIDIA Santa Clara

sfstandard.com: [Excerpt] Nvidia drops $374M to become its own landlord in Santa Clara. The most valuable office tenant in the country ended a quiet bidding war.

Between 2018 and 2022, Nvidia built and opened two nearby office buildings, totaling over 1.2 million square feet. Those buildings, named Voyager and Endeavor (the former a reference to Star Trek and the latter a NASA space shuttle), were developed and owned by Nvidia and were not part of this recent sale.

Despite what one might think, given the size of its structures, Nvidia doesn’t actually manufacture any of its signature chips on-site. Rather, its headquarters is a combination of office, lab and data center space connected by sprawling parks and a network of overhead bridges. Of note, the company still does not require its employees to work in-person, according to reports.

🔹Related content:

2788 San Tomas Expressway

Santa Clara, CA 95051

🙂

☑️ #84 May 14, 2024

Google I/O: NVIDIA Blackwell

@StockMKTNewz: Google $GOOGL CEO Sundar Pichai just said it will be one of the first companies with Nvidia's $NVDA Blackwell GPUs which will be available in early 2025

🔹Related content:

io.google/2024 > Google Keynote (Google I/O ‘24)

🙂

☑️ #83 May 7, 2024

Federal AI Sandbox powered by NVIDIA

@MITRECorp: U.S. agencies often struggle to find the computing infrastructure to conduct meaningful #AI research. Our new Federal AI Sandbox—powered by an @nvidia DGX SuperPOD—will be capable of training cutting-edge AI models for all of our federal sponsors.

⚡️

@MITRECorp: We're building a supercomputer with @nvidia to speed #AI deployment capabilities across the U.S. Our Federal AI Sandbox will allow federal agencies from the Pentagon to the IRS to test cutting-edge technologies. Read the full article on @washingtonpost

washingtonpost.com/technology/2024/05/07/mitre-nvidia-ai-supercomputer-sandbox

🔹Related content:

MITRE Impact Report: MITRE Connects

🙂

☑️ #82 Apr 25, 2024

AI Factory for the New Industrial Revolution | NVIDIA GTC24

@NVIDIA: Discover how NVIDIA technologies are being used to build the AI factories that power the new era of accelerated computing and real-time #generativeAI. https://www.nvidia.com/en-us/data-center/technologies/blackwell-architecture/

🙂

☑️ #81 Apr 24, 2024

Nvidia CEO hand-delivers world's fastest AI system to OpenAI, again — first DGX H200 given to Sam Altman and Greg Brockman

tomshardware.com: [Excerpt] Talk about a special delivery.

Huang signed the supercomputer with the epithet "to advance AI, computing, and humanity". The signature and photo op bring to mind a very similar scene from 2016 when Huang had a very similar delivery for OpenAI - the world's first DGX-1 server handed off to an excited Elon Musk. Back when Musk was a proud member and co-founder of OpenAI, he happily received the DGX-1, also signed with Huang's cheers "to the future of computing and humanity". The gift of the DGX-1 was hailed by Elon and many members of the OpenAI team as a boon that accelerated their research by weeks, and the astronomical leap in performance up to the DGX H200 could have a similar impact.

🔹Related content:

@gdb: First @NVIDIA DGX H200 in the world, hand-delivered to OpenAI and dedicated by Jensen "to advance AI, computing, and humanity"

🙂

☑️ #80 Apr 24, 2024

NVIDIA to acquire GPU Orchestration software provider Run:ai

blogs.nvidia.com: [Transcription] [Excerpt] Israeli startup promotes efficient cluster resource utilization for AI workloads across shared accelerated computing infrastructure.

Managing and orchestrating generative AI, recommender systems, search engines and other workloads requires sophisticated scheduling to optimize performance at the system level and on the underlying infrastructure.

Run:ai enables enterprise customers to manage and optimize their compute infrastructure, whether on premises, in the cloud or in hybrid environments.

🔹Related content:

Make the Most Out of Your NVIDIA AI Investment with Run:ai: From DGX BasePOD to high-end DGX SuperPODs-Run:ai is the ideal platform to train and deploy your models and to operate your DGX infrastructure

🙂

☑️ #79 Mar 26, 2024

The Historic Rise of the Chip Sector... in pictures

StreetSmarts: Plus: Timeline of Boeing's disgrace, the choc-apocalypse in Cocoa prices; and much more.

🙂

☑️ #78 Mar 19, 2024

NVIDIA Waves and Moats

stratechery.com: [Transcription] [Excerpt] What is interesting to a once-and-future old fogey like myself, who has watched multiple Huang keynotes, is how relatively focused this event was: yes, Huang talked about things like weather and robotics and Omniverse and cars, but this was, first-and-foremost, a chip launch — the Blackwell B200 generation of GPUs — with a huge chunk of the keynote talking about its various features and permutations, performance, partnerships, etc.

🙂

☑️ #77 Mar 18, 2024

A New Class of AI Superchip: B200

nvidianews.nvidia.com: [Transcription] [Excerpt] GTC—Powering a new era of computing, NVIDIA today announced that the NVIDIA Blackwell platform has arrived — enabling organizations everywhere to build and run real-time generative AI on trillion-parameter large language models at up to 25x less cost and energy consumption than its predecessor.

🔹Related content:

NVIDIA GTC 2024 Keynote with NVIDIA CEO Jensen Huang

World’s Most Powerful Chip

Packed with 208 billion transistors, Blackwell-architecture GPUs are manufactured using a custom-built 4NP TSMC process with two-reticle limit GPU dies connected by 10 TB/second chip-to-chip link into a single, unified GPU.

🙂

☑️ #76 Mar 18, 2024

NVIDIA Unveils 6G Research Cloud Platform to Advance Wireless Communications With AI

nvidianews.nvidia.com: [Transcription] [Excerpt] Ansys, Keysight, Nokia, Samsung Among First to Use NVIDIA Aerial Omniverse Digital Twin, Aerial CUDA-Accelerated RAN and Sionna Neural Radio Framework to Help Realize the Future of Telecommunications.

GTC—NVIDIA today announced a 6G research platform that empowers researchers with a novel approach to develop the next phase of wireless technology.

The NVIDIA 6G Research Cloud platform is open, flexible and interconnected, offering researchers a comprehensive suite to advance AI for radio access network (RAN) technology. The platform allows organizations to accelerate the development of 6G technologies that will connect trillions of devices with the cloud infrastructures, laying the foundation for a hyper-intelligent world supported by autonomous vehicles, smart spaces and a wide range of extended reality and immersive education experiences and collaborative robots.

Ansys, Arm, ETH Zurich, Fujitsu, Keysight, Nokia, Northeastern University, Rohde & Schwarz, Samsung, SoftBank Corp. and Viavi are among its first adopters and ecosystem partners.

🔹Continue reading | NVIDIA 6G Developer Program

🙂

☑️ #75 Mar 18, 2024

AWS and NVIDIA Extend Collaboration to Advance Generative AI Innovation

press.aboutamazon.com: [Transcription] [Excerpt] SAN JOSE, Calif.--(BUSINESS WIRE)-- GTC—Amazon Web Services (AWS), an Amazon.com company (NASDAQ: AMZN), and NVIDIA (NASDAQ: NVDA) today announced that the new NVIDIA Blackwell GPU platform—unveiled by NVIDIA at GTC 2024—is coming to AWS. AWS will offer the NVIDIA GB200 Grace Blackwell Superchip and B100 Tensor Core GPUs, extending the companies’ longstanding strategic collaboration to deliver the most secure and advanced infrastructure, software, and services to help customers unlock new generative artificial intelligence (AI) capabilities.

🙂

☑️ #74 Mar 18, 2024

Jensen Huang: ‘We Created a Processor for the Generative AI Era’

@NVIDIA: Watch NVIDIA CEO Jensen Huang’s GTC keynote to catch all the announcements on AI advances that are shaping our future.

GTC March 2024 Keynote with NVIDIA CEO Jensen Huang

🙂

☑️ #73 Mar 8, 2024

Authors Sue NVIDIA Over NeMo AI’s Copying Of Copyrighted Works

cand.uscourts.gov: [Summarizer] Nazemian et al v. NVIDIA Corporation.

NVIDIA is facing a lawsuit because it allegedly used copyrighted content without permission to train its artificial intelligence systems. Three authors brought the case in a California court, claiming the tech giant included their copyrighted works in the training dataset of its NeMo AI platform.

Case Number: 3:2024cv01454

Court: U.S. District Court for the Northern District of California

Represented by: Joseph R. Saveri

Screenshot. Source: United States District Court. Northern District of California (pdf)

🔹Related content:

cand.uscourts.gov: Cases of Interest

dockets.justia.com: Dockets & Filings

unicourt.com: Court Documents

🙂

☑️ #72 Feb 22, 2024

Nvidia Market Cap Surpasses Size of Canada's Economy

@DeItaone: Nvidia Market Cap Surpasses Size of Canada's Economy.

Chip maker Nvidia's latest jump in share price gives it a market capitalization that now surpasses the size of the Canadian economy. Seasonally-adjusted real GDP for Canada, a Group of Seven economy, was C$2.349 trillion, or the equivalent of $1.74 trillion, as of 3Q. Data for 4Q is scheduled for release next week. In Thursday morning trading, Nvidia's market capitalization rose $238 billion to $1.925 trillion.

🙂

☑️ #71 Feb 22, 2024

Top 1 Biggest Single-Day Market Cap Gains

@AlphaPicks: Nvidia has done it!

💲 EARNINGS ANNOUNCEMENT: Feb 21, 2024

NVIDIA Announces Financial Results for Fourth Quarter and Fiscal 2024

☑️ #70 Feb 20, 2024

Nokia, Nvidia reach deal to focus on AI-based telecoms solutions

nokia.com: [Transcription] [Excerpt] Nokia to Revolutionize Mobile Networks with Cloud RAN and AI Powered by NVIDIA.

Espoo, Finland – Nokia today announced that it is collaborating with NVIDIA to revolutionize the future of AI-ready radio access network (RAN) solutions. The collaboration, which further enhances Nokia’s anyRAN approach, aims to position AI as fundamental to transforming the future of the telecommunications network business. As AI is poised to change the landscape of telecommunications infrastructure and services within the mobile operator sector, this collaboration aims to deliver incremental value to end users through the introduction of innovative telco AI services.

🙂

☑️ #69 Feb 14, 2024

Introducing Chat with RTX

@NVIDIAGeForce: Create A Personalized AI Chatbot with Chat With RTX.

Create a personalized chatbot with the Chat with RTX tech demo. Accelerated by TensorRT-LLM and Tensor Cores, you can quickly get tailored info from your files and content. Just connect your data to an LLM on RTX-Powered PCs for local, fast, generative AI.

🙂

☑️ #68 Feb 6, 2024

Top NVDA customers by revenue

@SupBagholder: $NVDA's top 4 customers account for 40% of revenues, and every one of them is actively working on their own custom AI silicon. AI capex will keep flowing to NVDA in the short run, but what will happen when initial training is done and inference is done locally?

🙂

☑️ #67 Jan 29, 2024

Kore.ai Secures $150 Million Strategic Growth Investment to Drive AI-powered Customer and Employee Experiences for Global Brands

kore.ai: [Transcription] [Excerpt] Led by FTV Capital, with participation from NVIDIA and existing investors, funding will further solidify Kore.ai’s leading position in explosive advanced AI market

ORLANDO, Fla., January 30, 2024 — Kore.ai, a leader in enterprise conversational and generative AI platform technology, today announced $150 million in funding. The strategic growth investment was led by FTV Capital, a sector-focused growth equity investor with a successful 25+ year track record investing across enterprise technology, along with participation from NVIDIA and existing investors such as Vistara Growth, Sweetwater PE, NextEquity, Nicola and Beedie. The new funding will accelerate Kore.ai’s market expansion and continuous innovation in AI to deliver tangible business and human value at scale.

🙂

☑️ #66 Jan 29, 2024

98% market share in data center GPUs

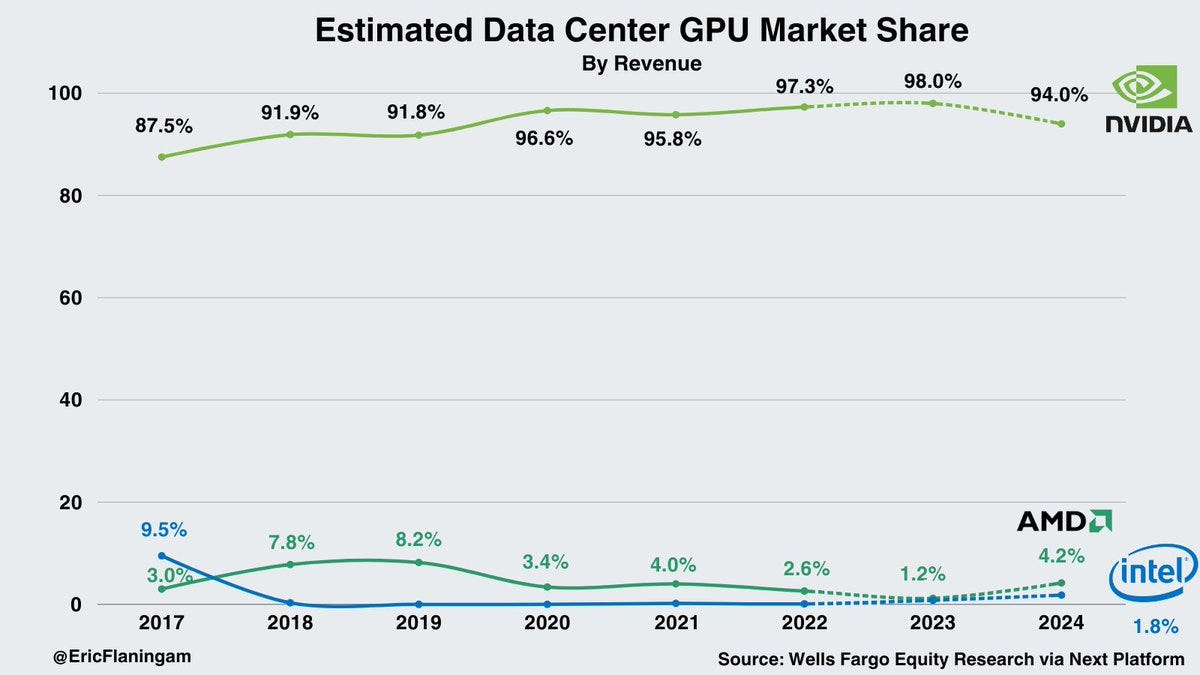

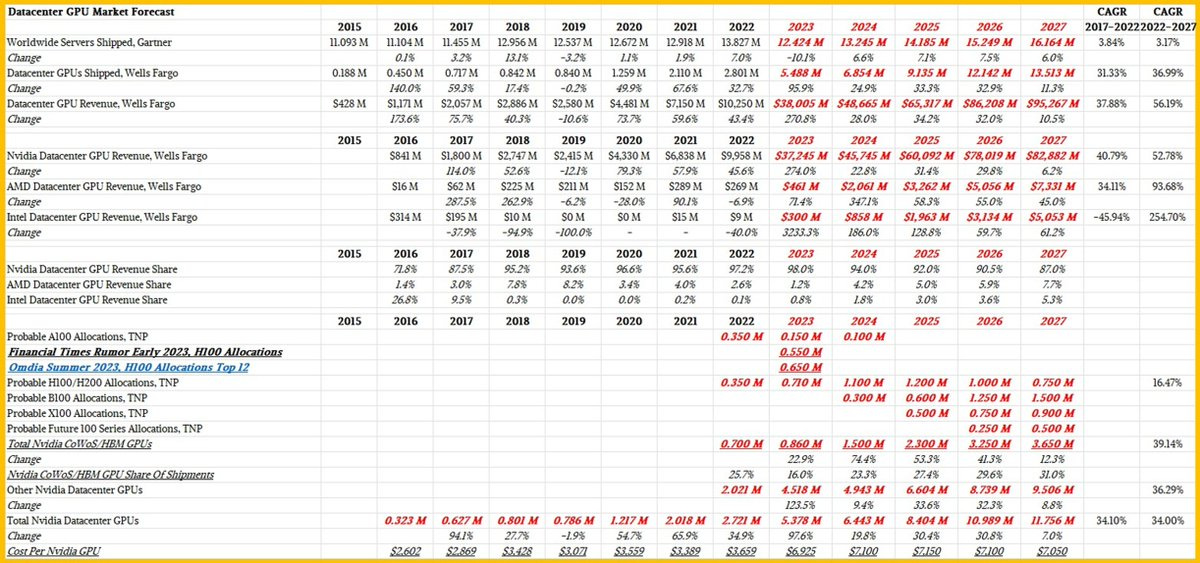

@EricFlaningam: Estimated Data Center GPU Market Share:

There’s a lot of talk about the future of data center GPUs and competition for $NVDA.

Based on estimates from Wells Fargo Equity Research, $NVDA currently has a 98% market share in data center GPUs.

That number is projected to drop to 94-96% in 2024.

Nvidia is projected to earn $37.2B and $45.7B in data center GPU revenue for 2023 and 2024.

For AMD, those numbers are $461M and $2.1B.

Note: the full year 2023 and 2024 data is an estimate from analysts and will likely come down to how much capacity $NVDA $AMD and $INTC can acquire. The actual numbers should be considered with a margin of error.

⚡️

@EricFlaningam: It’s remarkable what Jensen has built with Nvidia’s software and networking ecosystem around the GPUs.

Data from Wells Fargo Equity Research via Next Platform shown below

🙂

☑️ #65 Jan 19, 2024

Meta to Buy 350k NVIDIA GPUs to Train AI Models Like Llama 3 for AGI

Synthedia: More models are coming and they will be integrated with smart glasses.

🙂

☑️ #64 Jan 4, 2024

2023 Year in Review: The Great GPU Shortage and the GPU Rich/Poor

datagravity.dev: NVIDIA becomes the 5th hyperscaler to join the $T+ market capitalization club driven by surging demand from clouds, GPU clouds, big tech and AI startups.

🙂

☑️ #63 Dec 18, 2023 🔴 rumor

NVIDIA GeForce RTX 4090 D China-Exclusive GPU Rumored To Launch On 28th December

expreview.com: [Transcription] [Excerpt] [Translated] Nvidia RTX 4090 D will be released at 10 p.m. on the 28th of this month.

In order to adapt to the new export control of the U.S. government on cutting-edge artificial intelligence (AI) chips, Nvidia will launch a special Chinese version of GeForce RTX 4090 D equipped with AD102-250 to replace the flagship product GeForce RTX 4090 on the restricted list.

According to reliable information, the release time of Nvidia GeForce RTX 4090 D will be set at 10 p.m. Beijing time on December 28, 2023.

🙂

☑️ #62 Dec 4, 2023

Modular partners with NVIDIA to bring GPUs to the MAX Platform

modular.com: [Transcription] [Excerpt] Today, Modular is excited to announce an exclusive technology partnership with NVIDIA, the world leader in AI accelerated compute to bring the power of Modular Accelerated Execution (MAX) Platform to their hardware. This will enable developers and enterprises to build and ship AI into production like never before, by unifying and simplifying the AI software stack through MAX, enabling unparalleled performance, usability, and extensibility to make scalable AI a reality.

🔹Continue reading | Max Platform

Available in Q1 2024

- Expanding our partnership to bring MAX to NVIDIA customers.

- Targeting NVIDIA A100's, H100's & H200's, L40's & many more.

🙂

☑️ #61 Dec 1, 2023

One tiny country drove 15% of Nvidia’s revenue – here’s why it needs so many chips

cnbc.com: [Transcription] [Excerpt] About 15% or $2.7 billion of Nvidia’s revenue for the quarter ended October came from Singapore, a U.S. Securities and Exchange Commission filing showed. Revenue coming from Singapore in the third quarter jumped 404.1% from the $562 million in revenue recorded in the same period a year ago. This outpaced Nvidia’s overall revenue growth of 205.5% from a year ago.

🔹Continue reading | Related content:

🙂

☑️ #60 Nov 29, 2023

DealBook Summit 2023 - The New York Times Events

cnbc.com: [Transcription] [Excerpt] Nvidia CEO: U.S. chipmakers at least a decade away from China supply chain independence.

Key Points:

U.S. chipmakers are at least a decade away from “supply chain independence” in China, Nvidia CEO Jensen Huang thinks.

Huang described it as an “absolutely” necessary effort for U.S. national security.

Nvidia has been the subject of increasingly stricter export controls, limiting its ability to send its most advanced chips to China.

🔹Continue reading | Related content:

🙂

☑️ #59 Nov 28, 2023

AWS and NVIDIA Announce Strategic Collaboration to Offer New Supercomputing Infrastructure, Software, and Services for Generative AI

nvidianews.nvidia.com: [Transcription] [Excerpt] LAS VEGAS--(BUSINESS WIRE)--At AWS re:Invent, Amazon Web Services, Inc. (AWS), an Amazon.com, Inc. company (NASDAQ: AMZN), and NVIDIA (NASDAQ: NVDA) today announced an expansion of their strategic collaboration to deliver the most advanced infrastructure, software, and services to power customers’ generative artificial intelligence (AI) innovations.

The companies will bring together the best of NVIDIA and AWS technologies—from NVIDIA’s newest multi-node systems featuring next-generation GPUs, CPUs, and AI software, to AWS Nitro System advanced virtualization and security, Elastic Fabric Adapter (EFA) interconnect, and UltraCluster scalability—that are ideal for training foundation models and building generative AI applications.

AWS to offer first cloud AI supercomputer with NVIDIA Grace Hopper Superchip and AWS UltraCluster scalability.

💲 EARNINGS ANNOUNCEMENT: Nov 21, 2023

🔹Related content:

☑️ #58 Nov 13, 2023 (archives)

NVIDIA Supercharges Hopper (H200), the World’s Leading AI Computing Platform

nvidianews.nvidia.com: [Transcription] [Excerpts] HGX H200 Systems and Cloud Instances Coming Soon From World’s Top Server Manufacturers and Cloud Service Providers.

The NVIDIA H200 is the first GPU to offer HBM3e — faster, larger memory to fuel the acceleration of generative AI and large language models, while advancing scientific computing for HPC workloads. With HBM3e, the NVIDIA H200 delivers 141GB of memory at 4.8 terabytes per second, nearly double the capacity and 2.4x more bandwidth compared with its predecessor, the NVIDIA A100
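A rough way to see why the bandwidth figure matters for inference: when a model generates one token at a time, each step has to read the full set of weights from GPU memory, so memory bandwidth caps the token rate. The sketch below is back-of-the-envelope arithmetic, not a benchmark. The 141GB, 4.8 TB/s, and 2.4x figures come from the excerpt. The 70-billion-parameter model stored at one byte per weight is a hypothetical example, and the function name is made up for illustration.

```python
def max_decode_tokens_per_second(bandwidth_tb_s, params_billion, bytes_per_param):
    """Upper bound on single-stream decode speed if every generated token
    requires streaming all model weights from GPU memory once.
    Ignores compute time, the KV cache, and activations."""
    model_bytes = params_billion * 1e9 * bytes_per_param
    return bandwidth_tb_s * 1e12 / model_bytes


# Figures taken from the excerpt.
H200_BANDWIDTH_TB_S = 4.8
H200_MEMORY_GB = 141
# Implied by "2.4x more bandwidth" than the predecessor.
PREDECESSOR_BANDWIDTH_TB_S = H200_BANDWIDTH_TB_S / 2.4

# Hypothetical model, chosen only for illustration.
MODEL_PARAMS_BILLION = 70
BYTES_PER_PARAM = 1  # for example, 8-bit weights

model_size_gb = MODEL_PARAMS_BILLION * BYTES_PER_PARAM
fits_on_one_gpu = model_size_gb <= H200_MEMORY_GB

h200_rate = max_decode_tokens_per_second(
    H200_BANDWIDTH_TB_S, MODEL_PARAMS_BILLION, BYTES_PER_PARAM)
predecessor_rate = max_decode_tokens_per_second(
    PREDECESSOR_BANDWIDTH_TB_S, MODEL_PARAMS_BILLION, BYTES_PER_PARAM)

print(f"weights: {model_size_gb} GB, fits on one GPU: {fits_on_one_gpu}")
print(f"H200 ceiling: {h200_rate:.1f} tokens/s")
print(f"predecessor ceiling: {predecessor_rate:.1f} tokens/s")
```

In this simplified model the ceiling scales directly with bandwidth, so the 2.4x bandwidth gain becomes a 2.4x higher bound on tokens per second for small-batch inference.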

🔹Related content:

news.ycombinator.com (123 comments)

A GPU that may speed up ChatGPT: more memory capacity and bandwidth.

- Smaller batch sizes (i.e. inference) will be faster.

- Larger batch sizes (i.e. training) will be possible (?)

☑️ #57 Oct 30, 2023 🔴 rumor (archives)

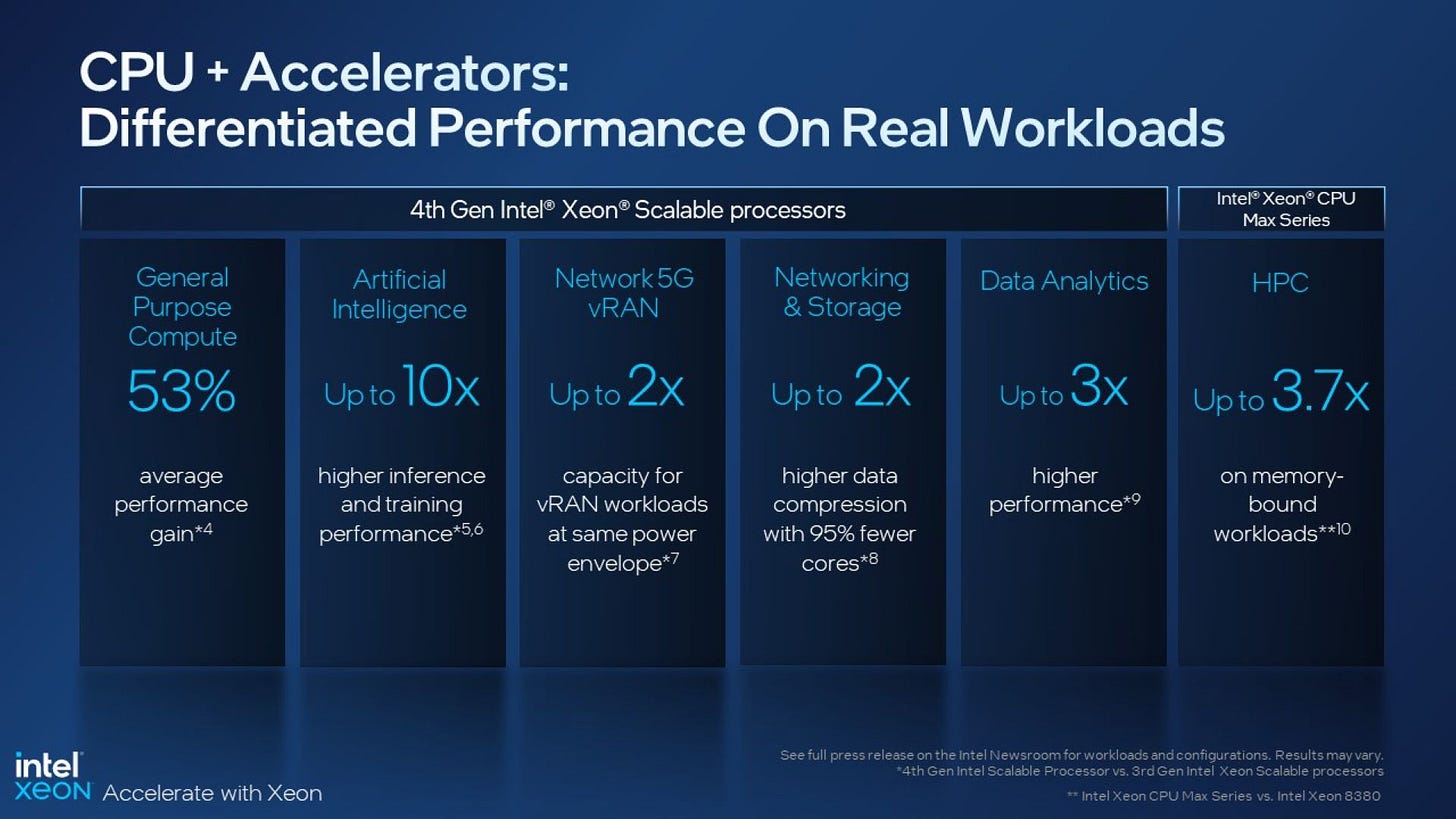

Naver replaces Nvidia GPU with Intel CPU for its AI map app server

kedglobal.com: [Transcription] [Excerpts] The Naver-Intel tie-up will likely diminish Nvidia’s clout in the AI processor market, analysts say

South Korea’s top web portal giant Naver Corp. has replaced the main chip supplier of its artificial intelligence server for its map service, Naver Place, from Nvidia Corp. to Intel Corp.

Naver has so far used Nvidia’s graphic processing unit (GPU)-based server to run its AI-powered location information provision service but recently replaced it with Intel’s central processing unit (CPU)-based server*, people familiar with the matter said on Monday.

🔹Related content:

*Based on the formerly codenamed Sapphire Rapids Architecture

🙂

☑️ #56 Oct 30, 2023 (archives)

Silicon Volley: Designers Tap Generative AI for a Chip Assist

blogs.nvidia.com: [Transcription] [Excerpts] Semiconductor engineers show how a specialized industry can customize large language models to gain an edge using NVIDIA NeMo.

Few pursuits are as challenging as semiconductor design. Under a microscope, a state-of-the-art chip like an NVIDIA H100 Tensor Core GPU (above) looks like a well-planned metropolis, built with tens of billions of transistors, connected on streets 10,000x thinner than a human hair.

🔹Related content:

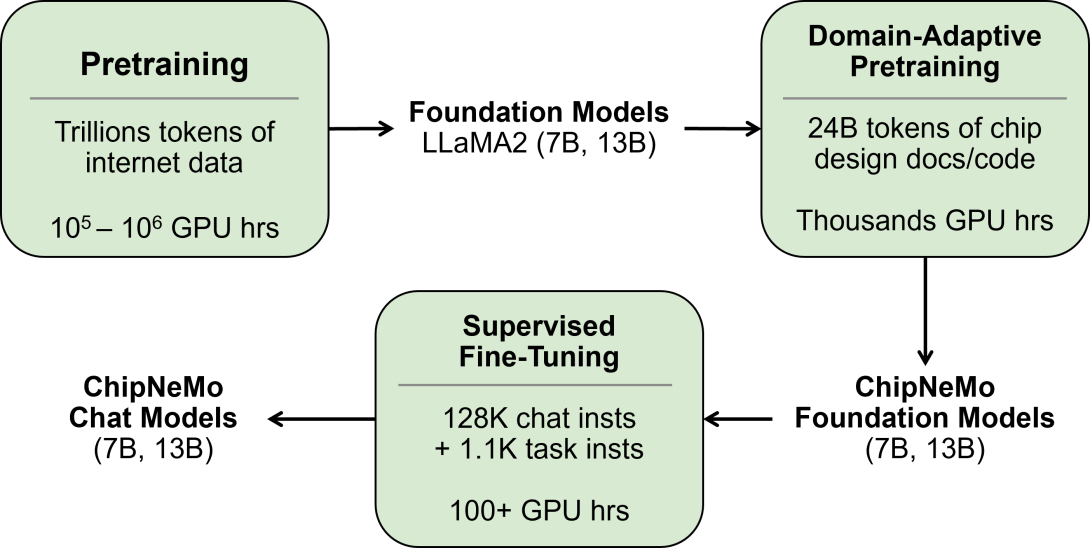

ChipNeMo: Domain-Adapted LLMs for Chip Design: [Transcription] [Abstract] ChipNeMo aims to explore the applications of large language models (LLMs) for industrial chip design. Instead of directly deploying off-the-shelf commercial or open-source LLMs, we instead adopt the following domain adaptation techniques: custom tokenizers, domain-adaptive continued pretraining, supervised fine-tuning (SFT) with domain-specific instructions, and domain-adapted retrieval models. We evaluate these methods on three selected LLM applications for chip design: an engineering assistant chatbot, EDA script generation, and bug summarization and analysis. Our results show that these domain adaptation techniques enable significant LLM performance improvements over general-purpose base models across the three evaluated applications, enabling up to 5x model size reduction with similar or better performance on a range of design tasks. Our findings also indicate that there’s still room for improvement between our current results and ideal outcomes. We believe that further investigation of domain-adapted LLM approaches will help close this gap in the future.

🙂

☑️ #55 Oct 19, 2023 (archives)

Bringing Generative AI to Life with NVIDIA Jetson

developer.nvidia.com: [Transcription] [Excerpts] Recently, NVIDIA unveiled Jetson Generative AI Lab, which empowers developers to explore the limitless possibilities of generative AI in a real-world setting with NVIDIA Jetson edge devices. Unlike other embedded platforms, Jetson is capable of running large language models (LLMs), vision transformers, and stable diffusion locally.

🔹Related content:

NVIDIA Jetson Generative AI Lab:

Text Generation: Run LLM-based chat bot on Jetson.

Text + Vision: Run multimodal Vision-Language models to give your AI access to vision.

Image Generation: Run diffusion models to generate stunning images interactively on Jetson.

Distillation: Learn a technique to bring the power of a large foundation model to Jetson by knowledge distillation.

NanoSAM: SAM (Segment Anything Model) and other Vision Transformers optimized to run in realtime.

NanoDB: Multimodal vector database that uses embeddings for txt2img and img2img similarity search.

NVIDIA Webinar: Bringing Generative AI to Life with NVIDIA Jetson (Nov 7, 2023)

🙂

☑️ #54 Oct 19, 2023 (archives)

Optimizing Inference on Large Language Models with NVIDIA TensorRT-LLM, Now Publicly Available

developer.nvidia.com: [Transcription] [Excerpts] Put simply, LLMs are large. That fact can make them expensive and slow to run without the right techniques.

Many optimization techniques have risen to deal with this, from model optimizations like kernel fusion and quantization to runtime optimizations like C++ implementations, KV caching, continuous in-flight batching, and paged attention. It can be difficult to decide which of these are right for your use case, and to navigate the interactions between these techniques and their sometimes-incompatible implementations.

That’s why NVIDIA introduced TensorRT-LLM, a comprehensive library for compiling and optimizing LLMs for inference. TensorRT-LLM incorporates all of those optimizations and more while providing an intuitive Python API for defining and building new models.
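Of the runtime techniques listed above, KV caching is the easiest to show in miniature. The sketch below is a toy, single-head illustration in plain Python and is not TensorRT-LLM's implementation or API. Every name, weight, and token vector in it is hypothetical. It compares regenerating keys and values for the whole prefix at each decoding step against projecting each token once and reusing the stored results.

```python
import math


def project(vector, matrix):
    """Multiply a row vector by a matrix stored as a list of rows."""
    return [sum(x * row[j] for x, row in zip(vector, matrix))
            for j in range(len(matrix[0]))]


def attention(query, keys, values):
    """Single-head scaled dot-product attention for one query vector."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    peak = max(scores)  # subtract the max for a numerically stable softmax
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    return [sum(w * v[i] for w, v in zip(weights, values)) / total
            for i in range(len(values[0]))]


def decode_without_cache(tokens, w_q, w_k, w_v):
    """Recompute keys and values for the whole prefix at every step."""
    outputs, projections = [], 0
    for step in range(1, len(tokens) + 1):
        prefix = tokens[:step]
        keys = [project(x, w_k) for x in prefix]
        values = [project(x, w_v) for x in prefix]
        projections += 2 * step  # counts key/value projections only
        outputs.append(attention(project(prefix[-1], w_q), keys, values))
    return outputs, projections


def decode_with_cache(tokens, w_q, w_k, w_v):
    """Project each token once and keep its key and value for later steps."""
    cached_keys, cached_values = [], []
    outputs, projections = [], 0
    for x in tokens:
        cached_keys.append(project(x, w_k))
        cached_values.append(project(x, w_v))
        projections += 2  # counts key/value projections only
        outputs.append(attention(project(x, w_q), cached_keys, cached_values))
    return outputs, projections


# Hypothetical toy data: four 2-dimensional token embeddings and 2x2 weights.
TOKENS = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0], [1.0, 1.0]]
W_Q = [[1.0, 0.0], [0.0, 1.0]]
W_K = [[0.5, 0.1], [0.2, 0.7]]
W_V = [[1.0, 2.0], [3.0, 4.0]]

naive_out, naive_projections = decode_without_cache(TOKENS, W_Q, W_K, W_V)
cached_out, cached_projections = decode_with_cache(TOKENS, W_Q, W_K, W_V)
print(naive_projections, cached_projections)
```

For four tokens the uncached loop performs 20 key/value projections and the cached loop performs 8, with identical attention outputs. The gap grows quadratically with sequence length, which is the cost the cache trades memory to avoid.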

🙂

☑️ #53 Oct 17, 2023 (archives)

AMD, Arm, Intel, Meta, Microsoft, NVIDIA, and Qualcomm Standardize Next-Generation Narrow Precision Data Formats for AI

opencompute.org: [Transcription] [Excerpts] Realizing the full potential of next-generation deep learning requires highly efficient AI infrastructure. For a computing platform to be scalable and cost efficient, optimizing every layer of the AI stack, from algorithms to hardware, is essential. Advances in narrow-precision AI data formats and associated optimized algorithms have been pivotal to this journey, allowing the industry to transition from traditional 32-bit floating point precision to presently only 8 bits of precision (i.e. OCP FP8).

Narrower formats allow silicon to execute more efficient AI calculations per clock cycle, which accelerates model training and inference times. AI models take up less space, which means they require fewer data fetches from memory, and can run with better performance and efficiency. Additionally, fewer bit transfers reduces data movement over the interconnect, which can enhance application performance or cut network costs.
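The storage side of that argument is simple arithmetic: the bytes needed for a model's weights scale linearly with the bits per value. A minimal sketch, assuming a hypothetical 7-billion-parameter model (the parameter count is not from the source, and the function name is made up for illustration):

```python
def weight_footprint_gb(num_params: int, bits_per_value: int) -> float:
    """Storage needed for the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bits_per_value / 8 / 1e9


# Hypothetical model size, chosen only for illustration.
HYPOTHETICAL_PARAMS = 7_000_000_000

footprints = {bits: weight_footprint_gb(HYPOTHETICAL_PARAMS, bits)
              for bits in (32, 16, 8, 4)}

for bits, gigabytes in footprints.items():
    print(f"{bits:>2}-bit weights: {gigabytes:5.1f} GB")
```

Going from 32-bit to 8-bit values cuts the weight storage, and the data that must be fetched per pass, by a factor of four.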

🔹Continue reading | Related content:

azure.microsoft.com: Fostering AI infrastructure advancements through standardization

Microscaling Formats (MX) Alliance

Earlier this year, AMD, Arm, Intel, Meta, Microsoft, NVIDIA, and Qualcomm Technologies, Inc. formed the Microscaling Formats (MX) Alliance with the goal of creating and standardizing next-generation 6- and 4-bit data types for AI training and inferencing.
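The general idea behind microscaling can be sketched as block-wise quantization: a small group of values shares one scale factor, and each value is stored in only a few bits. The code below is a simplified illustration and not the MX specification. It assumes blocks of 32 values, a shared power-of-two scale stored in 8 bits, and signed 4-bit integer elements, none of which are stated in the excerpt. All function names are hypothetical.

```python
import math


def quantize_block(block, element_bits=4):
    """Quantize one block to signed integers sharing a power-of-two scale.

    Returns (exponent, codes), where the shared scale is 2**exponent."""
    q_max = 2 ** (element_bits - 1) - 1  # 7 for 4-bit signed codes
    largest = max(abs(x) for x in block)
    if largest == 0.0:
        return 0, [0] * len(block)
    # Smallest power-of-two scale that keeps the largest value within q_max.
    exponent = math.ceil(math.log2(largest / q_max))
    scale = 2.0 ** exponent
    codes = [max(-q_max, min(q_max, round(x / scale))) for x in block]
    return exponent, codes


def dequantize_block(exponent, codes):
    """Recover approximate values from the shared exponent and the codes."""
    scale = 2.0 ** exponent
    return [code * scale for code in codes]


def quantize(values, block_size=32, element_bits=4):
    """Split a flat list into blocks and quantize each one independently."""
    return [quantize_block(values[i:i + block_size], element_bits)
            for i in range(0, len(values), block_size)]


def bits_per_block(block_size=32, element_bits=4, scale_bits=8):
    """Storage for one block: the elements plus one shared scale."""
    return block_size * element_bits + scale_bits


# Hypothetical example values (a short block for readability).
example = [0.6, -1.5, 3.5, 0.0]
exponent, codes = quantize_block(example)
restored = dequantize_block(exponent, codes)
print(exponent, codes, restored)
print(bits_per_block(), "bits per 32-value block vs", 32 * 32, "bits in FP32")
```

Because the scale is shared, its storage cost is spread across the block: under these assumptions a block takes 136 bits, or 4.25 bits per value, while each value's rounding error stays within half the block's scale.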

🙂

☑️ #52 Oct 15, 2023 🔴 rumor (archives)

NVIDIA Blackwell B100 GPUs To Feature SK Hynix HBM3e Memory, Launches In Q2 2024 Due To Rise In AI Demand

wccftech.com: [Transcription] [Excerpts] NVIDIA has reportedly moved the launch of its next-gen Blackwell B100 GPUs up from Q4 to Q2 2024 following a huge surge in AI demand. The company is also expected to utilize HBM3e DRAM from SK Hynix for its latest chips.

SK Hynix Reportedly Secures HBM3e Deal With NVIDIA, Will Be Used To Power Next-Gen Blackwell GPUs Coming In Q2 2024

According to a report by South Korean media outlet MT.co.kr, SK Hynix has secured a deal to exclusively supply NVIDIA with its latest HBM3e memory, which will be used to power next-generation Blackwell GPUs. This will help SK Hynix become a lead semiconductor supplier in the AI industry.

NVIDIA already accounts for over 90% of the AI GPU market with its Ampere A100 and Hopper H100 GPUs and Blackwell B100 is going to further cement the green team as the undisputed leader of the AI world.

🔹Continue reading | Related content:

🙂

☑️ #51 Sep 9, 2023 (archives)

NVIDIA TensorRT-LLM Supercharges Large Language Model Inference on NVIDIA H100 GPUs

developer.nvidia.com: [Transcription] [Excerpts] Large language models offer incredible new capabilities, expanding the frontier of what is possible with AI. But their large size and unique execution characteristics can make them difficult to use in cost-effective ways.

NVIDIA has been working closely with leading companies, including Meta, Anyscale, Cohere, Deci, Grammarly, Mistral AI, MosaicML, now a part of Databricks, OctoML, Tabnine, and Together AI, to accelerate and optimize LLM inference.

Those innovations have been integrated into the open-source NVIDIA TensorRT-LLM software, available for Ampere, Lovelace, and Hopper GPUs and set for release in the coming weeks.
