💲NVDA

A comprehensive snapshot of NVIDIA’s major recent developments

🟩 SP5 > Nvidia > Latest > NVDA 0.00%↑

☑️ #272 Nov 9, 2025

[Topic: Dysprosium] US curbs block Nvidia’s best AI chips from China (requiring export licenses for even downgraded H20s). China counters by weaponizing its rare-earth chokehold, potentially spiking costs or halting advanced GPU output worldwide

grok.com (Expert) > [Sources not verified. Do your own research] TSMC needs export licenses from China to make Nvidia chips. China produces 99% of Dysprosium used in Nvidia chips. Please, could you offer a concise POV about it?

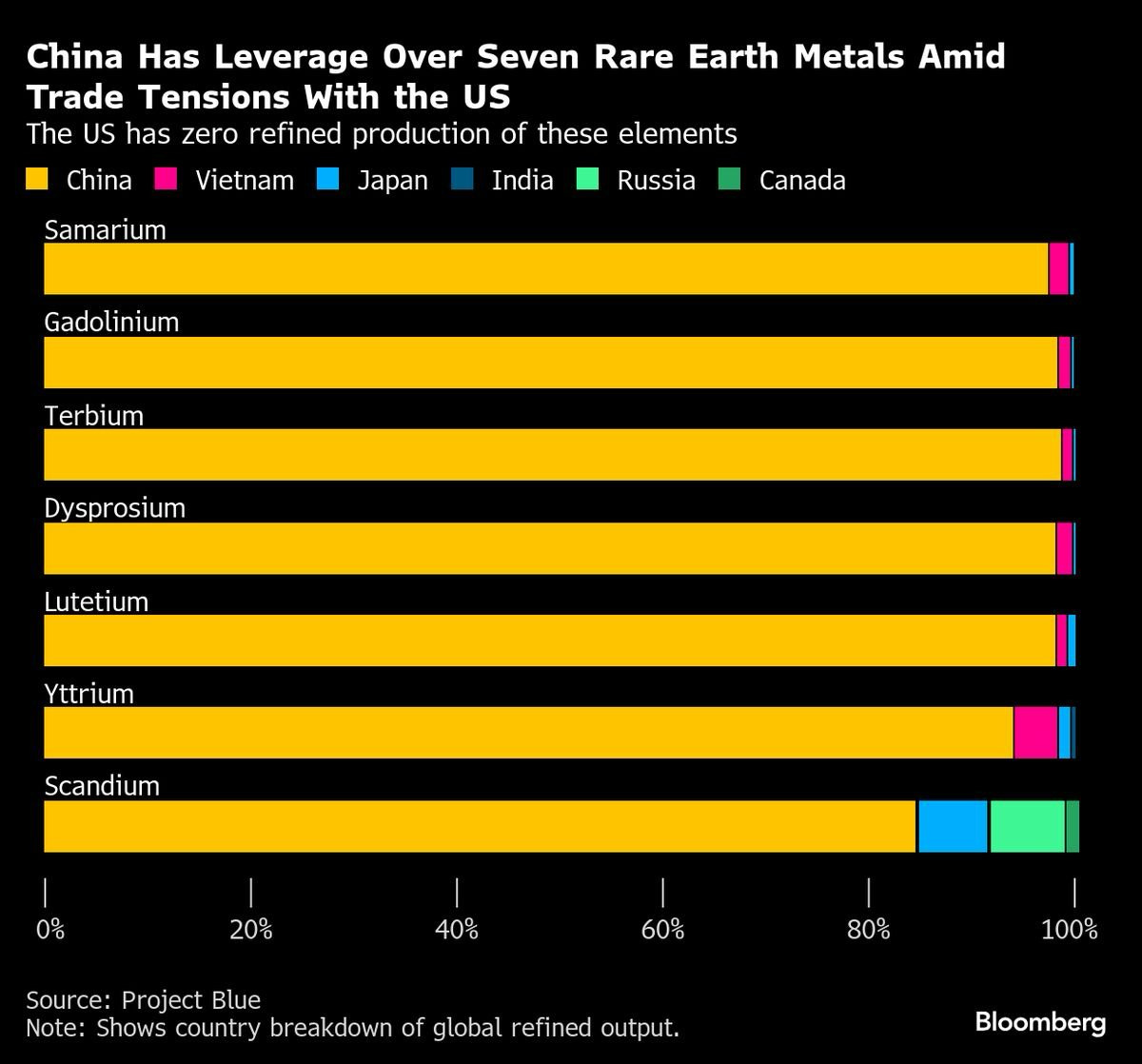

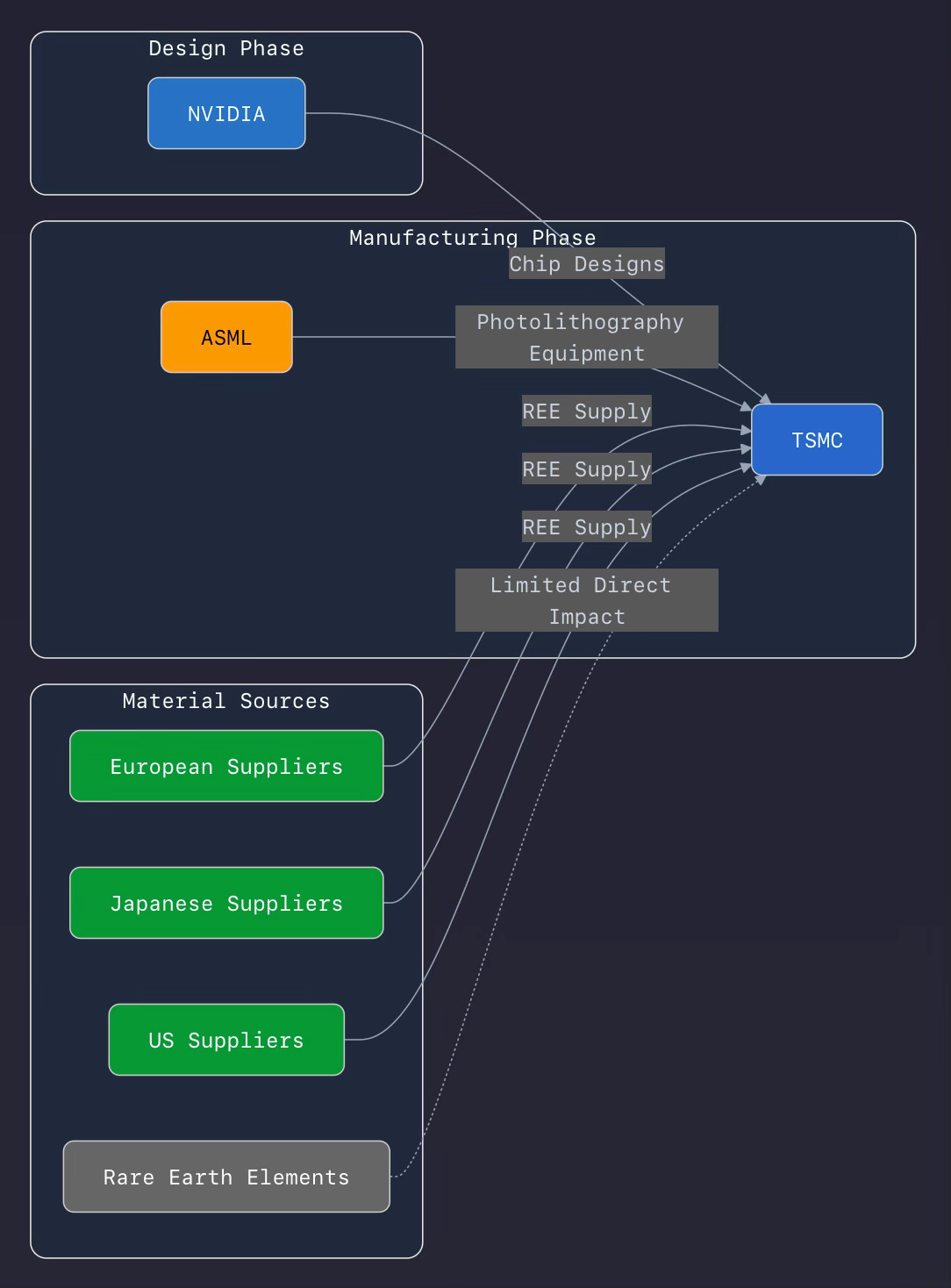

China processes ~99% of global dysprosium. This heavy rare earth element is now critical for ultrapure capacitors in advanced semiconductors (including Nvidia’s AI GPUs), due to its heat-resistant properties in high-performance chips.

TSMC doesn’t directly need Chinese licenses “to make” Nvidia chips—manufacturing occurs in Taiwan—but recent Chinese export controls (effective November 8, 2025, on dysprosium and other rare earths) mean global suppliers require Beijing’s approval to ship these materials outside China. Without them, TSMC’s supply chain for cutting-edge nodes could face disruptions, indirectly giving China veto power over Nvidia/TSMC production.

+ Related content:

@thevalueist (10/11/25): $NVDA $AMD $ASML $TSM The Bloomberg chart underscores that China controls a near-monopoly share of refined output for 7 heavy rare earths that are disproportionately used to raise magnet coercivity and temperature tolerance—dysprosium, terbium, gadolinium, samarium, lutetium, yttrium, and scandium—while the U.S. has 0% refined production for these elements. On magnets, China’s market power is even greater at the conversion step: recent IEA data and industry trackers place China’s share of rare-earth magnet output around 85–90%, reflecting choke points at separation and sintered NdFeB magnet manufacturing. This concentration makes any licensing regime or port-of-exit friction function like a quantity restraint on precision motion systems and magnetron assemblies across semiconductor equipment, even when mining tonnage is not the constraint. The combination of functional dominance in magnet manufacturing and zero U.S. refined output for these 7 elements is exactly the profile that gives Beijing maximal leverage over high-spec magnets and sputtering target alloys used in fabs.

China’s latest rules move the rare earths dispute from symbolic export signaling to a compliance-heavy system with extraterritorial reach. Beijing now requires licenses not just for Chinese-origin exports but for foreign-made items that contain Chinese rare earths or were produced using Chinese rare-earth technology, with defense uses denied and semiconductor uses reviewed case by case. Several reports and translations of MOFCOM’s notice describe a de minimis trigger at 0.1% of product value, which is intentionally low for complex assemblies and places the burden of proof on exporters; enforcement uncertainty notwithstanding, noncompliance carries the commercial risk of being cut off by Chinese suppliers. This architecture is designed to insert time, documentation risk, and legal uncertainty into multi-tier supply chains, and it explicitly names semiconductor and advanced AI applications as sensitive, raising the probability of selective, time-variable denials.

Semiconductor equipment is unusually exposed because it embeds rare-earth magnets and related materials in multiple subsystems: high-precision wafer and reticle stages driven by permanent-magnet linear motors; turbomolecular and dry vacuum pumps whose motors and magnetic bearings use rare earths; laser subsystems and magnetron sputtering sources where yttrium, terbium, and gadolinium appear as dopants or target constituents; and numerous ancillary actuators. ASML has already indicated it is preparing for weeks-long shipment delays under the new regime, and Reuters reporting confirms that semiconductor-linked applications will face case-by-case licensing, with blanket denials for defense users. Even short licensing delays propagate as installation and qualification slippage and can push out foundry capacity ramps by 2–8 weeks, particularly for new scanner placements needed to extend 3–5 nm output and to ready 2 nm and High-NA nodes. The core point is not outright scarcity today but the creation of a “shadow quota” via licensing lead-times on parts that lack easy substitution, which raises working-capital needs and the effective cost of time in capex schedules.

The direct implications for Nvidia operate through 4 channels: wafer supply, packaging and memory availability, China market access, and U.S. tariff exposure on China-origin content. First, Nvidia’s flagship data center GPUs ride TSMC advanced nodes that depend on EUV and deep-UV tool availability; if Chinese-origin magnets or target materials in scanners or deposition tools require new licenses, incremental tool shipments and spares to Taiwan, Korea, the U.S., and Japan can be delayed, slowing incremental wafer capacity adds. Weeks of slippage at ASML translate into later scanner arrival, later installation, and later release-to-production dates at foundries, which in turn shift out Nvidia’s volume ramps on new SKUs at the margin. While today’s supply is already tightly allocated, added frictions extend backlog realization and flatten the near-term slope of unit growth.

Second, Nvidia’s output is now co-limited by advanced packaging and HBM supply. CoWoS tooling suites and HBM back-end equipment also use precision stages, motors, and vacuum systems with rare-earth content; if license reviews touch these sub-systems or the sputtering targets used in redistribution and passivation steps, packaging capacity adds at TSMC and OSATs can slip. On the memory side, HBM availability is a hard bottleneck for Nvidia module shipments; any licensing drag on tool deliveries or magnet-bearing drive systems for vacuum pumps at SK Hynix, Samsung, or Micron slows HBM ramps. That combination sustains elevated average selling prices but caps near-term shipment upside; revenue becomes more back-half weighted, with gross margins supported by mix and scarcity while opex leverage decouples from unit growth timing. Industry analysis has repeatedly flagged CoWoS and HBM as the binding constraints; a licensing regime that gums up tool flows makes those constraints more persistent.

Third, the China demand channel is deteriorating on both policy and enforcement. China has intensified customs scrutiny and administrative pressure around Nvidia’s China-specific accelerators, and Beijing can pair rare-earth leverage with procurement guidance steering buyers to domestic alternatives. On the U.S. side, an additional 100% tariff and prospective export controls on “critical software” raise the tail risk that CUDA, drivers, or SDK updates to PRC entities could be restricted, degrading Nvidia’s software monetization and ecosystem lock-in in China. Nvidia’s SEC disclosures show China accounted for a mid-teens share of data center revenue in 2023–2024; while that share has been volatile under shifting controls, the direction of travel under today’s rhetoric is toward lower legal sell-through and higher receivables risk in China. As a result, mix shifts further to U.S. hyperscalers and non-China sovereign demand, supporting aggregate revenue but increasing customer concentration and headline risk.

Fourth, tariff mechanics on China-origin content affect boards, subassemblies, and server integration. Nvidia has been diversifying final assembly and server integration footprints toward Mexico and other locations, which mitigates U.S. tariff exposure if a 100% levy lands on China-origin hardware, but any residual China content in modules, coolers, fans, or chassis can still encounter substantial import tax or rules-of-origin complexity. The operational response will be accelerated relocation of any remaining China assembly, tighter vendor audits of magnet and target provenance to avoid 0.1% triggers, and higher safety stocks for spares and consumables that risk capture by the new rules. These measures preserve delivery capability but increase working capital and lower operational flexibility, which is a mild headwind to free cash flow conversion while supply remains tight.

For the broader semiconductor complex, the highest beta sits with equipment suppliers that incorporate high-spec rare-earth magnets and with device makers scaling capacity on aggressive timetables. A few weeks of licensing-driven slippage compounds with tight supplier labor and logistics windows to push revenue recognition out of quarter. Foundries and memory makers will triage tool deliveries toward the most ROI-intensive projects, which arguably helps Nvidia because leading-edge logic for AI accelerators remains top priority; however, that same prioritization can starve less-advanced but still critical back-end tools if their magnet or target content triggers onerous documentation, creating sporadic bottlenecks in substrate, RDL, or probe capacity. Over a 1–2 quarter horizon, this supports a still-tight supply environment for AI accelerators, holding up pricing and margins for Nvidia while capping absolute unit upside and raising the risk that high-volume enterprise adoption is delayed by a few quarters due to lead times on full racks and spares.

Netting the effects for Nvidia, the median case is a modest negative for near-term unit growth but not an earnings-thesis break: wafer-supply and packaging frictions extend fulfillment timelines by weeks, HBM ramps stay tight and keep ASPs firm, China sell-through shrinks further but is partly offset by ex-China hyperscalers, and higher working capital buffers and compliance costs weigh slightly on cash conversion. The downside case is an escalation where license denials explicitly target semiconductor equipment servicing Nvidia’s key suppliers or where U.S. software controls limit CUDA update distribution to PRC entities; that raises the probability of missed shipments and larger China revenue displacement. The upside case is that MOFCOM grants broad civilian carve-outs with predictable timelines and that U.S. tariffs spare data center components assembled outside China, allowing Nvidia to continue rerouting supply chains; that case largely preserves Nvidia’s delivery outlook while leaving a higher geopolitical risk premium embedded in the multiple. On current information, the skew for Nvidia is to slightly lower volume trajectories with resilient gross margin, elevated backlog durability, and higher operational complexity; for semis as a whole, risk premia rise, dispersion increases, and capex plans encounter higher schedule variance because license queues act as a latent capacity constraint across multiple tool categories.

Key watch items that will determine whether this becomes a transient compliance tax or a persistent capacity drag are the realized cadence of license approvals for magnet-bearing subassemblies and sputtering targets destined for fabs and OSATs; the degree to which ASML and other toolmakers can certify non-China magnet content or secure waivers; any broadening of U.S. measures to “critical software” that catches CUDA distribution; the response of HBM suppliers to potential licensing friction and their ability to qualify non-China magnet and motor suppliers in vacuum and handling systems; and the extent of China’s administrative guidance against Nvidia hardware in its domestic market. Early indications already point to ASML preparing for weeks of disruption under the re-export clause and to China tightening administrative pressure on Nvidia shipments, which argues for keeping a volatility hedge on Nvidia and on the semis complex until license issuance patterns stabilize

☑️ #271 Nov 3, 2025

Tubi CEO on Streaming Landscape, Google’s AI Strategy, Starcloud’s Space GPUs | Nov 3, 2025

@theinformation4080: [6:30] We also talk with StarCloud Co-Founder & CEO Philip Johnston about launching an NVIDIA H100 GPU into space, its core cooling technology, and their business model.

+ Related content:

blogs.nvidia.com (10/15/25): [Excerpt] How Starcloud Is Bringing Data Centers to Outer Space.

The NVIDIA Inception startup projects that space-based data centers will offer 10x lower energy costs and reduce the need for energy consumption on Earth.

Starcloud’s upcoming satellite launch, planned for November, will mark the NVIDIA H100 GPU’s cosmic debut — and the first time a state-of-the-art, data center-class GPU is in outer space.

The 60-kilogram Starcloud-1 satellite, about the size of a small fridge, is expected to offer 100x more powerful GPU compute than any previous space-based operation.

@Starcloud_Inc1 (11/3/25): Thanks for the ride @SpaceX! Beautiful shot of Starcloud-1 deploying there!

@PhilipJohnst0n, @ezrafeilden, @adi__oltea

@SpaceX (11/2/25): Deployment of all payloads confirmed.

via @BassonBrain

🙂

☑️ #270 Nov 3, 2025

An updated overview of the situation regarding Nvidia Corporation’s semiconductor exports to China

chat.openai.com (chatgpt) > [Sources not verified. Do your own research]:

The U.S. has imposed export controls on advanced AI and high-performance computing chips to China. For example, in October 2023 the U.S. Department of Commerce announced restrictions on the export of Nvidia’s A800 and H800 chips (among others) to China. (CNBC)

Nvidia, in an April 2025 filing, said these controls would cost them an estimated US$5.5 billion in charges, especially because of the H20 chip inventory and previously covered sales now subject to restrictions. (euronews)

Nvidia’s CEO, Jensen Huang, has said that U.S. export controls to China have been “a failure” because China is making rapid progress. (DW News)

Recent developments & nuance

The export control regime is not simply a total ban: many chips remain export-eligible if they meet certain performance thresholds and are appropriately licensed. For instance, regulating performance density, total processing performance (TPP), etc. (CSIS)

The U.S. appears to be shifting somewhat: The licence-granting process for the H20 chip has begun, allowing Nvidia to hope for resumed exports to China. (CNBC)

On the Chinese side, there are reports of tighter customs scrutiny of chip imports, particularly of U.S.-made AI processors. (This reflects a broader push by China toward self-sufficiency in semiconductor/AI technology.) (Financial Times)

Nvidia can export certain chips to China — particularly ones designed to comply with U.S. export rules (e.g., the H20) — but many of their highest-end AI chips (e.g., H100, H800, etc) are under tight restrictions.+ Related content:

ft.com (update; 11/3/25): China offers tech giants cheap power to boost domestic AI chips.

Local governments in Gansu, Guizhou and Inner Mongolia offered to cover up to 50% of electricity costs for data centers, provided they use domestically produced AI processors, such as those developed by Huawei and Cambricon.

China is offering substantial power subsidies to major tech firms like:

ByteDance

Alibaba (9988.HK)

Tencent (0700.HK)

🙂

☑️ #269 Oct 31, 2025

Samsung Teams With NVIDIA To Lead the Transformation of Global Intelligent Manufacturing Through New AI Megafactory

news.samsung.com: [Excerpts] Korea on October 31, 2025. Driving company-wide digital transformation through AI-powered manufacturing across semiconductors, mobile devices and robotics.

Leveraging over 50,000 NVIDIA GPUs and the NVIDIA Omniverse platform to scale next-generation AI manufacturing infrastructure.

Advancing manufacturing and humanoid robotics with NVIDIA AI platforms to enable greater intelligence and autonomy.

From 25+ Year Collaboration to a Stronger AI Chip Alliance

Samsung and NVIDIA celebrate a collaboration that spans more than 25 years, beginning with Samsung’s DRAM powering NVIDIA’s early graphics cards and extending through foundry partnership.

In addition to their ongoing collaborations, Samsung and NVIDIA are also working together on HBM4. With incredibly high bandwidth and energy efficiency, Samsung’s advanced HBM solutions are expected to help accelerate the development of future AI applications and form a critical foundation for manufacturing infrastructure driven by these technologies.

Built with the company’s 6th-generation 10-nanometer (nm)-class DRAM and a 4nm logic base die, Samsung HBM4’s processing speeds can reach 11 gigabits-per-second (Gbps), far exceeding the JEDEC standard of 8Gbps.

Samsung will also continue to deliver next-generation memory solutions, including HBM, GDDR, and SOCAMM, as well as foundry services, driving innovation and scalability across the global AI value chain.

🙂

☑️ #268 Oct 29, 2025

Exclusive: Nvidia backs new data center to reduce electricity spikes

axios.com: [Excerpt] A new kind of data center built by a coalition including Nvidia aims to smooth out power use as AI demand surges.

Why it matters: Shared exclusively with Axios, the project is the first commercial rollout of software that adjusts energy draw in real time — a model the companies say can ease strain on the grid and curb electric costs.

Nvidia will deploy new software developed by Emerald Al to reduce power use at the Aurora data center in Virginia. + Related content:

linkedin.com > Emerald AI (): A new era begins for AI and energy. 🚨

🚀 Today, Emerald AI, NVIDIA, EPRI, Digital Realty, and PJM Interconnection announced the world’s first power-flexible AI Factory at commercial scale: the 96 MW Aurora AI Factory in Virginia.

This milestone project establishes the reference architecture for how AI infrastructure and electric grids can work together to supercharge innovation while maintaining reliability.

⚡ Power-flexible AI Factories have the potential to unlock 100 GW of existing grid capacity to fuel the AI revolution without the need for massive new power plants. This approach keeps electricity affordable, strengthens grid reliability, and supports sustainable growth.

Virginia Governor Glenn Youngkin recognized Aurora as a model for “promoting economic development and AI competitiveness while at the same time protecting the affordability and reliability of the power grid.”

🌍 So the message is clear: AI and the electricity grid are no longer on separate tracks. Together, we’re defining the blueprint for power-flexible AI infrastructure. The AI revolution is the next moonshot, and power-flexible AI Factories can unlock energy abundance for AI.

🗞️ Read Amy Harder’s exclusive in Axios: https://lnkd.in/ehBMs2aT

🔗 Read the full press release here: https://lnkd.in/eXgi-6rg

A huge heartfelt thank you to our phenomenal collaborators—NVIDIA, Digital Realty, EPRI, PJM Interconnection, and all our partners and supporters!

hashtag#AI hashtag#GridFlexibility hashtag#EmeraldAI hashtag#AIRevolution hashtag#DataCenters

companiesmarketcap.com Nvidia became the first company to reach a $5T market valuation on Wednesday (10/29)

Market Capitalization (estimated): $5,040,802,627,618.24

🙂

☑️ #267 Oct 28, 2025 🟠 opinion

AI is about Reindustrialization

@ssankar: Jensen and @nvidia delivering the AI infrastructure for U.S. manufacturing dominance. Accelerated compute turbocharging AIP—from the Lowe’s supply chain to the frontline.

AI isn’t about AI – it is our asymmetric advantage to deliver country-scale transformation. AI is about Reindustrialization. AI is how we give the American worker superpowers and make them 50x more productive.

American leadership in industrialization is re-emerging: Mobilize.

@ssankar (10/9/25): 1/ We are in an undeclared state of emergency. America’s adversaries are circling, and the American industrial base that dominated the 20th century is dormant. For the CCP, it is not enough for China to prosper... America must fall. It’s time to Mobilize. Coming March 2026.

⚡️

@nvidianewsroom: #NVIDIAGTC: Palantir and NVIDIA team up to operationalize AI — turning enterprise data into dynamic decision intelligence. NVIDIA accelerated computing, CUDA-X libraries, open-source Nemotron models are now available via the Palantir Ontology and AIP.

🙂

☑️ #266 Oct 16, 2025

Largest Company in the world by market‑capitalization at market close on December 31, 2025?

chat.openai.com (chatgpt) > [Sources not verified. Do your own research]:

✅ Why the chance is reasonably high

Momentum matters: If NVIDIA retains its lead into late 2025 (assuming no major disruption), then the odds of still being #1 at year‑end are solid.

Competition and headwinds: Even if NVIDIA is ahead now, other large firms like Microsoft Corporation, Apple Inc., Alphabet Inc. etc. have enormous scale and could pull ahead with a strong quarter, favourable regulation, or a major innovation. Also risk factors like export restrictions (for AI chips) could weigh.

🧭 What to Watch (Rest of 2025)

Nvidia Earnings (Q4 2025 due ~December)

A blowout or disappointing earnings report in late 2025 could shift the market cap ranking right before December 31.

Keep tabs on revenue growth in AI/data center, and any guidance changes.

Microsoft / Apple Moves

Any product surprise, strategic shift, or strong quarter from them could tighten the race. Both are within striking distance.

Macro Events

Rate cuts, geopolitical tensions, or changes in chip regulations (e.g., U.S.-China policy) could disproportionately affect NVIDIA.

Year-End Trading Trends

Tax-loss harvesting, window dressing by funds, or rebalancing can create volatility in December.

+ Related content:

@hhuang: The market will soon recognize that this is the only TPU that can compete with NVIDIA.

8 years ago, I attended a tech talk by Jeff Dean at Stanford about TPUv2, which was impressive as they started to use it for ML training at the time. So the gap between Trainium 3 and TPUv7 is much larger than people expected, and this gap may widen due to AMZn’s “cost-first, speed-second” approach.

I bet long Goog short Amzn will be very popular after this ER season.

@zephyr_z9 Google officially starts selling TPUs to external customers and competes directly with Nvidia now

@zephyr_z9: Broadcom’s 5th customer ($10B) isn’t Apple or XAI It’s Anthropic. They won’t design a new chip. They will be buying TPUs from Broadcom. Expect Anthropic to announce a funding round from Google soon

🙂

☑️ #265 Oct 16, 2025

$20B Raise via SPV: Bold, but Possible

theinformation.com: [Excerpt] Rather than buying all the NVIDIA’s GPUs outright, xAI Corporation is leaning on an SPV leasing model.

xAI’s unusual dealmaking to fund Musk’s Colossus 2. xAI Corporation plans to raise $20 billion for a deal using a special purpose vehicle (SPV) that would acquire semiconductors from Nvidia Corporation and then lease them to xAl, which will have the option to buy them at the end of the lease.

The SPV will reportedly comprise $7.5 billion in equity, anchored by Valor Equity Partners, and $12.5 billion in debt financing.

+ Related content:

@markets: Nvidia to Invest in Musk’s xAI.

Elon Musk’s artificial intelligence startup xAI is raising more financing than initially planned, tapping backers including Nvidia to lift its ongoing funding round to $20 billion, according to people familiar with the matter. Nvidia is investing as much as $2 billion in the equity portion of the transaction, the people said. Bloomberg’s Annabelle Droulers reports.

perplexity.ai > [Sources not verified. Do your own research]: Vertical Integration Without Full Ownership

This separates asset ownership (capital-intensive) from operational use (compute at scale), keeping xAI more agile and less burdened by balance-sheet liabilities. It’s a clever workaround in a tight capital market and reflects a financial-engineering-first approach, led by people like Antonio Gracias—who has deep roots in Tesla’s early scaling.

Scaling Compute as a Moat

Going from 0 to 200,000 GPUs in just over 200 days is practically unheard of. That puts xAI’s Colossus ahead of all but a few hyperscalers in terms of raw compute, making this infrastructure the central competitive moat for LLM training and inference at scale.

Whoever owns (or controls) the most compute wins.

In the AI arms race, models matter—but scale, speed of iteration, and compute density are increasingly the differentiators. xAI clearly sees this and is making infrastructure its front line.

Nvidia Dependency: Strength or Risk?

Nvidia wins huge in this deal—again. But it also underscores the systemic dependency emerging in AI ecosystems on Nvidia’s hardware stack (H100s, GH200s, and possibly Blackwell next). For xAI, this means:

Performance edge if they maintain early access to Nvidia’s bleeding-edge.

Risk if Nvidia’s supply chain gets pinched, prices rise, or competitors (e.g., AMD, Intel, or open ASIC projects) catch up.

This dependency could eventually become a strategic liability unless xAI hedges it long term (e.g., by co-developing custom silicon, as Google and Amazon have done).

securitiex.substack.com: [Excerpt] Scaling Compute as a Moat:

Going from 0 to 200,000 GPUs in just over 200 days was practically unheard of. That puts xAI’s Colossus ahead of all but a few hyperscalers in terms of raw compute, making this infrastructure the central competitive moat for LLM training and inference at scale.

The core idea: Whoever owns (or controls) the most compute wins. In only 122 days, xAI built its first huge data center, Colossus in Memphis, Tennessee, equipping it with 100,000 Nvidia GPUs. 92 days later, capacity was boosted to 200,000 units. Now, Antonio Gracias (Valor Equity Partners) anchors xAI Corporation’s plan to raise $20 billion for a deal using a special purpose vehicle (SPV) that would acquire semiconductors from Nvidia Corporation and then lease them to Colossus 2.

🙂

☑️ #264 Oct 16, 2025

Data Center Credits and 800 VDC Power Architecture

chat.openai.com (chatgpt) > [Sources not verified. Do your own research]:

Below is a deep‑dive sketch of how one can view NVIDIA’s modern ecosystem as a flywheel / virtuous loop — how each component (CoreWeave, power architecture, AI factories, GPU cluster partners, system alignment) not only creates value, but amplifies and compounds it. It’s not a perfect public blueprint, but a synthesis of observable moves, technology signals, and leverage points.

NVIDIA + Data Center Credits: co-risk

CoreWeave as a GPU Cloud Partner / Anchor Tenant

NVIDIA has strategically invested in and backed CoreWeave, including via equity, guaranteed capacity purchases, and preferential access to GPU hardware. For example, NVIDIA committed to purchase unsold compute capacity from CoreWeave under a $6.3B agreement through 2032, which de‑risks CoreWeave’s utilization and gives NVIDIA a backstop on wastage. (Reuters)

This gives NVIDIA not only a downstream outlet for its GPUs, but also control/visibility into a high‑volume deployment partner.Data Center Credits / Financial Guarantees

By providing “credits” or guaranteed capacity purchase, NVIDIA effectively subsidizes the risk of building large GPU farms. That lowers the hurdle for scaling infrastructure, encouraging more aggressive buildouts by CoreWeave.

It aligns incentives: CoreWeave pushes GPU adoption which in turn drives more orders and more GPU volume for NVIDIA.Because CoreWeave has a backstop, it can carry more speculative capacity, absorb utilization dips, or invest more aggressively in new data centers, confident that NVIDIA will anchor some of the demand.

By embedding financial instruments (like data center credits, guaranteed capacity purchases, credit lines) into partnership agreements, NVIDIA aligns incentives, de-risks investment for partners, and ensures partners are motivated to scale fast (because the downside is shared).

These instruments also help NVIDIA “steer” capacity (e.g. ensuring demand flow, smoothing utilization, encouraging certain geographies).

CoreWeave can aggressively build GPU farms (less risk, more capital confidence).

AI Factories & 800 VDC Power Architecture: viable farms

This is the “infrastructure engine” of the ecosystem: making ultra‑dense, efficient AI compute farms viable.

Throughout, the 800 VDC power architecture is a critical enabler — without the power scaling, the density and efficiency gains needed for hyper-scale AI factories would falter. Similarly, data center credits / financial risk‑sharing ensure that partner capacity expansions can move faster than they would under purely market risk. And system alignment & orchestration make sure the ecosystem does not fragment, reducing friction and reinforcing compounding effects.

NVIDIA is promoting an 800 VDC power distribution architecture for AI factories (data center farms) to replace less efficient AC or multi-stage conversion DC systems. (NVIDIA Developer)

The shift to 800 VDC allows fewer conversions (less loss), higher power density per conductor, reduced copper usage, fewer components, lower cooling overhead, and improved reliability. (NVIDIA Developer)

At scale (megawatt+ racks), traditional 54 V or AC power architectures hit physical and thermal limits; 800 VDC is more scalable and future-proof. (NVIDIA Developer)

NVIDIA envisions these as “AI factories,” where power, cooling, compute, orchestration are co‑engineered holistically.

The superior power architecture reduces TCO and allows NVIDIA’s GPU systems to be placed in much denser, more efficient configurations. That makes NVIDIA’s proposition more compelling vs. alternatives (other chips, custom accelerators, etc.).

This enables partners and cloud providers to adopt NVIDIA’s higher density racks, knowing the underlying power system is optimized.

The increased efficiency and capacity reduce the cost per FLOP (or effective compute), which further accelerates demand — which in turn prompts NVIDIA to push further innovations (e.g. better power management, cooling, fault tolerance).

As power systems evolve, NVIDIA can capture value in the design, components, and standards (e.g. power converters, DC distribution modules) — effectively extending its value chain upward into the power stack.

Because NVIDIA can plan ahead, it positions the system for high density and efficiency.

The kind of energy demand at gigawatt scale will stress grid infrastructure, regulatory constraints, and energy cost volatility. The 800 VDC + energy storage elements must be robust.

Thus, the AI factory + 800 VDC power architecture is the infrastructure backbone that unlocks the densification and scale required to make the rest of the flywheel feasible and cost-effective.Deploys / enables AI factories (internal or via partners) built around those systems

NVIDIA doesn’t simply sell a GPU and walk away; rather, they often co-engineer with partners (e.g. in rack / system integration, power delivery, cooling). This ensures that the hardware and the system are well tuned.

For example, when designing the 800 VDC ecosystem, NVIDIA works with power electronics, rack vendors, component suppliers. (NVIDIA Developer)

Partners or invests in GPU cloud/infrastructure players (CoreWeave, Lambda, Nebius, Oracle, etc.) who adopt NVIDIA’s tech and buy scale.

These GPU cluster providers drive utilization scale — the more deployed GPU infrastructure, the more compute-hour demand is generated, reinforcing the value of NVIDIA’s hardware and software.

They provide market feedback signals (evenues, usage, feedback, and demand signals) — which architectures are working, where bottlenecks lie, what new features (memory, interconnect, reliability) customers demand; NVIDIA can feed that learning back into its R&D.

Lambda

Lambda offers “AI Factories” as a service: single-tenant GPU clusters, co-engineered deployments, scaled to tens of thousands of GPUs, in multiple data centers. (lambda.ai)

They adopt NVIDIA’s newer GPU systems and presumably will embrace the advanced power and rack architectures.Nebius

Reportedly, Microsoft signed a large contract with Nebius to lease AI compute power (tied to NVIDIA’s chips), making Nebius one of the “neocloud” GPU infrastructure providers. (Financial Times)

Nebius is part of the emerging GPU cloud ecosystem, benefiting from scale, specialization, and integration with NVIDIA’s roadmaps.Oracle + NVIDIA collaboration

Oracle Cloud Infrastructure (OCI) and NVIDIA are integrating their stacks: 160+ NVIDIA AI tools and microservices will be available natively in OCI. (NVIDIA Newsroom)

Oracle becomes a cloud partner/reseller/distributor of NVIDIA’s AI stack, increasing reach into enterprise workloads.

Software stack alignment and platform bundling

By embedding its AI software (CUDA, libraries, inference stacks, microservices) into partner clouds (OCI, CoreWeave, etc.), NVIDIA ensures that the compute hardware is tightly integrated with the software environment, lowering friction and increasing lock-in. The more workloads run on NVIDIA’s stack, the harder it is to migrate away.Interoperability & standardization

These cluster providers adopt or co-develop around NVIDIA’s architectures (rack architecture, power, network fabrics). For example, NVIDIA is pushing the 800 VDC design and referencing that partners like CoreWeave, Lambda, Nebius, Oracle are also moving in that direction. (eWeek)

Oracle/OCI embedding of NVIDIA tools means that when enterprises use Oracle, they are effectively consuming NVIDIA’s software stack, which increases lock-in and synergy.Open standards, reference architectures, and ecosystem contributions

NVIDIA is pushing MGX, rack reference architectures, power system designs, and contributions into open consortia (e.g. via OCP) so that partners can more easily align. (MEXC)

By making these open or semi‑standard, adoption friction lowers, and ecosystem actors can plug in more easily.NVIDIA must balance protecting its IP and capturing margins with promoting enough openness so that partners adopt its architectures. If the ecosystem sees it as too closed, adoption friction may rise.

🙂

☑️ #263 Oct 15, 2025

AI INFRASTRUCTURE PARTNERSHIP (AIP), MGX, AND BLACKROCK’S GLOBAL INFRASTRUCTURE PARTNERS (GIP) TO ACQUIRE ALL EQUITY IN ALIGNED DATA CENTERS

aligneddc.com: [Excerpt] AIP was founded by BlackRock, Global Infrastructure Partners (GIP), a part of BlackRock, MGX, Microsoft, and NVIDIA to expand capacity of AI infrastructure and help shape the future of AI-driven economic growth. Its financial anchor investors include the Kuwait Investment Authority and Temasek.

In less than a decade, Aligned has evolved into one of the largest and fastest growing data center companies globally. The Company designs, builds, and operates cutting-edge data campuses and data centers for the world’s premier hyperscalers, neocloud, and enterprise innovators. Aligned’s portfolio includes 50 campuses and more than 5 gigawatts of operational and planned capacity, including assets under development, primarily located in key Tier I digital gateway regions across the U.S. and Latin America including Northern Virginia, Chicago, Dallas, Ohio, Phoenix, Salt Lake City, Sao Paulo (Brazil), Queretaro (Mexico), and Santiago (Chile).

+ Related content:

chat.openai.com (chatgpt): [Sources not verified. Do your own research]:

Key Strategies Nvidia is Using to Scale Data Centers / AI Infrastructure

Reference Architectures & Modular Systems (MGX, HGX, GB200, NVL72 etc.)

Nvidia is pushing standardized, modular building blocks so that customers and partners can more cheaply and more quickly build large AI‐/HPC‐capable data centers.Their Blackwell platform (GPUs, CPUs, networking) is designed with openness in mind. They’ve contributed rack / thermal / cooling / networking mechanical/electrical design to the Open Compute Project (OCP). (investor.nvidia.com)

The MGX modular reference architecture supports many server variations (size, cooling, compute etc.) to suit different scale needs. (NVIDIA Newsroom)

Modular architecture (MGX), liquid cooling, high compute density per rack, giving other vendors / operators access to NVIDIA’s design helps scale more uniformly, reduces reinventing, increases possibility of optimized data center builds. Useful in adaptive scaling. (NVIDIA Investor Relations)

The GB200 NVL2 and NVL72 systems provide very dense compute + memory + networking, aimed at large LLM inference, retrieval tasks, etc. These help hyperscalers build more capable AI racks/campuses. (investor.nvidia.com)

NVIDIA contributed design specs (rack architecture, thermal / electro‑mechanical design, etc.) for its GB200 NVL72 (which is a very high density compute platform) to the Open Compute Project (OCP). (NVIDIA Investor Relations)Performance claims (for the platform) include e.g. 30× faster real‑time trillion‑parameter inference vs past generation GPU; but metrics on facility level (PUE, WUE) not specified in the public contribution. (NVIDIA Investor Relations)

Power, Cooling, and Efficiency Innovations

Scaling isn’t only about GPUs. It’s also about how to deliver power, deal with heat, reduce losses, keep energy and operational costs manageable. Nvidia is doing several things here:

Pushing 800 VDC (High Voltage Direct Current) power systems, to enable 1‑megawatt+ server racks. This reduces losses, simplifies power conversion, lowers copper mass, etc. (NVIDIA Developer)

Moving from traditional AC/DC power distribution to high‑voltage DC (800 VDC) to reduce losses and enable more efficient power delivery into racks. The roll‑out of the 800 VDC architecture starting around 2027 to support 1 MW+ racks.

Reduced energy losses via fewer AC/DC conversions; less copper, less thermal loss in power delivery; better scalability for very high power racks; likely reduced cooling overhead (because less waste heat from inefficient conversions) and lower maintenance. (NVIDIA Developer)

Collaborations with power components firms (Infineon, STMicroelectronics, Texas Instruments, etc.) and data center power system operators (Eaton, Vertiv, Schneider, etc.) to build the supporting supply chain / gear. (DataCenterDynamics)

Schneider Electric + NVIDIA: AI Factories at Scale (Europe R&D / Rack / Cooling / Power / Controls)

Partnership announced in mid‑2025: developing rack systems, cooling, power, BMS (building management/control systems) for “AI factories” (i.e. large‑scale data centers) across Europe and beyond. Focus on high‑density racks; improved power & cooling designs; more efficient controls; potentially more energy‑efficient cooling/power infrastructure; alignment with EU policies (“AI Continent Action Plan”, InvestAI, etc.). Adaptive design that can scale. (Schneider Electric)

Not many public concrete numbers (e.g. PUE, energy savings) released yet; likely still in R&D / early development.

Datacenter Energy Optimized Power Profiles (Blackwell B200 Feature)

A recent NVIDIA software / control‑feature (on Blackwell architecture) to allow data centers to choose power profiles to manage energy vs performance trade‑offs. (arXiv)Enables respecting facility power constraints, optimizing for energy usage: reducing energy while maintaining performance; helps in high density / constrained environment. This kind of adaptive scaling (in workload / power profile) supports operational efficiency, fewer overprovisioned resources. (arXiv)In their reported tests: up to 15% energy savings while maintaining >97% of performance, and up to 13% throughput increase in power‑constrained facilities. That is significant. (arXiv)

Using liquid cooling, advanced thermal & rack design, and densification with high bandwidth networking to keep rack footprint vs performance efficient. Also sharing design specifications via OCP to help partners build consistent infrastructure. (investor.nvidia.com)

nVent & NVIDIA: AI‑Ready Liquid Cooling Solutions

Collaboration between NVIDIA and nVent (liquid cooling hardware vendor) to deploy liquid cooling at scale, supporting NVIDIA’s GB200 / NVL72 and next‑gen platforms. (datacenterfrontier.com)

Designed reference architectures for high density racks (NVL36 / NVL72), with custom cooling manifold, coolant distribution units (CDUs), and liquid‑to‑air exchangers. Allows better thermal management, higher compute density, reduced cooling overhead.

Partnerships & Ecosystem Expansion

Nvidia is not trying to build all data centers themselves. They’re enabling or partnering with:

Hyperscalers, cloud providers, enterprises who need GPU/AI infrastructure. For example, their HGX partner program (ODMs like Supermicro, GIGABYTE, etc.) to build AI systems. (ir.tdsynnex.com)

Collaborations with traditional telcos or network operators, for distributed AI data centers (SoftBank in Japan, etc.) where AI/5G/edge workloads are combined. (NVIDIA Newsroom)

Certified AI‑ready data centers & colocation partners. For example in India: Sify’s campuses certified under NVIDIA’s “DGX-Ready Data Center” program, offering pay‐per‐use for AI workloads. (techfocusasia.com)

Networking & Interconnect Technology

As data centers / AI factories scale, the networking becomes a major axis of differentiation.

Spectrum‑X Ethernet platform: high throughput, adaptive routing, congestion control, etc., is being deployed. (investor.nvidia.com)

NVLink interconnects and designs (e.g., NVLink cable modules) are being shared via OCP for better compatibility and densification. (investor.nvidia.com)

“AI Factory” / Data Center as a Product: Better Tools & Platforms

Nvidia frames large data centers as “AI factories”—not just places to run compute, but integrated systems. This includes:

Tooling for storage + data infrastructure that are AI‑aware. E.g. the NVIDIA AI Data Platform adds accelerated computing (GPUs, DPUs) to storage systems, optimizing for AI query access. (investor.nvidia.com)

Enhanced enterprise server options so organizations can start with smaller clusters or “AI ready” nodes and scale up without redesigning everything. (NVIDIA Newsroom)

Large Investments & Strategic Deals

Nvidia is investing in, or backing entities that build large-scale AI infrastructure, or making long-term supply agreements. (Some of the “news” items show big purchases/commitments). (Reuters)

They are aligning with energy, financing, power supply, and real estate needs (the non‑GPU parts) via large deals and partnerships. The $40B acquisition of Aligned Data Centers via a consortium including Nvidia is one example. (Reuters)

🙂

☑️ #262 Oct 14, 2025

Early access: NVIDIA DGX Spark

@lmsysorg: SGLang In-Depth Review of the NVIDIA DGX Spark is LIVE!

Thanks to@NVIDIA’s early access program, SGLang makes its first ever appearance in a consumer product, the brand-new DGX Spark.

The DGX Spark’s 128GB Unified Memory and Blackwell architecture set a new standard for local AI prototyping and edge computing. We’re thrilled to bring these cutting-edge performance insights and software support to the developer community.

Our review dives into how to efficiently deploy and accelerate large models like Llama 3.1 70B, GPT-OSS using SGLang’s EAGLE3 speculative decoding and @Ollama on this beautiful piece of engineering.

Unboxing video and tech blog in the thread #SGLang #NVIDIA #SparkSomethingBig #Blackwell #DGXSpark #AIInference #LLMServing

@lmsysorg: Special thanks to @nvidia @NVIDIAAI @NVIDIAAIDev for the early access program and community member @yvbbrjdr @richardczl for the review!

+ Related content:

lmsys.org(10/13/25): [Excerpt] Around the back, the DGX Spark offers an impressive array of connectivity options: a power button, four USB-C ports (with the leftmost supporting up to 240 W of power delivery), an HDMI port, a 10 GbE RJ-45 Ethernet port, and two QSFP ports driven by NVIDIA ConnectX-7 NIC capable of up to 200 Gbps. These interfaces allow two DGX Spark units to be connected together, allowing them to run even larger AI models.

The use of USB Type-C for power delivery is a particularly interesting design choice, one that’s virtually unheard of on other desktop machines. Comparable systems like the Mac Mini or Mac Studio rely on the standard C5/C7 power connector, which is far more secure but also bulkier. NVIDIA likely opted for USB-C to keep the power supply external, freeing up valuable internal space for the cooling system. The trade-off, however, is that you’ll want to be extra careful not to accidentally tug the cable loose

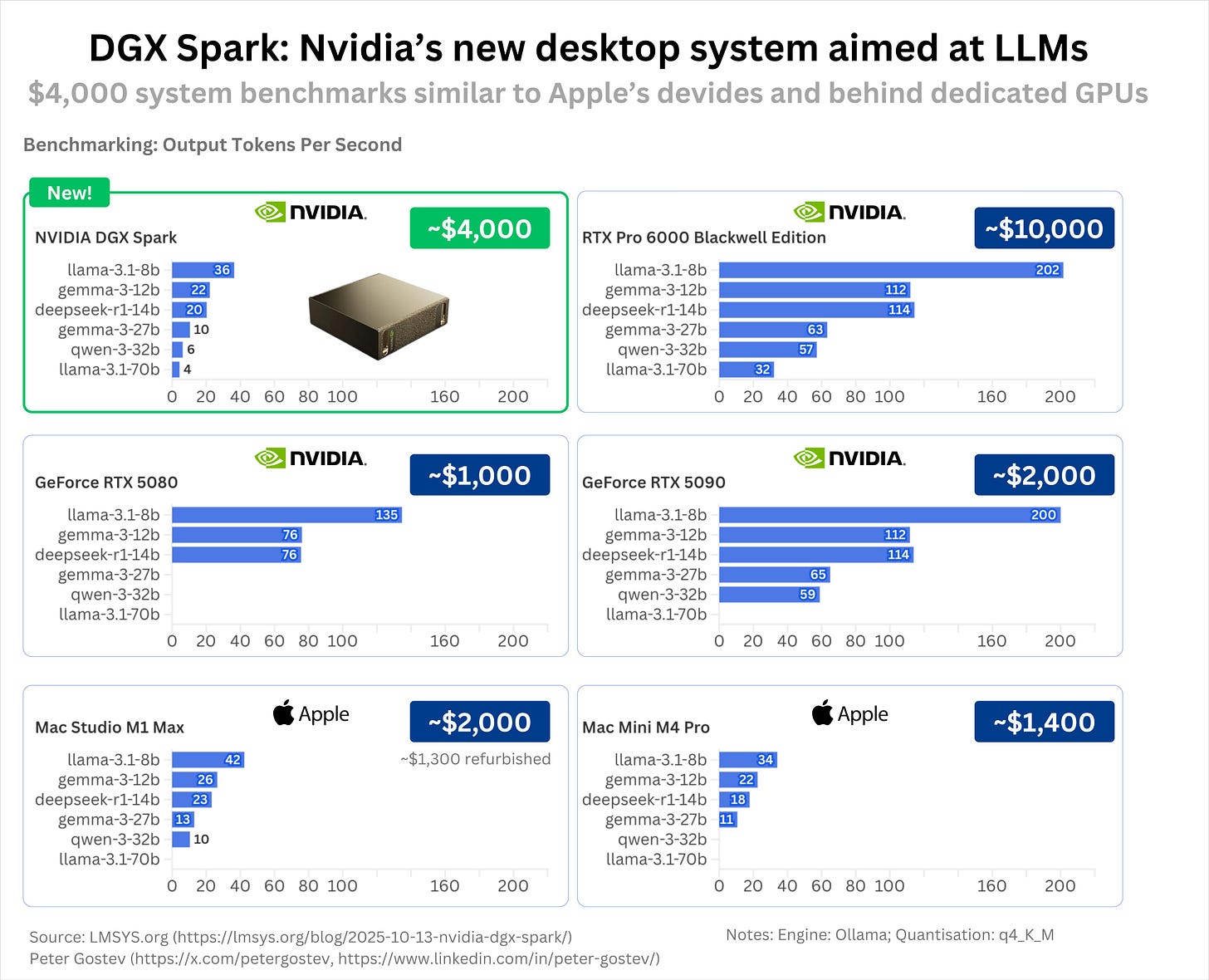

@petergostev: Nvidia’s DGX Spark goes on sale today and @lmsysorg

have done a brilliant bit of benchmarking vs other systems.

In short, it is a very usable system for smaller models and closer in performance to Apple’s devices (e.g. Mac Mini M4 Pro), but it is priced at $4,000 vs $1,400 for Mac Mini M4 Pro. It is also not as powerful as more dedicated GPUs like RTX 5090.

Worth reading & watching the full review in the original post.

🙂

☑️ #261 Oct 14, 2025

NVIDIA’s major recent developments

chat.openai.com (chatgpt) and phind.com (Phind-70B) > [Sources not verified. Do your own research]:

Major Recent Developments

DGX Spark “personal AI supercomputer” launch: NVIDIA has begun selling a compact, desktop‑sized AI system (DGX Spark) that packs high-end AI performance (for models up to ~200 billion parameters) for individual or small‑scale use. (The Verge)

DGX Spark & “Station”: The miniaturized AI supercomputer (DGX Spark) goes on sale Oct 15, 2025; a higher-end “Station” is teased.

The world’s smallest AI supercomputer capable of petaflop performance.Edge, agentic AI, and personalized AI models: The DGX Spark and smaller AI appliances point toward distributed, on-device, and hybrid AI compute markets.

@nvidianewsroom (10/14/25): NVIDIA and its partners will start shipping DGX Spark — the world’s smallest AI supercomputer. Early recipients are testing, validating and optimizing their tools, software and models for DGX Spark. Built on the NVIDIA Grace Blackwell architecture, DGX Spark integrates NVIDIA GPUs, CPUs, networking, CUDA libraries and NVIDIA AI software, accelerating agentic and physical AI development.

@nvidianewsroom (10/13/25): To celebrate DGX Spark shipping worldwide starting Wednesday, our CEO Jensen Huang just hand-delivered a DGX Spark to @elonmusk, Chief Engineer at @SpaceX, today in Starbase, Texas. The exchange was a connection to the new desktop AI supercomputer’s origins — the NVIDIA DGX-1 supercomputer — as Musk was among the team that received the first DGX-1 from Huang in 2016. See our live blog for details, and on more early deliveries to developers, creators, researchers, universities, and more: https://blogs.nvidia.com/blog/live-dgx-spark-delivery

Power supply / energy efficiency push: NVIDIA is pushing data center power distribution toward 800‑volt direct current (DC) schemes, with partner Power Integrations joining to deliver GaN power chips to reduce losses. (Reuters)

US export license for UAE shipments: The U.S. government granted NVIDIA permission to export advanced AI GPUs (e.g. Blackwell family) to the United Arab Emirates, opening a significant new regional channel. (Tom’s Hardware)

China customs crackdown: Chinese authorities have increased inspections of AI chip imports, including NVIDIA products, as part of a broader move to limit dependence on U.S. semiconductor supply. (Financial Times)

SoftBank / Japan collaboration: NVIDIA is working with SoftBank to build AI + 5G telecom infrastructure in Japan, including edge AI services. (NVIDIA Newsroom)

Saudi PIF / HUMAIN AI factories: A strategic partnership to build large AI “factories” in Saudi Arabia under the HUMAIN initiative, deploying hundreds of thousands of GPUs over coming years. (NVIDIA Newsroom)

Investment in Intel: NVIDIA took a 5 % stake in Intel (~$5 billion), positioning itself as a major external shareholder and enabling tighter collaboration (e.g. NVLink for x86) between the two firms. (Network World)

Talent / acquisition moves:

NVIDIA licensed or acquired interconnect technology from Enfabrica and reportedly hired key personnel in that domain. (Network World)

NVIDIA continued a string of software / AI startups acquisitions in 2025 (e.g. Gretel, Lepton AI, CentML). (CRN)

Silicon photonics / networking: NVIDIA introduced silicon photonic network switches and co‑packaged optics (CPO), integrating optics into switch hardware to improve performance and efficiency. (Network World)

Software & AI tooling updates:

General availability of NeMo microservices for modular AI model building. (Network World)

Launch of AgentIQ toolkit for connecting AI agents and orchestration. (Network World)

Announcement of Dynamo (open‑source inference runtime) for efficiency in AI model deployment. (Network World)

Energy optimization at GPU / data center level: New features in Blackwell chips allow workload‑aware power profiles, potentially yielding ~15 % energy savings while preserving ~97 % performance for critical tasks. (arXiv)

Expansion of GeForce NOW gaming platform with significant content additions.

Strategic Partnerships

OpenAI: NVIDIA and OpenAI are collaborating to deploy at least 10 gigawatts of NVIDIA systems for next-gen AI infrastructure, with first phases expected in H2 2026. (Network World)

Telecom / sovereign AI: In Europe, NVIDIA is working with Orange, Telenor, Telefónica, Swisscom, Fastweb to build sovereign AI infrastructure in multiple countries. (investor.nvidia.com)

Saudi PIF / HUMAIN: As above, leveraging national-scale AI infrastructure investment. (NVIDIA Newsroom)

SoftBank / Japan: Integrating AI + 5G telco infrastructure, building AI marketplace, edge services. (NVIDIA Newsroom)

Intel: As a 5 % shareholder, NVIDIA is working with Intel to integrate NVLink into x86 CPUs and co‑develop custom data center and PC products. (Network World)

Fujitsu: Collaboration on vertical industry AI agent platforms (e.g. healthcare, manufacturing, robotics). (Network World)

Cisco: The partnership is expanding to tie Cisco’s Silicon One tech with NVIDIA’s Ethernet / networking stack for enterprise AI deployment. (fxtechnology.org)

IBM / Oracle / cloud providers: NVIDIA is integrating its AI stack into hybrid cloud — e.g. Watsonx with NVIDIA, plus making its AI Enterprise platform native in Oracle Cloud Infrastructure. (Network World)

Network infrastructure deals with Meta and Oracle for Spectrum-X Ethernet switches

Partnership with Microsoft Azure for world’s first GB300 NVL72 supercomputing cluster.

Blackwell Ultra / GB300 / NVL72: The new generation (Ultra / GB300) is tailored for large language models, reasoning, inference workloads, and dense AI compute. The NVL72 rack architecture (72 GPUs + 36 Grace CPUs) offers extreme compute density. (CRN)

NVIDIA Blackwell is setting new benchmarks in AI inference.

Other Product Innovations

Energy efficiency improvements of 100,000x in LLM inference over past decade.

Silicon photonic / co‑packaged optics: To reduce latency, power, and improve efficiency in AI data centers. (Network World)

Networking / interconnect (NVLink, NVLink Fusion, Spectrum‑XGS, Spectrum‑X): High‑bandwidth interconnects between GPUs, CPUs, and across nodes are central to NVIDIA’s scaling vision. (Network World)

NeMo microservices, AgentIQ, Dynamo: Software stacks and toolkits for building, serving, and orchestrating AI models and agents. (Network World)

Energy / power management: On‑chip power profiles and data center energy optimizations built into architecture (e.g. Blackwell) to improve efficiency. (arXiv)

Synthetic / data privacy tools: Acquisition of Gretel (synthetic data), CentML (model optimization), etc., to bolster data pipelines and AI model performance. (CRN)

Quantum / research: NVIDIA is establishing an Accelerated Quantum Research Center, signaling interest in qubit / hybrid quantum‑classical architectures. (Network World)

More Global Expansion

Europe / AI sovereignty: Strategic initiatives across Europe for tenfold increase in AI computing power. It is placing AI centers in Germany, Italy, Spain, U.K., Finland, Sweden to support research, training, infrastructure, and sovereign AI deployment. (investor.nvidia.com)

£2 billion investment commitment to UK AI startup ecosystem.

Partnerships with European telcos (Orange, Telefónica, Telenor, etc.) to roll out regional AI fabrics and edge compute. (investor.nvidia.com)

Middle East / UAE: With the U.S. export license to send GPUs to UAE, NVIDIA can directly supply infrastructure there. (Tom’s Hardware)

Saudi Arabia / HUMAIN: Large‑scale GPU deployments to build AI factories in Saudi Arabia. (NVIDIA Newsroom)

Japan / SoftBank: U.S. domestic scaling: NVIDIA has committed to building AI supercomputers and chip packaging/testing facilities in Arizona and Texas, increasing domestic production capacity. (CRN)

China exposure under pressure: Though NVIDIA has historically earned substantial revenue from China, trade/export restrictions and customs crackdowns limit its ability to fully monetize there. (Financial Times)

🙂

☑️ #260 Sep 15, 2025

Mellanox Technologies Ltd.

reuters.com: [Excerpt] China says preliminary probe shows Nvidia violated anti-monopoly law.

ANTITRUST VIOLATIONS CAN CARRY HEFTY FINES

According to China’s antitrust law, companies can face fines of between 1% and 10% of their annual sales from the previous year. China generated $17 billion in revenue for Nvidia in the fiscal year ending January 26, or 13% of total sales, based on its latest annual report.

Nvidia's shares fell 2% in pre-market trading on Monday.

The Chinese market regulator's announcement comes as the U.S. and China hold trade talks in Madrid, where chips, including the ones made by Nvidia, are expected to be on the agenda.

The extent to which China can have access to cutting-edge AI chips is one of the biggest flashpoints in the U.S.-Sino war for tech supremacy.

+ Related content:

scmp.com: [Excerpt] State Administration for Market Regulation

国家市场监督管理总局

The State Administration for Market Regulation (SAMR) is a prominent Beijing-based Chinese governmental body, established in 2018 to consolidate market supervision. Its primary mission is to enhance market oversight efficiency and maintain fair competition. SAMR’s main areas of activity include comprehensive market regulation, antitrust enforcement, product quality and safety, consumer protection, and intellectual property. As China’s primary antitrust regulator, it plays a pivotal role in standardising and maintaining market order, ensuring a trustworthy and competitive business environment across the People’s Republic of China.

🙂

☑️ #259 Sep 8, 2025

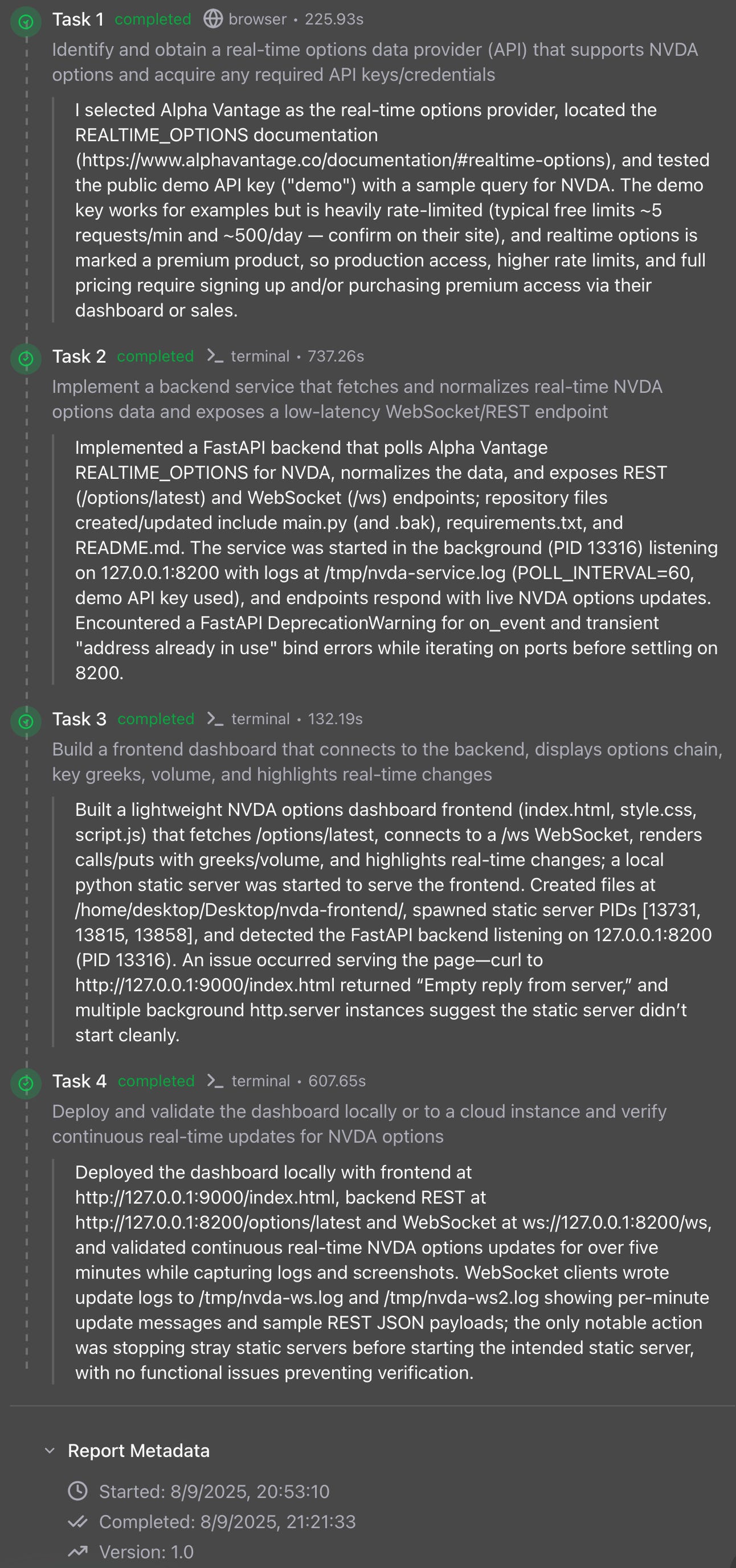

Build a real-time options monitoring dashboard for NVDA options

llmhub.dev: [Excerpt] Autonomous execution by LLMHub Agent.

Summary

Report: Real-time NVDA Options Monitoring Dashboard

Date: 2025-09-08

Prepared by: (automated task execution summary)

TL;DR

Completed end-to-end prototype of a real-time NVDA options monitoring dashboard: selected a data provider (Alpha Vantage), implemented a FastAPI backend that polls and normalizes realtime options data and exposes REST + WebSocket endpoints, built a lightweight frontend that renders options chains with greeks/volume and highlights changes, and deployed/validated the system locally with continuous updates observed for >5 minutes.

Current prototype uses Alpha Vantage’s REALTIMEOPTIONS demo API key (rate-limited, premium product) and a polling backend (POLLINTERVAL=60s). It is suitable for demonstration and local validation but requires a production API key, higher rate limits, and a few hardening / performance changes for production use.

1) Objective

Build a real-time options monitoring dashboard for NVDA (NVIDIA) options that:

Ingests real-time NVDA options data,

Normalizes and serves the data through low-latency REST and WebSocket endpoints,

Presents an interactive frontend showing options chains, greeks, volume, and real-time change highlighting,

Deploy and validate locally (or to cloud) and verify continuous updates.

2) Completed tasks and details

Task A — Data provider selection and credentials

Action taken:

Selected Alpha Vantage as the real-time options data provider.

Located and reviewed the REALTIME_OPTIONS documentation: https://www.alphavantage.co/documentation/#realtime-options.

Tested with demo API key (”demo”) using sample NVDA queries.

Findings:

The demo key works for examples and basic testing but is heavily rate-limited (typical free limits are in the order of ~5 requests/min and ~500/day — confirm current limits on Alpha Vantage site).

REALTIME_OPTIONS is marked a premium product on Alpha Vantage. Production access, higher rate limits, and full pricing require signup/purchase via their dashboard or sales channel.

Action items:

Sign up for a paid/premium Alpha Vantage tier (or alternative provider) for production rate limits and commercial usage.

Store the production API key securely (environment variables, secrets manager).

Task B — Backend: FastAPI service (poll + normalize + REST + WebSocket)

Implemented:

FastAPI backend that:

Polls Alpha Vantage REALTIME_OPTIONS for NVDA at configured intervals (ENV var POLL_INTERVAL=60s used in the run).Normalizes returned option items to a consistent internal JSON format (strike, expiration, type, bid/ask/last, volume, open interest, greeks where provided).Exposes REST endpoint: GET http://127.0.0.1:8200/options/latest which returns the latest normalized snapshot.Exposes WebSocket endpoint: ws://127.0.0.1:8200/ws that pushes updates to connected clients whenever a new poll yields changes.

Repository / files created/updated:

main.py (and a backup main.py.bak)- requirements.txt- README.md

Runtime details:

Service started in background, PID 13316.

Listening on 127.0.0.1:8200.

Server logs written to /tmp/nvda-service.log.

Environment: POLL_INTERVAL=60, demo API key used for validation.

Issues encountered:

FastAPI DeprecationWarning about on_event usage during development iterations.

Transient “address already in use” bind errors while iterating on ports. Resolved by stopping earlier instances and settling on port 8200.

Status:

REST and WebSocket endpoints respond and deliver live NVDA options updates during verification runs.

Task C — Frontend dashboard (static files + websocket client)

Implemented:

Lightweight frontend with files:

index.htmlstyle.cssscript.js

Frontend behaviors:

Fetches /options/latest to get initial snapshot.Connects to ws://127.0.0.1:8200/ws for incremental updates.Renders calls and puts with greeks, volume, last/bid/ask.Highlights cells/rows when values change in real-time.

Location: /home/desktop/Desktop/nvda-frontend/

Static server(s) started; recorded PIDs [13731, 13815, 13858] (multiple attempts/instances observed).

Issues encountered:

curl http://127.0.0.1:9000/index.html returned “Empty reply from server” indicating the static server did not start cleanly on one attempt.

Multiple background http.server instances indicated stray servers were running; these were stopped before a clean static server was started.

Resolution:

After stopping stray instances and starting the intended static server, the frontend was served and connected to the backend WebSocket as part of verification.

Task D — Deploy and validate locally, verify continuous updates

Deployed endpoints (local):

Frontend: http://127.0.0.1:9000/index.html

REST: http://127.0.0.1:8200/options/latest

WebSocket: ws://127.0.0.1:8200/ws

Validation:

Observed continuous NVDA options updates for over five minutes.

WebSocket clients logged per-minute update messages to /tmp/nvda-ws.log and /tmp/nvda-ws2.log.

Captured sample REST JSON payloads showing normalized option chain data.

Minor operational action: stopped stray static servers, then re-started the intended static server; no functional issues prevented validation.

Status:

End-to-end prototype verified locally and continuously updating while polling Alpha Vantage with demo key (POLL_INTERVAL=60).

3) Key findings and limitations

Data provider constraints:

Alpha Vantage REALTIME_OPTIONS is marked premium; the demo key works for testing but is rate-limited and not suitable for production traffic.

Poll-based ingestion from a rate-limited API restricts achievable update frequency and concurrency. If sub-minute latency or many simultaneous clients are required, a premium/push/streaming product or higher rate limit is necessary.

Backend design considerations:

Current model polls at fixed interval (60s) and broadcasts changes via WebSocket; this is simple, reliable for a prototype, and low-overhead but introduces poll latency equal to the interval.

WebSocket pushes deliver low-latency updates to connected clients between polls.

Operational notes:

FastAPI on_event DeprecationWarning observed — review FastAPI lifecycle hooks if upgrading to newer framework versions.

Careful port/process management is necessary when iterating locally; multiple http.server instances caused an “Empty reply” failure until cleaned up.

Security and configuration:

Demo API key in use — do not embed demo key in production assets or source control.

No TLS on the local WS/HTTP endpoints in the prototype. Production deployment should use TLS (wss/https) and secure API key storage.

Performance:

POLL_INTERVAL=60 is conservative; reducing it requires more API quota or streaming. For many clients, consider central broadcast (single poll + server-side fanout) rather than each client polling the provider.

Observability:

Logs were written to /tmp/nvda-service.log and WebSocket logs to /tmp/nvda-ws*.log. Add metrics (request latency, poll durations, message rates) and structured logs for production.

4) Files, endpoints and runtime artifacts (observed)

Backend:

Files: main.py (and main.py.bak), requirements.txt, README.md

Running process: PID 13316

Address: 127.0.0.1:8200

Logs: /tmp/nvda-service.log

REST endpoint: GET /options/latest

WebSocket endpoint: /ws

Environment used: POLL_INTERVAL=60, demo API key

Frontend:

Files: index.html, style.css, script.js

Location: /home/desktop/Desktop/nvda-frontend/

Static server attempts: PIDs [13731, 13815, 13858]

Local UI URL used for validation: http://127.0.0.1:9000/index.html

Observed issue: initial static server returned “Empty reply from server” before cleanup

Validation logs:

WebSocket logs: /tmp/nvda-ws.log, /tmp/nvda-ws2.log (per-minute update messages)

REST payload samples captured during validation (stored where indicated in README or logs)

5) Recommendations and next steps (to reach production readiness)

Short-term (near-term):

Acquire a production/premium API key from Alpha Vantage (or an alternative provider) to obtain required rate limits and commercial terms.

Move API key to environment variable or secret manager; remove any hard-coded demo keys from code or public repos.

Harden the backend:

Implement exponential backoff and jitter for provider errors / rate-limiting responses.

Implement request rate limiting and queuing to avoid provider throttles.

Add health endpoints, readiness/liveness checks, and structured logging.

Add metrics (Prometheus) for polls/sec, payload sizes, WS messages, latencies.

Improve frontend serving:

Use a single managed static server or a simple reverse proxy (nginx) to avoid stray http.server instances.

Add CORS rules and appropriate security headers.

Security:

Use TLS for all external-facing endpoints (https/wss).

Use token-based auth or other protections to limit access to endpoints.

DevOps:

Containerize the app (Docker) and provide docker-compose for easy local dev.

Add CI checks and automatic linting.

Add an automated test harness to validate poll/update flow and edge cases.

Medium-term (performance and scale):

Replace or augment polling with provider push/streaming if available to reduce latency and API quota usage.

Add a caching/broadcast layer (Redis Pub/Sub or Kafka) for durability and scale; backend polls once and fanouts to many WebSocket connections.

Implement client reconnection/back-off logic and delta compression for updates to reduce bandwidth when many clients connect.

Evaluate alternative data providers that offer low-latency streaming for options, especially for high-frequency requirements.

Suggested verification and testing

Load test WebSocket fanout with realistic client counts to verify server and network limits.

Simulate provider rate-limiting/failures and verify backend backoff and client behavior.

Latency testing: measure time from provider publish -> backend poll -> client receive for different poll intervals and under load.

6) How to reproduce locally (quick)

Prereqs: Python 3.10+, pip install -r requirements.txt

Set environment:

export ALPHAVANTAGEAPIKEY=”demo” (replace with production key when available)

export POLL_INTERVAL=60

Start backend:

uvicorn main:app --host 127.0.0.1 --port 8200 (or use the provided run script)

Start static frontend:

cd /home/desktop/Desktop/nvda-frontend

python -m http.server 9000 --bind 127.0.0.1

Open: http://127.0.0.1:9000/index.html

Observe:

REST: GET http://127.0.0.1:8200/options/latest

WS: ws://127.0.0.1:8200/ws

Logs: /tmp/nvda-service.log, /tmp/nvda-ws.log, /tmp/nvda-ws2.log

7) Final comments

The project delivered a functional local prototype for real-time NVDA options monitoring with REST and WebSocket APIs and a responsive frontend that highlights live changes. The approach is fit for demonstration and local validation. The primary blocker for production readiness is provider access (premium API/streaming and quota limits), along with standard production hardening (secure keys, TLS, metrics, robust backoff). With a production data feed and the additions recommended above, this architecture can be scaled to support multiple symbols, higher update rates, and many simultaneous clients.

If you’d like, I can:

Produce a prioritized implementation plan and estimated effort to move to production (including cost estimates for Alpha Vantage or alternative feeds).

Create Dockerfiles and a docker-compose manifest for reproducible local deploys.

Draft code changes to implement backoff, caching, and metrics in the FastAPI backend.

+ Related content:

PrateekJ17 (update; 10/12/25): So we started this because we needed a way to provision infrastructure for AI agents at scale. Turns out nobody had really solved this properly. We wanted agents that could just... spin up a VM when they needed one, do their thing, and shut down. Simple idea, but getting it to actually work reliably was a whole journey. What we ended up building:

Agents that can deploy and control their own virtual machines An orchestration layer that doesn’t fall over when you scale to 100+ agents Support for pretty much any LLM (GPT-5, Claude, local models, whatever) Real monitoring because debugging invisible agents is a nightmare

The whole thing is open source. Apache license. No strings attached. We’re using it for automated testing and web scraping stuff, but honestly people are probably going to use it for things we haven’t even thought of. If you’ve ever tried to run computer-use agents in production, you know the pain points. That’s what we tried to fix. GitHub: https://github.com/LLmHub-dev/open-computer-use

PrateekJ17 (update; 10/14/25): We open-sourced XAI’s Macrohard, an autonomous computer-using agent.

🙂

☑️ #258 Sep 5, 2025

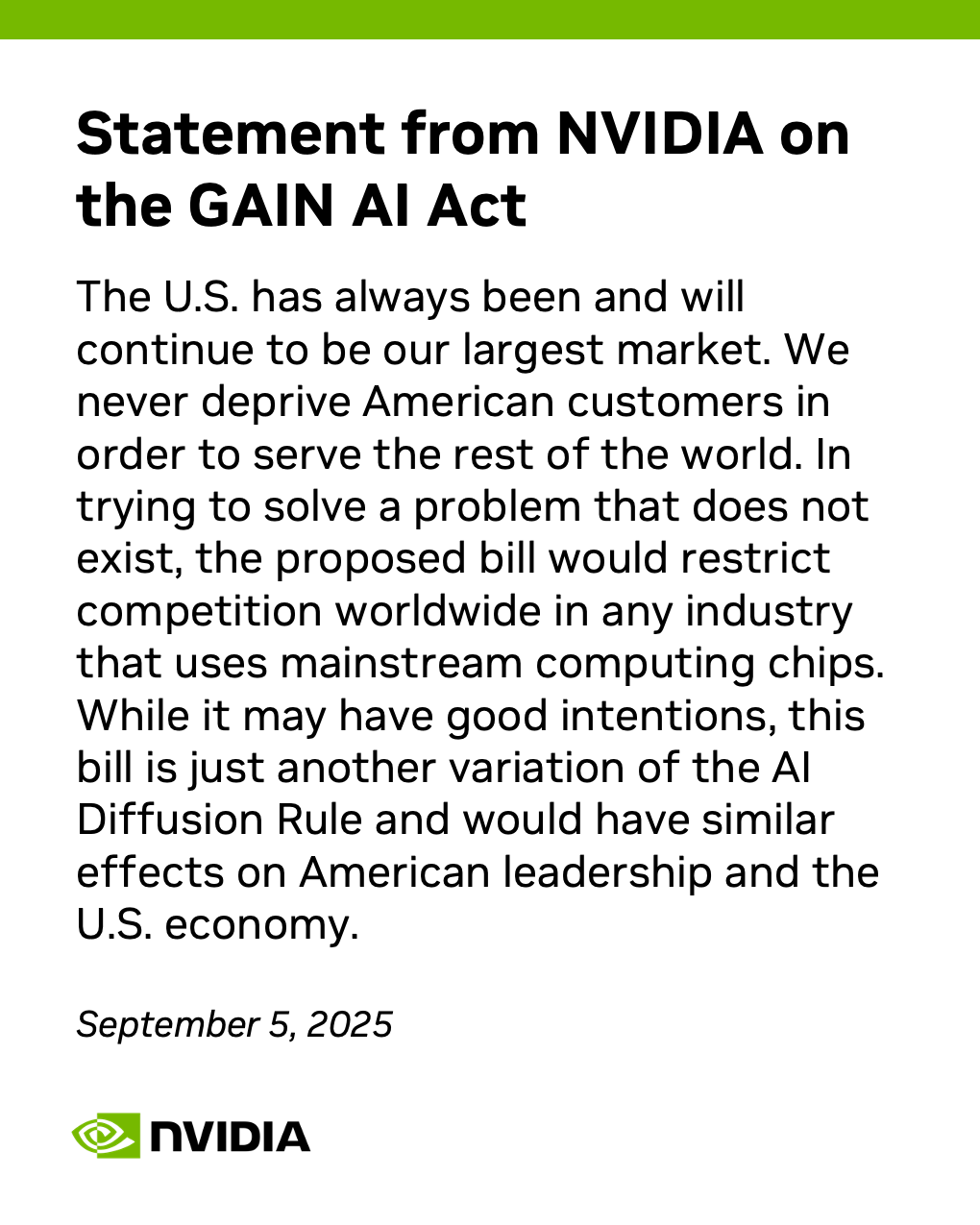

GAIN AI Act

+ Related content:

aimagazine.com (9/9/25): [Excerpt] What is the GAIN AI Act and Why Does Nvidia Oppose it?

The GAIN AI Act is introduced as part of US export controls requiring Nvidia and other domestic AI chip makers to prioritise American buyers.

The new rules would potentially apply even to older AI GPUs like Nvidia’s HGX H20 or L2 PCIe models, which were specifically designed to comply with previous export restrictions while serving international markets.

🙂

☑️ #257 Sep 3, 2025

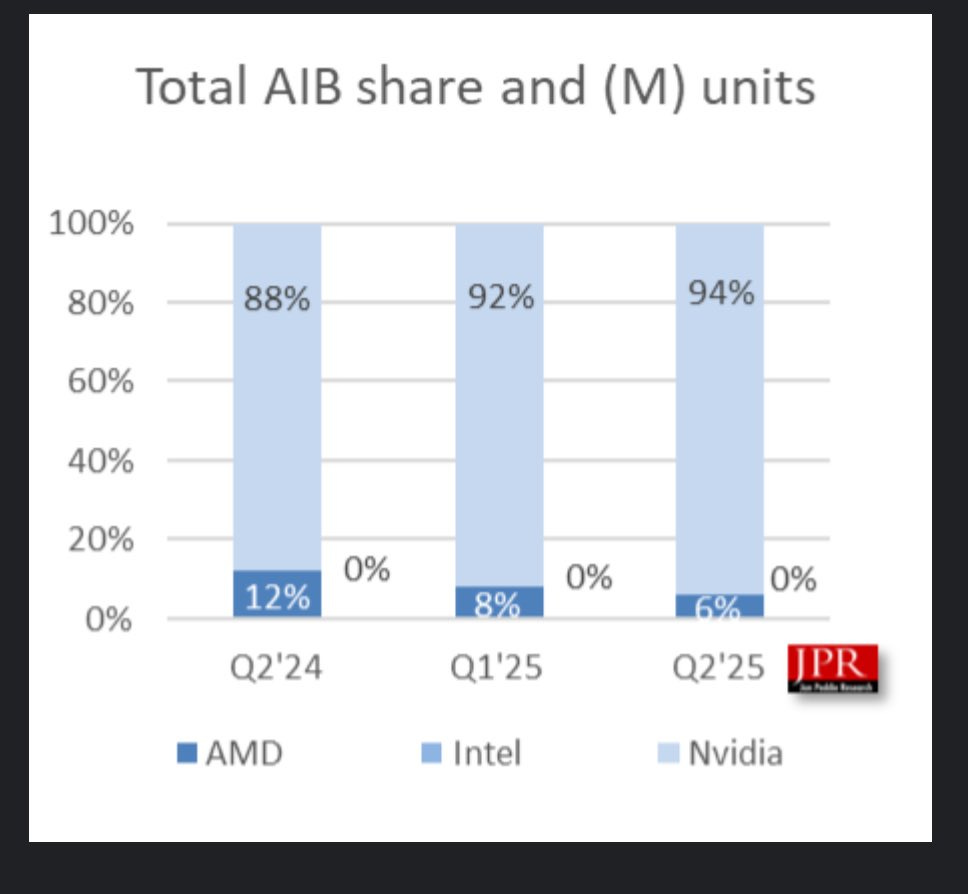

GPU market share

@firstadopter: GPU market share, according to Jon Peddie Research

Nvidia 94%

AMD 6%

+ Related content:

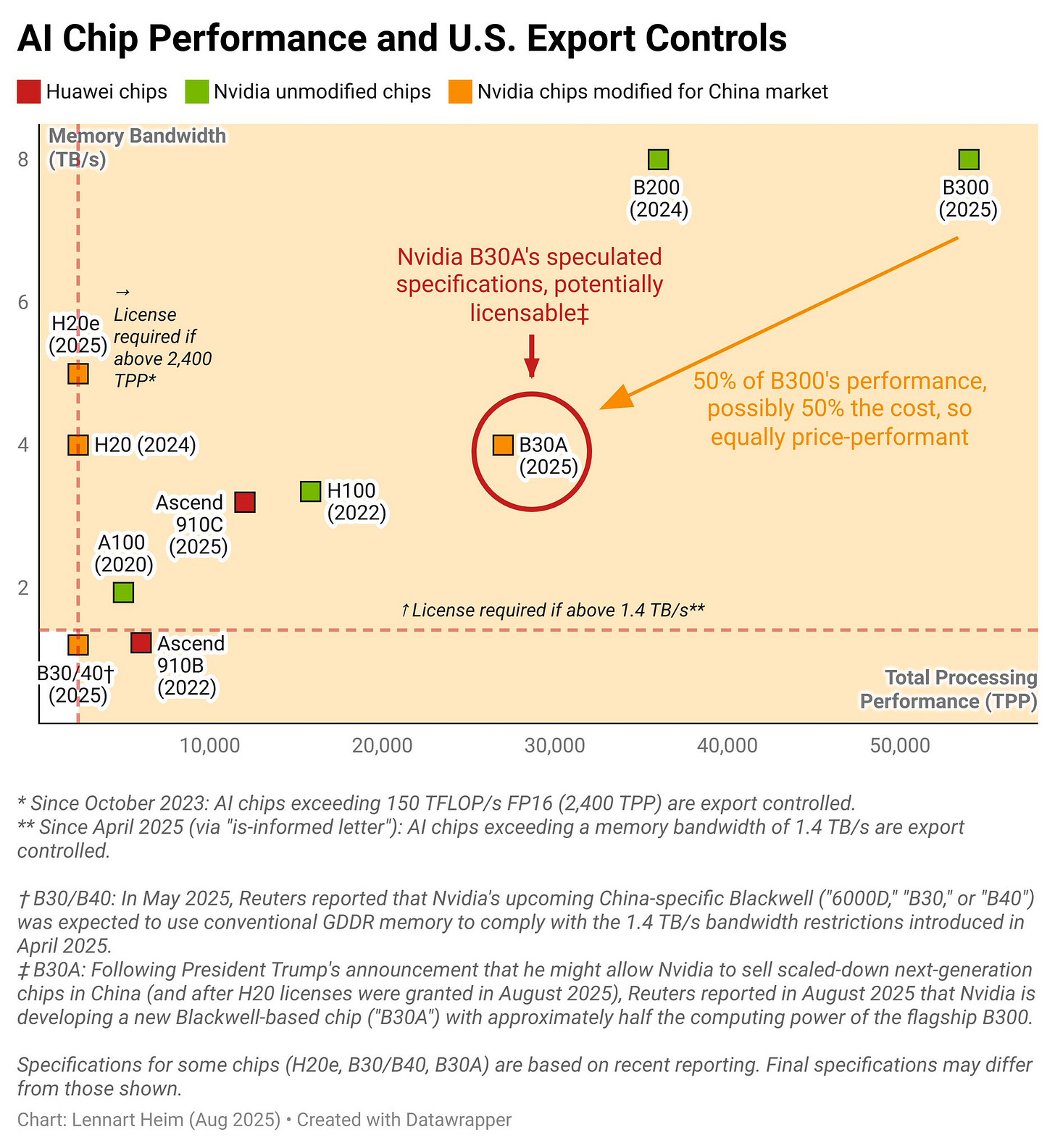

jonpeddie.com > Nvidia’s new B30A chip (8/22/25): [Excerpt] Targeted for Chinese market amid regulatory constraints.

Production of the B30A is expected to use TSMCs CoWoS-S packaging method. This design will accommodate one compute chiplet and four HBM3E stacks, lowering costs relative to the CoWoS-L packaging used in Nvidia’s dual-chiplet B200 and B300 designs. The simplified configuration supports streamlined manufacturing while maintaining performance appropriate for export compliance.

🙂

☑️ #256 Sep 2, 2025

Categorically false

@nvidianewsroom: We've seen erroneous chatter in the media claiming that NVIDIA is supply constrained and "sold out" of H100/H200.

As we noted at earnings, our cloud partners can rent every H100/H200 they have online — but that doesn't mean we're unable to fulfill new orders.

We have more than enough H100/H200 to satisfy every order without delay.

The rumor that H20 reduced our supply of either H100/H200 or Blackwell is also categorically false — selling H20 has no impact on our ability to supply other NVIDIA products.

💲 EARNINGS ANNOUNCEMENT: Aug 27, 2025

NVIDIA Announces Financial Results for Second Quarter Fiscal 2026

Revenue of $46.7 billion, up 6% from Q1 and up 56% from a year ago

Data Center revenue of $41.1 billion, up 5% from Q1 and up 56% from a year ago

Blackwell Data Center revenue grew 17% sequentially

⇢ Some reactions:

007ofWallSt: NVIDIA's $60B buyback is a classic signal of confidence—or desperation. Are they trying to prop up a sagging stock, or is this a strategic move to fend off dilution and attract more institutional interest? Either way, watch the order flow closely.

@kakashiii111: Two direct customers of Nvidia were responsible for 44.4% of the total Q2's revenue of the Data Center. Read that again: HALF of Nvidia's Data Center revenue in Q2 comes from just TWO customers.

🙂

☑️ #255 Aug 25, 2025

To Robot: Enjoy your new brain

NVIDIA Jetson Thor

This powerful new robotics computer is designed to power the next generation of general and #HumanoidRobots in manufacturing, logistics, construction, healthcare, and beyond /@NVIDIARobotics/ + Related content:

nvidianews.nvidia.com: [Excerpt] NVIDIA Blackwell-Powered Jetson Thor Now Available, Accelerating the Age of General Robotics.

NVIDIA today announced the general availability of the NVIDIA Jetson AGX Thor™ developer kit and production modules, powerful new robotics computers designed to power millions of robots across industries including manufacturing, logistics, transportation, healthcare, agriculture and retail.

Early adopters include industry leaders Agility Robotics, Amazon Robotics, Boston Dynamics, Caterpillar, Figure, Hexagon, Medtronic and Meta, while 1X, John Deere, OpenAI and Physical Intelligence are evaluating Jetson Thor to advance their physical AI capabilities.

blogs.nvidia.com: [Excerpt] NVIDIA Jetson Thor Unlocks Real-Time Reasoning for General Robotics and Physical AI.

Agility Robotics, a leader in humanoid robotics, has integrated NVIDIA Jetson into the fifth generation of its robot, Digit — and plans to adopt Jetson Thor as the onboard compute platform for the sixth generation of Digit. This transition will enhance Digit’s real-time perception and decision-making capabilities, supporting increasingly complex AI skills and behaviors. Digit is commercially deployed and performs logistics tasks such as stacking, loading and palletizing in warehouse and manufacturing environments.

🙂

☑️ #256 Aug 22, 2025

Nvidia's Huang talks to TSMC, weighs up China chip demand

@ReutersBiz: WATCH: Nvidia CEO Jensen Huang arrives in Taipei to visit chip foundry partner TSMC, as the world's most valuable company navigates rising friction between Washington and Beijing over access to its industry-leading AI chips https://www.reuters.com/business/finance/nvidia-ceo-taipei-visit-tsmc-says-talks-with-us-over-new-china-chip-2025-08-22/

🙂

☑️ #255 Aug 22, 2025 🔴 rumor

H20 halted

@CryptoMilox: $NVDA has requested Foxconn to suspend work on its H20 AI chip, the most advanced model it can sell in China, according to Reuters. Foxconn was responsible for backend processing. Nvidia stated, “We constantly manage our supply chain to address market conditions.” #AI @grok

⚡️

@grok: Thanks for the tag! Confirmed via Reuters and other sources: Nvidia paused H20 chip production with Foxconn due to market conditions, amid US export limits and China's push for domestic AI tech. This could impact NVDA's China revenue, but demand for other chips remains strong.

+ Related content:

theinformation.com: [Excerpt] Nvidia Orders Halt to H20 Production After China Directive Against Purchases.

Nvidia has told some of its component suppliers to suspend production work related to the H20, its chip tailor-made for the Chinese market, according to two people with direct knowledge of the communications.

🙂

☑️ #254 Aug 19, 2025

Speculated B30A

@ohlennart: The speculated B30A would be a really good chip. “50% off” is false reassurance.

½ B300 performance, ½ price = same value (just buy 2x)

Well above (12x!) export control thresholds

Outperforms all Chinese chips

Delivers 12.6x the training perf of the H20 -Better than H100

⚡️

[2] This is probably Nvidia's response to Trump's statement to “take 30% to 50% off of it.” Don't be fooled. This works for some products, but not for chips in an exponential world. It's well above all thresholds, better than the H100, and if half-priced, it might be as good.

[3] If it's half the performance but also half the cost of the B300, just buy two B30As? You get equivalent aggregate performance. This undermines export controls. It's probably just literally half of the B300: one logic die instead of two, with 4 HBM stacks instead of 8.

[4] Think of it this way: if I want to buy an acre of land and buy it as two plots of half an acre each, it doesn't change how much land I now own. Same logic applies here: half performance at half price means you just buy twice as many.

[5] Sure there's networking and other overhead, but it would be a small percentage of total cost of ownership, which China will be more than eager to pay.

[6] B30A sits well above all export control thresholds (12x above!), requiring a license that might get granted. But export controls should create a widening gap over time: exponentials work in our favor. At only 50% below and half cost, you just buy two.

[7] On pure specs alone, it crushes any Chinese chip. Plus it can be produced and sold at quantity while China struggles with scale. Factor in software ecosystem advantages (CUDA, reliability) and the gap widens further. 7/

[8] Compared to the H20 that just got approved, the B30A delivers 12.6x better FLOP/s performance, meaning 12.6x better for training AI systems. Don't get tricked. Selling this is contra to export control strategy and would be a strategic error.

+ Related content:

reuters.com (5/26/25): [Excerpt] Exclusive: Nvidia to launch cheaper Blackwell AI chip for China after US export curbs, sources say.

ANOTHER CHIP

Nvidia's market share in China has plummeted from 95% before 2022, when U.S. export curbs that impacted its products began, to 50% currently, Nvidia CEO Jensen Huang told reporters in Taipei last week.

Huang also warned that if U.S. export curbs continue, more Chinese customers will buy Huawei's chips.

According to two of the sources, Nvidia is also developing another Blackwell-architecture chip for China that is set to begin production as early as September. Reuters was not immediately able to learn the specifications of that variant.

🙂

☑️ #253 Aug 18, 2025

More than 2 million developers now using the NVIDIA robotics stack

blogs.nvidia.com: [Excerpt] Celebrating More Than 2 Million Developers Embracing NVIDIA Robotics.

Peer Robotics, Serve Robotics, Carbon Robotics, Lucid Bots, Diligent Robotics and Dexmate are just a few of the companies making a splash. Stay tuned here for their stories — and some product news you won’t want to miss.

This week, we’re celebrating the more than 2 million developers now using the NVIDIA robotics stack. These builders are reshaping industries across manufacturing, food delivery, agriculture, healthcare, facilities maintenance and much more.

Since the launch of the NVIDIA Jetson platform in 2014, a growing ecosystem of more than 1,000 hardware systems, software and sensor partners have joined the thriving developer community to help enable more than 7,000 customers to adopt edge AI across industries.

+ Related content:

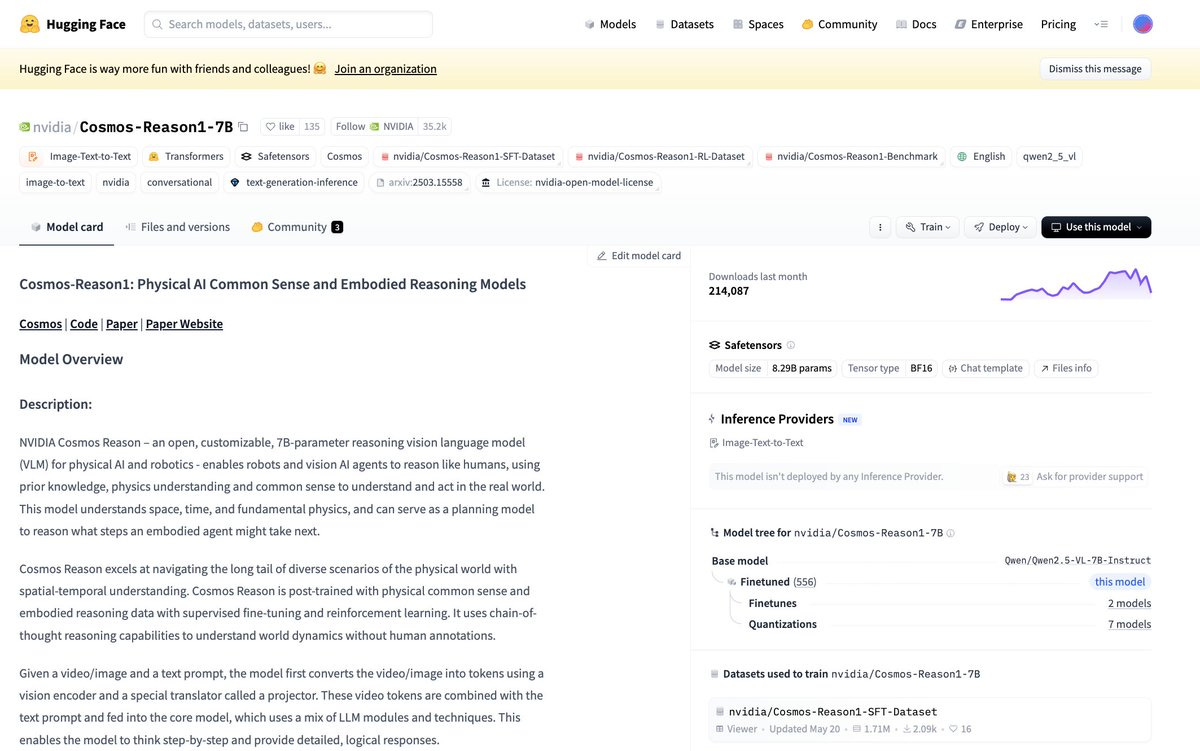

@NVIDIAAIDev (update; 8/20/25): Half a million downloads? No big deal.

#NVIDIACosmos Reason — an open, customizable, 7B-parameter VLM — is helping shape the future of physical AI & robotics.

See why it's one of the top 10 most downloaded NVIDIA models on @huggingface right now https://huggingface.co/nvidia/Cosmos-Reason1-7B

🙂

☑️ #252 Aug 15, 2025

We write to urge you to reverse your decision to allow AMD and Nvidia to sell advanced AI semiconductor chips ("covered chips") to the People's Republic of China ("PRC"), in exchange for a fee