“AI datacenters will be built next to energy production sites that can produce gigawatt-scale, low-cost, low-emission electricity continuously. Basically, next to nuclear power plants. The advantage is that there is no need for expensive and wasteful long-distance distribution infrastructure.“ /@Yann LeCun/

☑️ #96 Sep 5, 2025

XPUs

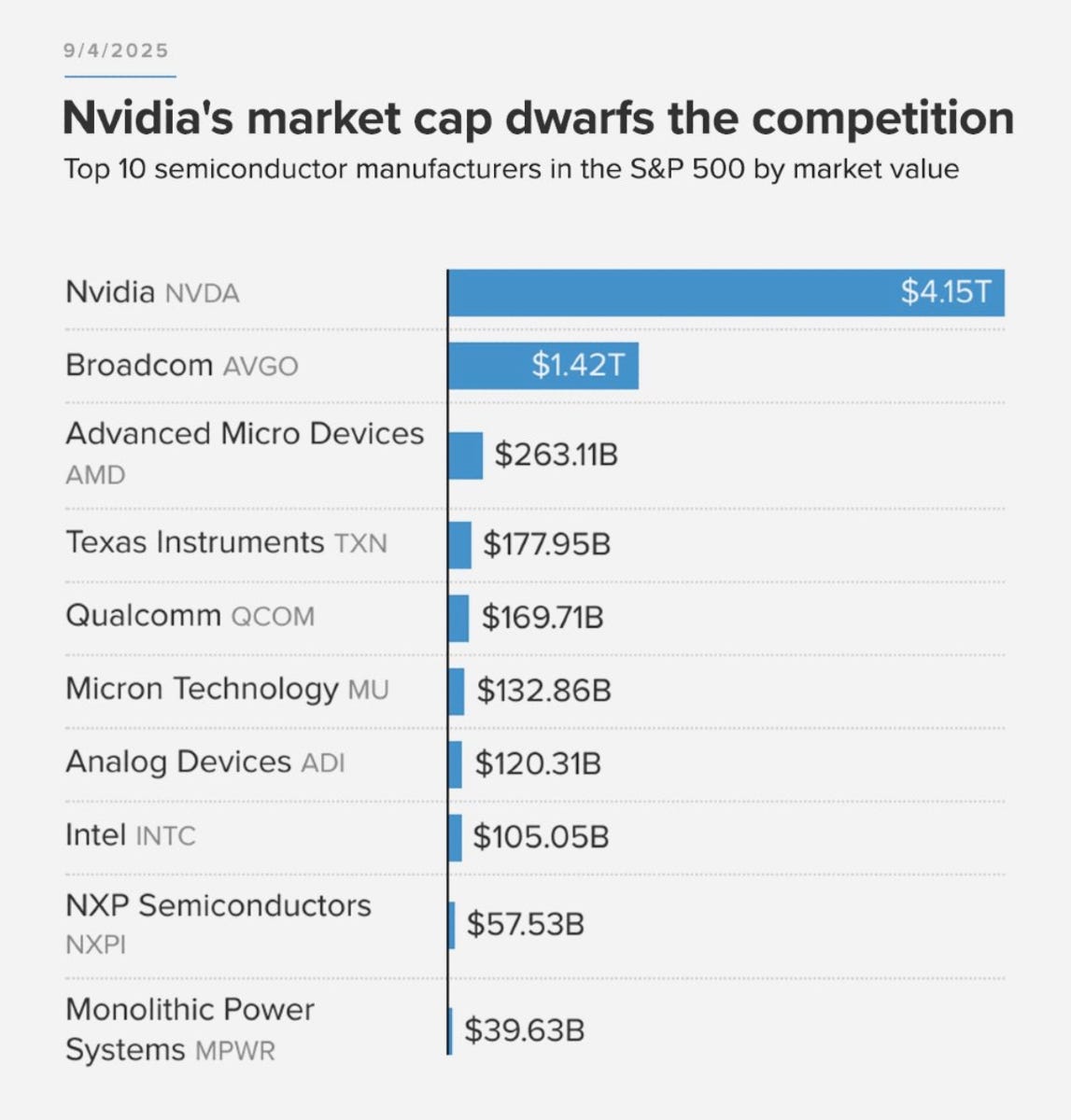

@stocksharks_: Broadcom has emerged as a big winner in the AI boom with its XPU accelerator chips—cheaper and simpler than Nvidia’s GPUs, but built to run specific AI programs with high efficiency

+ Related content:

goldmansachs.com (9/4/25): [Excerpt] Key stock takeaways:

We expect the stock to trade up following a solid quarter with guidance above the Street, despite elevated expectations heading into the quarter.

We believe the most significant development was Broadcom's announcement that it has converted another new custom silicon customer focused on inference, which is expected to help drive "material" upside to management's prior expectation of Al Semiconductor revenue growth of -60% in 2026. The company's Al Networking prospects remain compelling heading into 2026 with significant scale-up and scale-out opportunities driven by its Tomahawk 6 and Jericho 4 products.

Management noted that it currently has a total backlog of over $110bn, which implies significant business visibility over the next two years. Finally, the company announced that CEO Hock Tan has announced his intention to remain CEO through at least 2030. We remain Buy rated on Broadcom as we see the company remaining the leader in Al custom compute and merchant networking silicon (which is likely to grow in importance), and we believe the company's enterprise software portfolio is under appreciated

ft.com (9/4/25): OpenAI committing to $10bn in orders of the new “XPUs”, to be used internally.

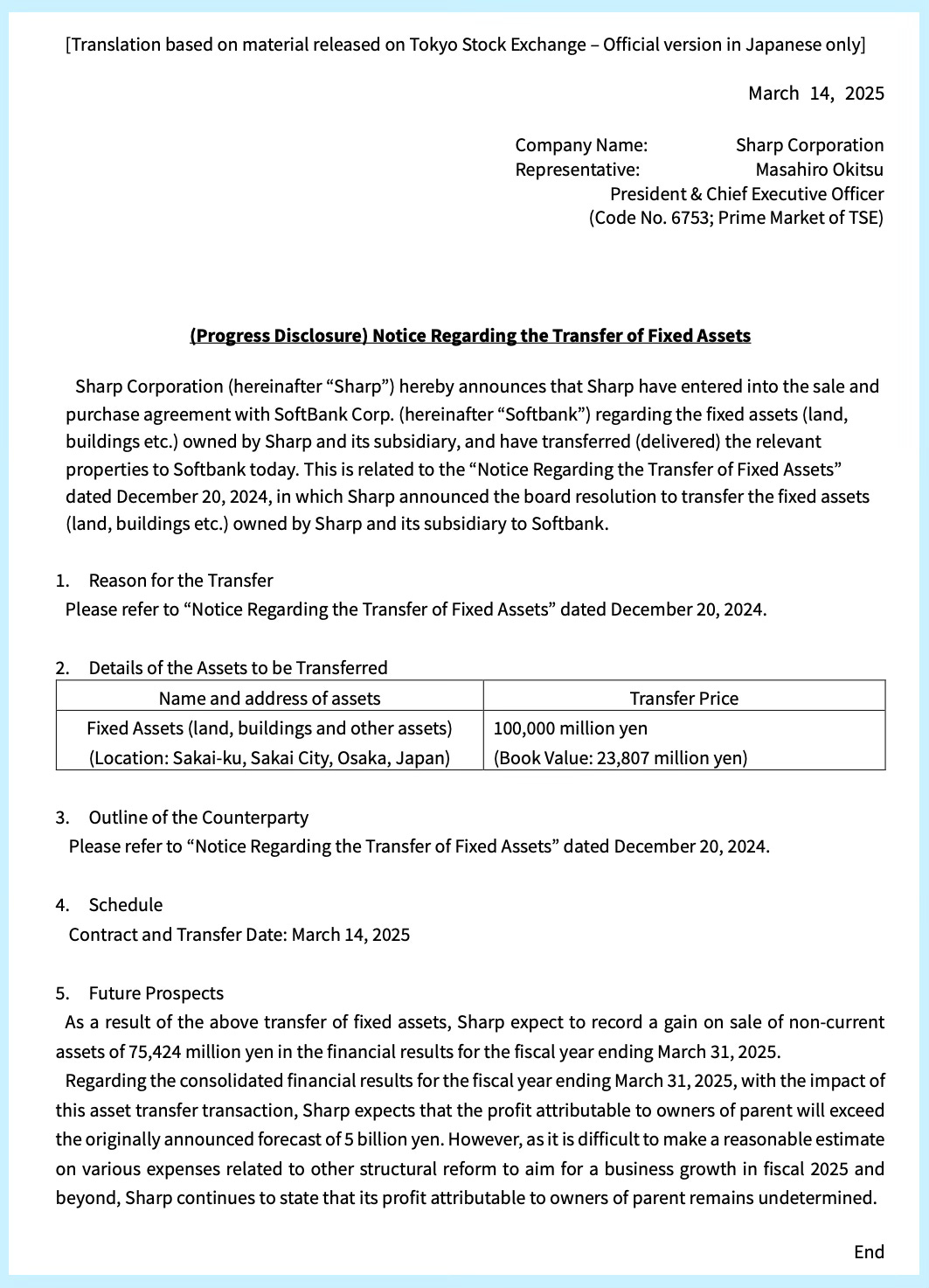

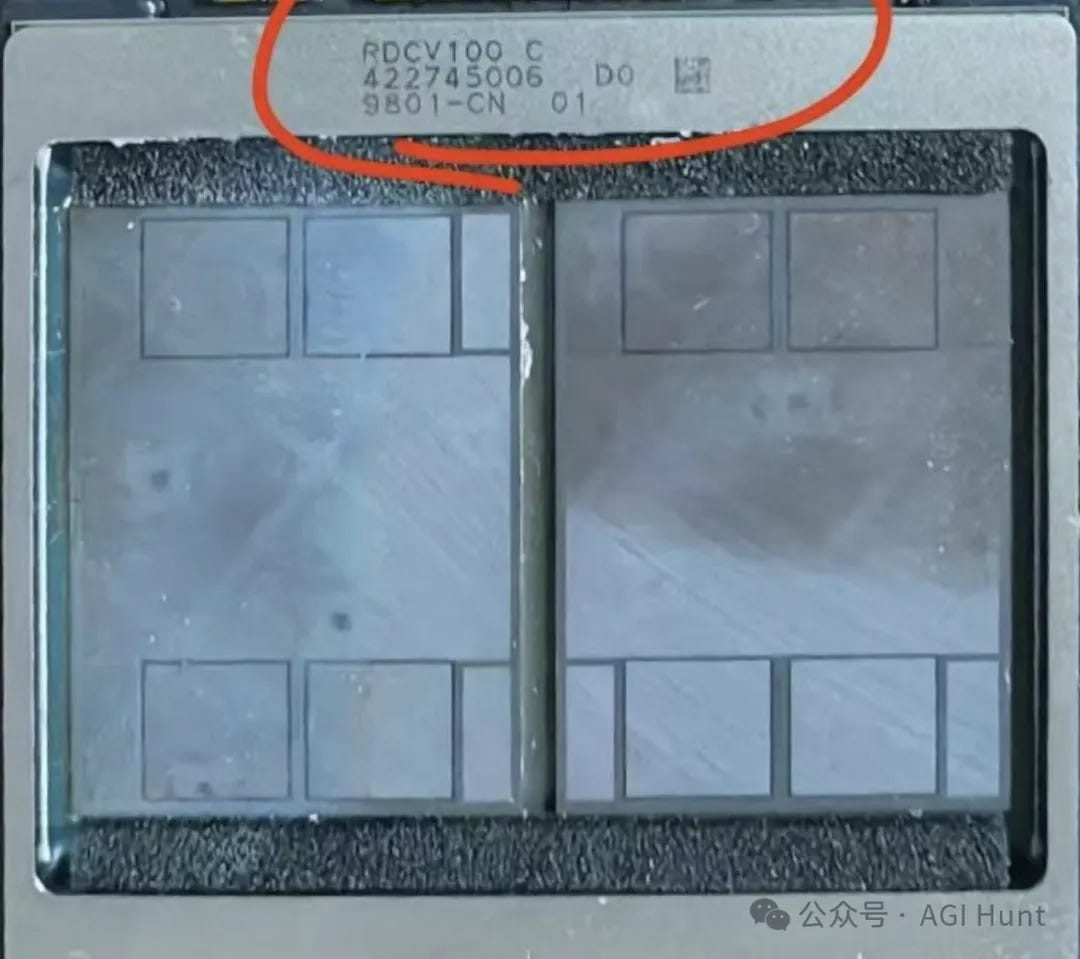

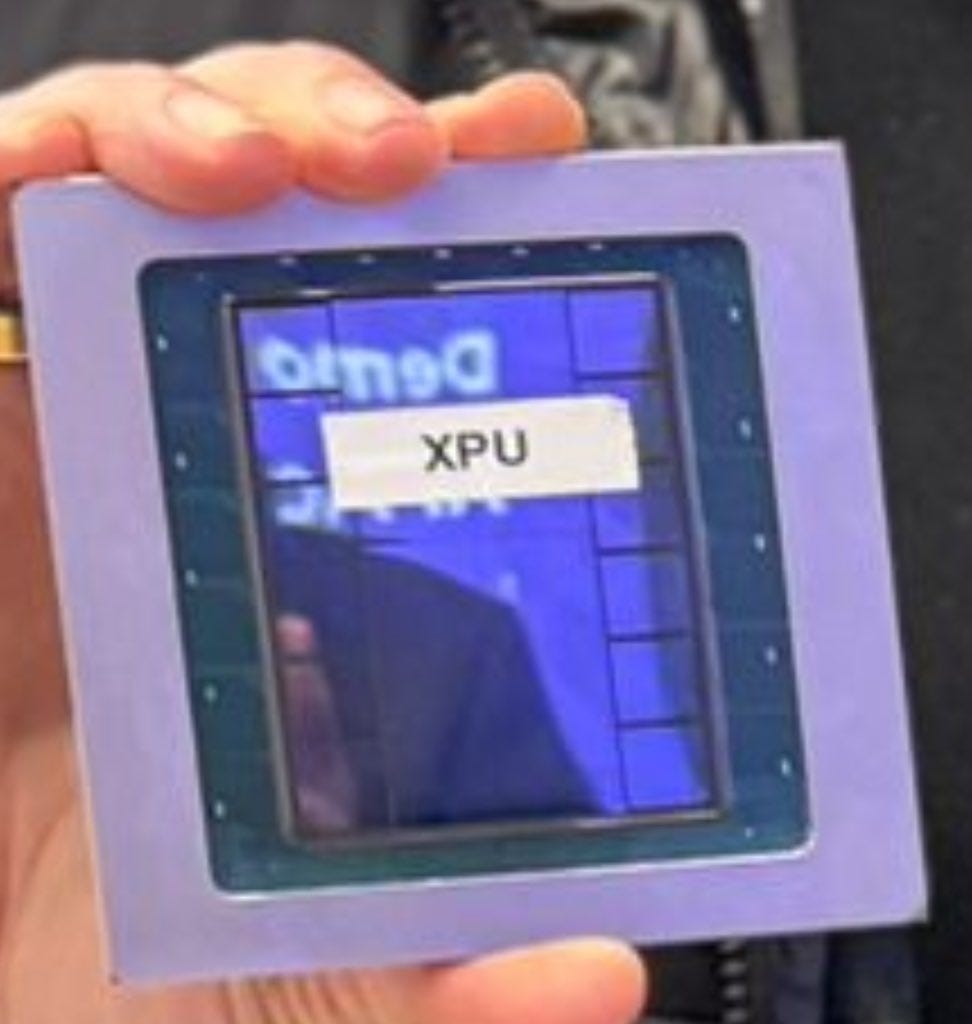

@PatrickMoorhead (3/21/24): Here’s another fun one. The guy who’s smiling (Frank Ostojic) runs @Broadcom’s custom silicon group. He should be smiling as he announced that he has a third XPU design from a large “consumer AI company.” To the right is a close up of the XPU. You can see the 2 compute units on the center and all the HBM to the left and right. A full up custom SoC with lots and lots of compute, HBM, very high speed intra chip connectivity and, as you’d expect, the highest performance external networking.

@PatrickMoorhead (3/21/24): Some fun pics from the @Broadcom AI investor day today. This is obviously a wafer in its protective case. This “XPU” (AI accelerator) chip uses a 3D package. The top die is a 700mm2 accelerator on top of an 800m2 SRAM. Yes I’m surprised as: A/ I don’t know $AVGO had 3D chops. (It does). B/ I didn’t know the company did SOCs (I mistakenly thought its XPUs were ASICs like networking) Its XPUs are full SoCs with an accelerator, HBM, BOATLOADS of networking and PHYs

🙂

☑️ #95 Sep 2, 2025

VEU reversal

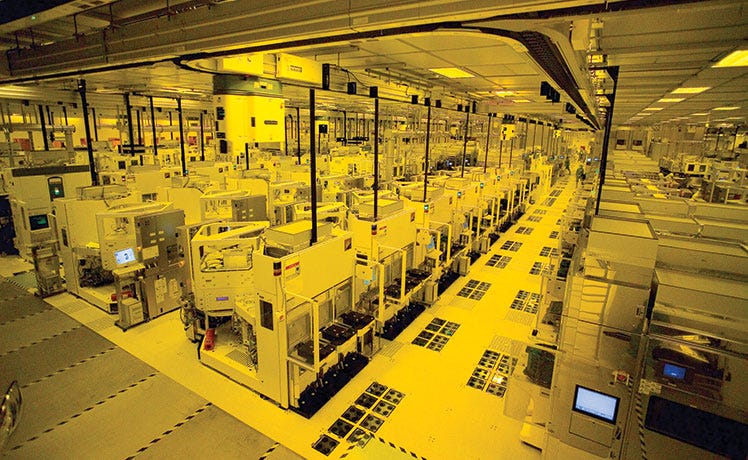

siliconangle.com: [Excerpt] US limits TSMC’s ability to send chipmaking equipment to its fabs in China.

The Taiwanese company uses hardware from several U.S. equipment suppliers to power its fabs. One of the largest companies on the list, Applied Materials Inc., makes machines for the deposition and etching phases of the chip production workflow. The company’s systems can deposit various materials on a silicon wafer and then form them into transistors.

TSMC also counts Milpitas, California-based KLA Corp. as a supplier. The latter company makes equipment for monitoring the reliability of chip production lines. Shares of KLA and Applied Materials dropped 3% and 2%, respectively, today on the revocation of TSMC’s VEU authorization.

“While we are evaluating the situation and taking appropriate measures, including communicating with the US government, we remain fully committed to ensuring the uninterrupted operation of TSMC Nanjing,” TSMC said in a statement today.

The Commerce Department previously revoked the VEU licenses of SK hynix Inc. and Samsung Electronics Co., the world’s two largest memory suppliers. Officials stated at the time that the companies may continue operating their fabs in China, but won’t be permitted to make upgrades or add manufacturing capacity. SK hynix maintains a flash chip facility in Nanjing that it bought from Intel Corp. a few years ago.

+ Related content:

bis.gov > Federal Register Notices (update; 9/2/25): [Excerpt] [FR Citation: FR 42321] Revocation of Validated End-User Authorizations in the People's Republic of China.

In this final rule, the Bureau of Industry and Security (BIS) amends the Export Administration Regulations (EAR) to revise the existing Validated End-User (VEU) Authorizations list for the People's Republic of China (PRC) by removing Intel Semiconductor (Dalian) Ltd; Samsung China Semiconductor Co. Ltd; and SK hynix Semiconductor (China) Ltd.

media.bis.gov (8/28/25): [Excerpt] Department of Commerce Closes Export Controls Loophole for Foreign-Owned Semiconductor Fabs in China.

WASHINGTON, D.C. — Today, the Department of Commerce’s Bureau of Industry and Security (BIS) closed a Biden-era loophole that allowed a handful of foreign companies to export semiconductor manufacturing equipment and technology to China license-free. Now these companies will need to obtain licenses to export their technology, putting them on par with their competitors.

The loophole is known as the Validated End-User (VEU) program. In 2023, the Biden Administration expanded the VEU program to allow a select group of foreign semiconductor manufacturers to export most U.S.-origin goods, software, and technology license-free to manufacture semiconductors in China. No U.S.-owned fab has this privilege — and now, following today’s decision, no foreign-owned fab will have it either.

Former VEU participants will have 120 days following publication of the rule in the Federal Register to apply for and obtain export licenses. Going forward, BIS intends to grant export license applications to allow former VEU participants to operate their existing fabs in China. However, BIS does not intend to grant licenses to expand capacity or upgrade technology at fabs in China. Read more

koreaherald.com (8/30/25): US to require Samsung, SK hynix to acquire licenses for sending US chipmaking tools to China.

The United States Commerce Department announced a plan Friday to strip South Korean tech firms Samsung Electronics and SK hynix Inc. of "validated end-user (VEU)" status, a move that will require them to secure licenses for sending certain US chipmaking equipment to their plants in China.

On the Federal Register, the department's Bureau of Industry and Security said it will revise the existing VEU authorizations list for China by removing the companies as well as Intel Semiconductor Ltd. The department stressed its intention not to grant licenses to expand their capacity or upgrade technology at plants in China.

VEU authorization for TSMC Nanjing will be revoked effective December 31, 2025

🙂

☑️ #94 Aug 30, 2025

NEBIUS: “Yes, we are behind schedule — around two months late. Instead of 4 months, we will do it in 6. Not because of design flaws or team issues, but simply due to late deliveries from a few suppliers“

linkedin.com > Charles-Antoine Beyney: 🚀 50MW now. 300MW next. And one innovation that will change everything.

It’s been a little over a month since my last update — and here we are, about to finalize Phase 1 and soon Phase 2, with the first 50MW being delivered to our customer Nebius .

Yes, we are behind schedule — around two months late. Instead of 4 months, we will do it in 6. Not because of design flaws or team issues, but simply due to late deliveries from a few suppliers (no names here… but they know 😡).

On the other hand, some partners have been rock solid:

• Carrier HVAC delivered on time and supported us every step of the way 👏

• Our internal GC team at North East Precast went above and beyond. You are LEGENDARY. Mega kudos — we love you guys!

• Our engineering teams (kept discreet for a reason 😉) — you are beyond legendary. Thank you a million.

🔜 What’s next?

Not much… just 300MW more to deliver in the next 6–9 months. That includes not only the Datacenter but also the Power Plant, the Cooling Plant (yes, at this scale chilled water is no longer generated inside the DC but in a dedicated mega plant)… and much more.

💡 And in about 45 days, we’ll unveil at least one innovation that will change this industry forever — and many others.

I genuinely believe you’ll love DataOne as much as we do after that (okay, I may be biased 😉). But trust me, this will blow your mind. And here’s a challenge:

👉 If anyone guesses what this innovation is, I’ll personally offer a business class ticket from wherever you live, bring you to Vineland for a full private tour, and host you for 2 days in NYC (all on me — yes, even SAF is included, we compensate carbon at DataOne).

Stay tuned. The future of datacenters is being built right here, right now. 🌍⚡

hashtag#AI hashtag#Datacenters hashtag#Innovation hashtag#EnergyTransition hashtag#DataOne

+ Related content:

nebius.com (3/5/25): [Excerpt] We’re introducing the 300 MW New Jersey region and expanding to Iceland.

We’re thrilled to announce a major upgrade to our US-based compute capacity. To bring it to life, we’ve joined forces with DataOne, an AI hosting infrastructure company, to ensure that the first phase of the New Jersey facility goes live this summer. We’re also launching a colocation facility in Iceland with Verne, a provider of sustainably powered data centers across the Nordics, and expect it to go live this month.

New Jersey custom-built data center

The collaboration between Nebius and DataOne takes a distinctive approach to DC construction and energy efficiency. The facility will be built using Nebius’ own design, while the partnership leverages DataOne’s expertise to achieve an ambitious goal: constructing a state-of-the-art data center in just 20 weeks.

At the core of the facility is an innovative approach to power generation. We’ll leverage behind-the-meter electricity and advanced energy technology to maximize sustainability while simultaneously strengthening operational reliability — exactly what AI innovators need for their workloads.

The New Jersey site — our first major data center in the US — will go live phase by phase and is expandable up to a total of 300 MW. We previously committed to 100 MW of installed capacity by the end of 2025, and we are prepared to accelerate beyond that if required to meet demand.

dataone.eu : Vineland NJ

• 350 MW IT load

• 65 000 sqm over 44ha of land

• Green Gas powered

• Tier-3 compatible, ISO27001, 14001, 5001.

nebius.com: [Excerpt] Infrastructure for the age of AI.

Based in Amsterdam. Listed on Nasdaq. Operating worldwide.

AI is no longer a distant promise — it is becoming a defining force impacting every aspect of our lives. To fulfill its potential as a general-purpose technology that benefits all of society, AI needs purpose-built infrastructure: vast amounts of compute, optimized software and hardware, and collaborative ecosystems.

This is what Nebius builds: vertically integrated AI infrastructure that accelerates AI innovation globally and at scale. With large-scale GPU clusters deployed across Europe and the US, Nebius’s full-stack cloud platform combines the scale, flexibility and reliability of a hyperscaler with the power and performance of a supercomputer.

We serve a fast-growing ecosystem of AI innovators of all sizes — from startups to research institutes to enterprises — across sectors including healthcare and life sciences, robotics, finance and entertainment, as well as national AI programs.

The Nebius team includes hundreds of engineers with deep expertise in building world-class tech infrastructure, as well as in-house AI R&D. Headquartered in Amsterdam and listed on Nasdaq (NBIS), Nebius has a global footprint, with hubs across Europe, North America and the Middle East.

🙂

☑️ #93 Aug 30, 2025

[xAI Code Repo] xAI is already suing Li for trade secret theft, seeking damages and an injunction to stop him from using or sharing the info

@muskonomy: BREAKING: Elon Musk confirms ex-xAI engineer joined OpenAI and “uploaded xAI’s entire codebase”

The engineer had earlier sold $7M worth of his xAI stock before leaving now facing a lawsuit for stealing Grok trade secrets

+ Related content:

@elonmusk: Not allegedly. He downloaded the entire xAI code repo, the logs prove he did it and he admitted he did it!

apps.cand.uscourts.gov > Case Number > 3:25-cv-07292 (8/28/25): X.AI Corp. et al v. Li. Defend Trade Secrets Act (of 2016)

dockets.justia.com: Docket Report

Misappropriating trade secrets is legally considered theft under U.S. laws like the Defend Trade Secrets Act.

🙂

☑️ #92 Aug 30, 2025 🔴 rumor

Alibaba new AI Chip: not designed for training Al models, but only for inference

wsj.com: [Excerpts] Alibaba Creates AI Chip to Help China Fill Nvidia Void. Chinese tech companies spark market exuberance by signaling they are catching up to U.S.

SINGAPORE-Chinese chip companies and artificial-intelligence developers are building up their arsenal of homegrown technology, backed by a government determined to win the Al race with the U.S.

The rapid adoption of AI across China’s economy is creating a big demand for inference—when AI programs tap their training to deliver output such as a smartphone voice assistant’s answers. Inference typically doesn’t require the most advanced chips.

Previous cloud-computing chips developed by Alibaba have mostly been designed for specific applications. The new chip, now in testing, is meant to serve a broader range of AI inference tasks, said people familiar with it.

The chip is manufactured by a Chinese company, they said, in contrast to an earlier Alibaba AI processor that was fabricated by Taiwan Semiconductor Manufacturing. Washington has blocked TSMC from manufacturing AI chips for China that use leading-edge technology.

+ Related content:

Other replacements for the H20:

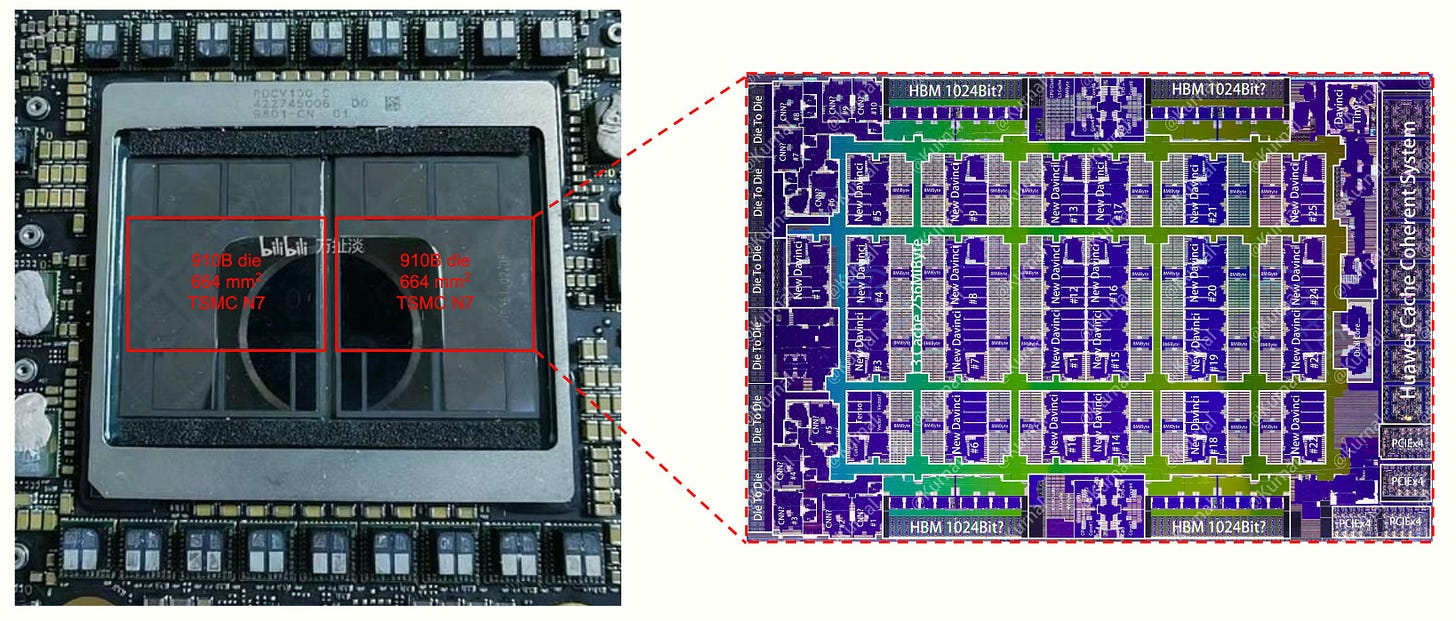

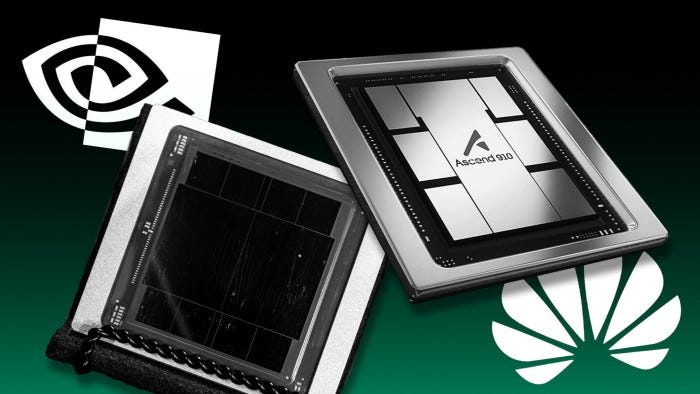

e.huawei.com: Huawei Ascend 910B and 910C

Based on the Ascend series (Huawei Ascend) AI processor, Huawei Ascend computing includes Atlas series modules, boards, small stations, servers, clusters and other product forms, creating a full-scene AI infrastructure scheme for "end, edge, cloud". ... Based on the HUAWEI Ascend AI processor and basic software, build Atlas artificial intelligence computing solutions, including Atlas series modules, boards, small stations, servers, clusters and other rich product forms, creating a full-scene A for "end, edge, cloud" I infrastructure scheme covers the whole process of reasoning and training in the field of deep learning.

e.huawei.com > Shengteng calculation (8/30/25: New Huawi 96GB GPU

cambricon.com > Siyuan 590 Series

metax-tech.com: Xisi N100 (MXN Series)

🙂

☑️ #91 Aug 28, 2025

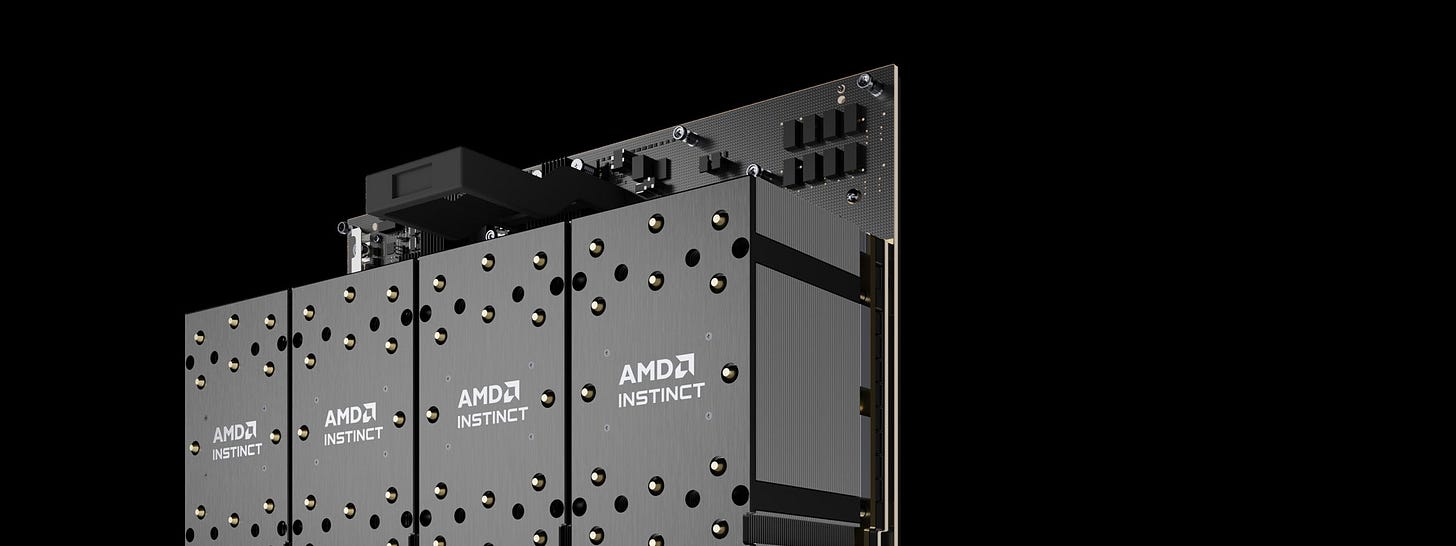

AMD’s ecosystem

@AMD: AMD is making a bold move to strengthen its global partner ecosystem.

We're increasing channel investment by double digits, unifying programs worldwide, and deepening collaboration with distributors, OEMs & solution providers across SMB and enterprise. Get the full story from @DylanOnChips in @CRN: https://crn.com/news/components-peripherals/2025/exclusive-amd-makes-big-channel-funding-boost-as-it-builds-true-partner-program

🙂

☑️ #90 Aug 25, 2025

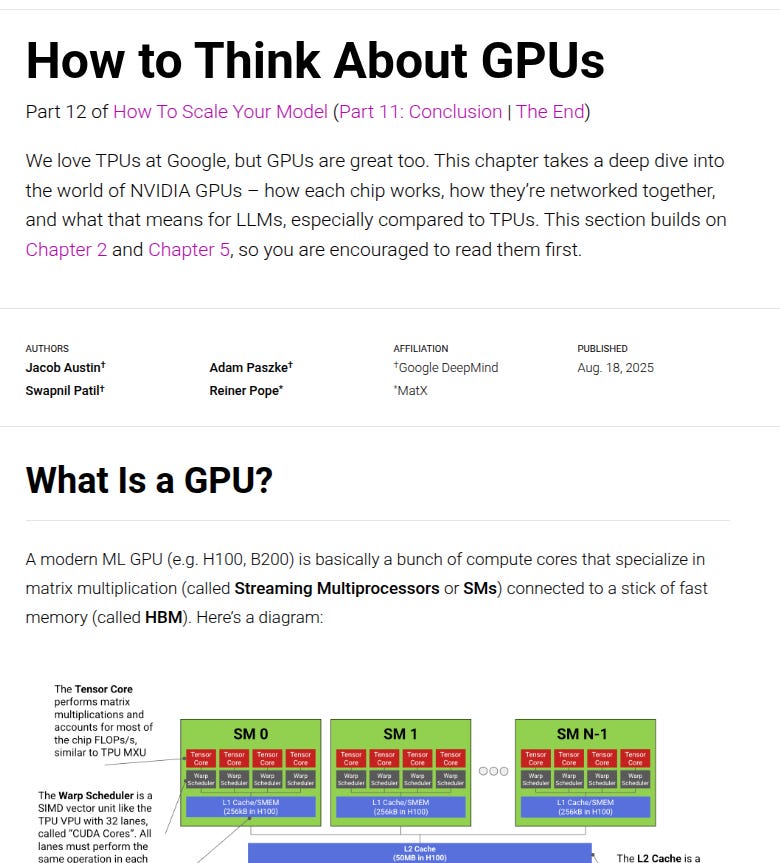

How to think about GPUs

@rohanpaul_ai: A team at DeepMind wrote this solid piece on how you must think about GPUs. Essential for AI engineers and researchers.

+ Related content:

jax-ml.github.io: [Excerpt] How to Think About GPUs.

Part 12 of How To Scale Your Model (Part 11: Conclusion | The End)

We love TPUs at Google, but GPUs are great too. This chapter takes a deep dive into the world of NVIDIA GPUs – how each chip works, how they’re networked together, and what that means for LLMs, especially compared to TPUs. This section builds on Chapter 2 and Chapter 5, so you are encouraged to read them first.

🙂

☑️ #89 Aug 25, 2025

TMSC cuts Chinese tools

@NikkeiAsia: BREAKING: TSMC will not use Chinese tools in its latest 2-nanometer chipmaking production lines -- the most advanced in the entire industry -- which will go into mass production this year.

+ Related content:

@StockMKTNewz (8/22/25): Nvidia $NVDA CEO Jensen Huang just said Taiwan Semiconductor $TSM is:

"One Of The Greatest Companies In The History Of Humanity”

🙂

☑️ #88 Aug 22, 2025

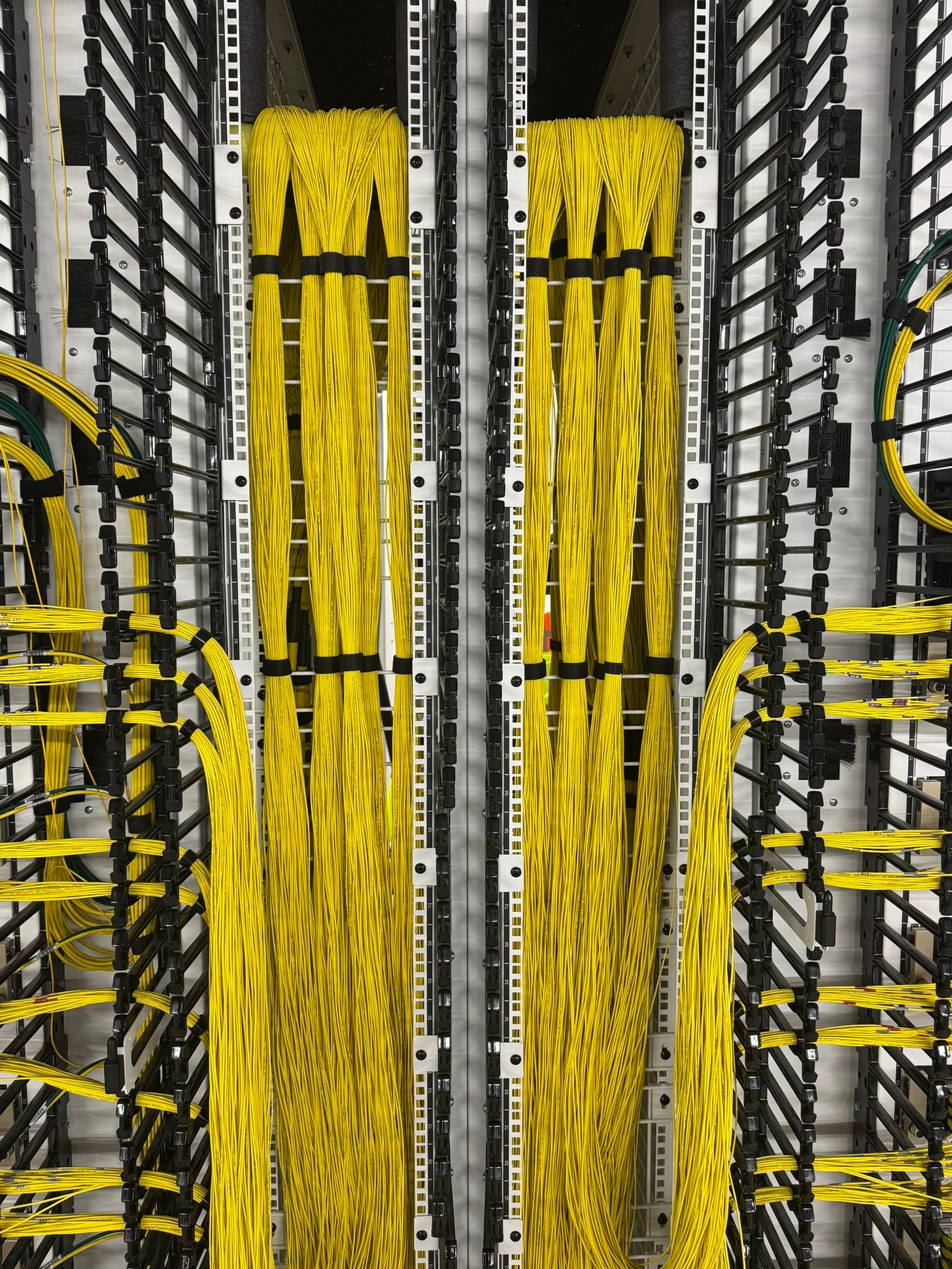

Lambda AI Cluster Tour with Supermicro NVIDIA HGX B200 ft.

@Supermicro: Join us for an inside look at the Lambda AI Cluster in Columbus, Ohio. Learn more: https://www.supermicro.com/en/success-story/lambda

Patrick from ServeTheHome takes us on exclusive tour of Supermicro’s cutting-edge NVIDIA B200 AI cluster, built for Lambda and housed at Cologix in Columbus, Ohio. Powered by thousands of Blackwell GPUs, 400GbE and NDR InfiniBand networking, and 10s of PB of VAST storage, our high-density CPU nodes and advanced liquid-cooling solutions deliver unparalleled performance for AI workloads. Discover how Supermicro’s innovative infrastructure is shaping the future of AI.

⏰ Timestamps

0:00 Introduction

1:36 Cooling an AI GPU Cluster

2:13 Powering an AI Cluster

2:51 AI Cluster Facility Design Cold/Hot Aisle

3:20 Storage

4:11 Networking

5:45 Supermicro NVIDIA HGX B200 8 GPU servers

9:59 NVIDIA DGX GB200 NVL72 14:27 Final Words and a Hint at the Next Tour

+ Related content:

🙂

☑️ #87 Aug 20, 2025

GPUs, ASICs, and memory trends

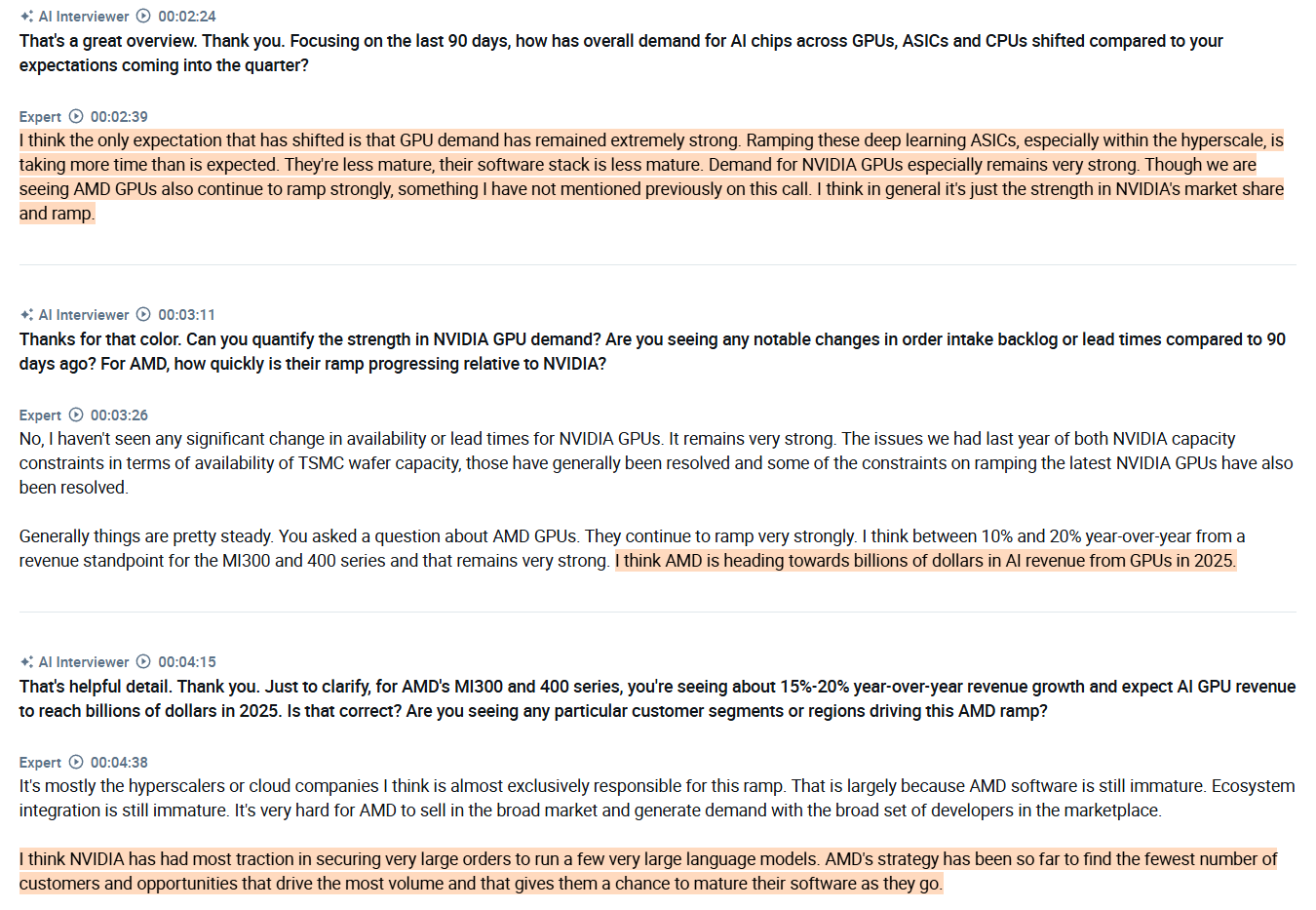

@AlphaSenseInc: Interview with an industry expert about insights into GPUs, ASICs, and memory trends ( $NVDA, $AMD, $MU, $GOOGL ):

The expert emphasizes that GPU demand, especially for $NVDA, remains extremely strong and unchanged in terms of availability or lead times. He stresses that $AMD is also ramping quickly, with the MI300 and 400 series GPUs growing at around 15–20% year-over-year; however, adoption is concentrated almost entirely among hyperscalers because $AMD's software and ecosystem are still immature.

According to the expert, $NVDA is maintaining pricing very firm, with little to no discounting, due to extremely strong demand, while deep learning ASIC start-ups are offering discounts in the 10–20% range, primarily targeting non-hyperscaler and international customers seeking to build domestic AI infrastructure. He stresses that this discounting is less about performance and more about positioning on price, as these smaller players try to carve out market share outside the core hyperscaler segment.

In relation to ASICs, expert explained that hyperscalers build their own ASICs, such as $GOOGL's TPU, almost exclusively for internal use, while start-ups target the merchant market. However, because hyperscalers rely on in-house chips or established GPU providers like $NVDA and $AMD, the addressable market for these start-ups is shrinking. He stresses that this makes opportunities increasingly narrow, especially as $NVDA and $AMD combine hardware strength with massive investments in software and ecosystem support.

The expert emphasizes that HBM remains one of the most significant bottlenecks in the AI semiconductor supply chain, with only three major vendors; SK hynix, Samsung, and Micron producing HBM3 and HBM3E. He notes that supply from all three sources is fully allocated through 2025, and lead times for new demand are now approaching 12 months. These suppliers prioritize $NVDA first, then the hyperscalers, which leaves start-up ASIC vendors struggling to secure capacity.

He explains that HBM3 pricing has already increased by 20–30% year-over-year, driven by both extreme demand and the fact that each new GPU or ASIC generation requires significantly more HBM content. Looking forward, he expects continued upward pressure on pricing as vendors invest heavily in advancing to HBM4, which will double I/O bandwidth and density.

The expert stresses that while the overall AI semiconductor market is expanding rapidly, $AMD has been the clear share gainer, now reaching an estimated 5%–10% share and around $7–8 billion in GPU revenue. He emphasizes that this hasn’t reduced $NVDA's revenue but has modestly slowed its growth as $AMD ramps new products.

🙂

☑️ #86 Aug 20, 2025

META: Our data centers with AI-optimized design, the first of which is slated to come online in 2026

about.fb.com: [Excerpt] Meta’s Kansas City Data Center and Upcoming AI-Optimized Data Centers

Developing the Next Generation of Our Data Centers

The grand opening of this data center represents a pivotal moment as we invest in scalable infrastructure optimized for our global work in AI. Our data centers with AI-optimized design, the first of which is slated to come online in 2026, will blend high-performance and flexibility with a mix of custom hardware solutions. These data centers also leverage AI solutions to prioritize resource efficiency as we develop new technologies and expand our work building the future of human connection.

+ Related content:

facebook.com: Kansas City, we are online!

We are excited to announce the Meta Kansas City Data Center is now serving traffic. That means this data center is now part of our global infrastructure that brings our technologies and programs to life, making it possible to connect billions of people worldwide.

Since breaking ground in 2022, we’ve been proud to call Kansas City, Missouri home. We chose Kansas City because it offered excellent infrastructure, a robust electrical grid, a strong pool of talent for construction and operations jobs, and incredible community partners. Construction of the Kansas City Data Center has resulted in an average of 1,500 skilled trade workers on site at peak, and the data center will support more than 100 operational jobs once completed. This facility represents an investment of more than $1 billion in the state of Missouri.

From the beginning, we have been committed to being an active partner in the Kansas City community to support its long-term vitality. Since we broke ground in 2022, we have enjoyed a strong partnership with this community, providing more than $1 million in direct funding to Clay County, Platte County and City of Kansas City Missouri schools and nonprofits, including through our annual Data Center Community Action Grants.

Meta approaches data center sustainability from the ground up — from design and construction to operations. We prioritize water stewardship with our Kansas City Data Center using a cooling technology that is significantly more water efficient than the industry standard. Plus, the data center captures and infiltrates rainwater on site and incorporates water-saving fixtures and technologies within data center facilities.

Like at all of our data centers, the Kansas City Data Center’s electricity use is matched with 100% clean and renewable energy. And, we’re proud to say that our data center buildings are LEED Gold certified — achieving very high standards for energy efficiency, water conservation, supply chain responsibility and recycling.

Thank you to the State of Missouri, Kansas City, Mayor Quinton Lucas, Missouri Partnership, Kansas City Area Development Council, Evergy, Missouri Department of Economic Development, Turner Construction - Kansas City, and all of our local partners for helping us get to today. We are extremely proud to be part of the Kansas City community and look forward to continuing to strengthen our partnership for years to come.

Here's what people are saying about today's opening event:

“Meta’s investment in Kansas City is a clear signal that our city is a place where innovation, talent, and community come together. We look forward to working with Meta to ensure this development integrates well with our community priorities and delivers meaningful benefits for Kansas City residents." - Mayor Quinton Lucas

“Meta’s grand opening in Kansas City is a powerful testament to Missouri’s ability to attract and support the most innovative companies in the world. This investment brings transformative technology, high-quality jobs, and lasting economic impact to our state, and we are proud that Meta chose Missouri as a key part of its future.” - Subash Alias, CEO, Missouri Partnership

🙂

☑️ #85 Aug 14, 2025

Intel loses key engineers to Samsung amid restructuring and project cancellations

biz.chosun.com: [Excerpt] Engineers flock to competitors as Intel's financial woes deepen and projects are shelved.

Industry observers predict that Intel will continue to see personnel losses as it cancels or reduces previously announced foundry-related investments and plans for new factories. CEO Pat Gelsinger previously noted, “Unfortunately, the investment in production capacity that Intel made over the past few years has been an excessive investment that vastly exceeds demand, and it cannot be considered prudent,” indicating that the direction of next-generation technology development will be adjusted.

However, advice has also emerged from within and outside Samsung to focus on 'filtering out the best' according to the division’s manpower demands. A senior official at Samsung commented, “Instead of blindly scouting based solely on the Intel brand, a careful process to select individuals suited to the needed processes is essential,” adding that “since Intel has a rich talent pool with extensive research in all semiconductor processes, we should seek ways for large-scale workforce reductions to benefit Samsung.”

+ Related content:

newsroom.intel.com (update; 8/22/25): Intel and Trump Administration Reach Historic Agreement to Accelerate American Technology and Manufacturing Leadership.

The government’s equity stake will be funded by the remaining $5.7 billion in grants previously awarded, but not yet paid, to Intel under the U.S. CHIPS and Science Act and $3.2 billion awarded to the company as part of the Secure Enclave program. Intel will continue to deliver on its Secure Enclave obligations and reaffirmed its commitment to delivering trusted and secure semiconductors to the U.S. Department of Defense. The $8.9 billion investment is in addition to the $2.2 billion in CHIPS grants Intel has received to date, making for a total investment of $11.1 billion

sec.gov (update; 8/25/25): [Excerpt] August 25, 2025 - 8-K: Current report

1.01 - Entry into a Material Definitive Agreement

3.02 - Unregistered Sales of Equity Securities

7.01 - Regulation FD Disclosure

8.01 - Other Events (The registrant can use this Item to report events that are not specifically called for by Form 8-K, that the registrant considers to be of importance to security holders.)

9.01 - Financial Statements and Exhibits

🙂

☑️ #84 Aug 14, 2025

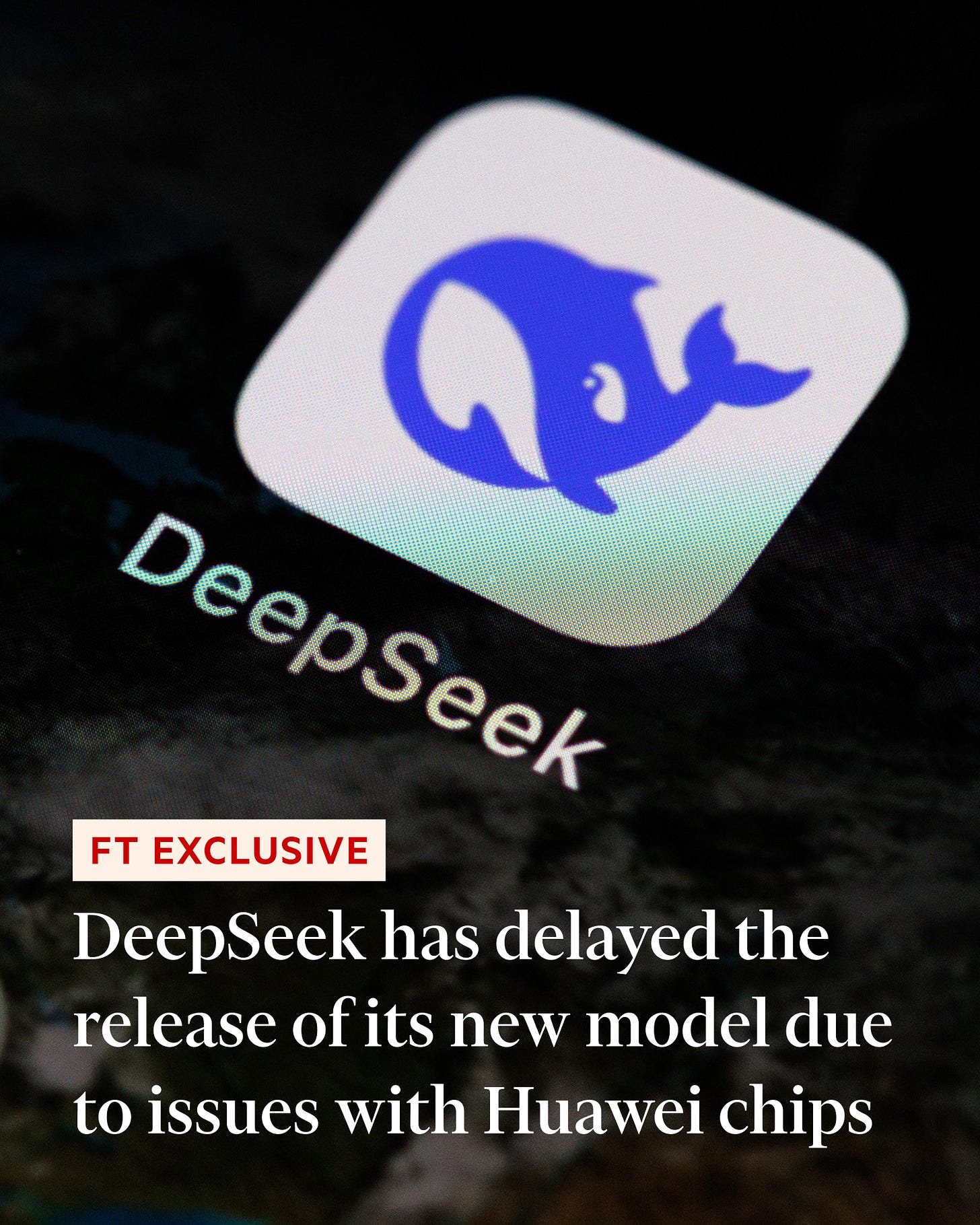

Difficulties of training the start-up’s latest system with Huawei’s semiconductors highlight dependence on Nvidia

@FT: The Chinese AI start-up encountered persistent technical issues during its R2 training process using Huawei’s Ascend chips, highlighting the limits of Beijing’s push to replace US technology https://www.ft.com/content/eb984646-6320-4bfe-a78d-a1da2274b092

🙂

☑️ #83 Aug 12, 2025

AI Computing

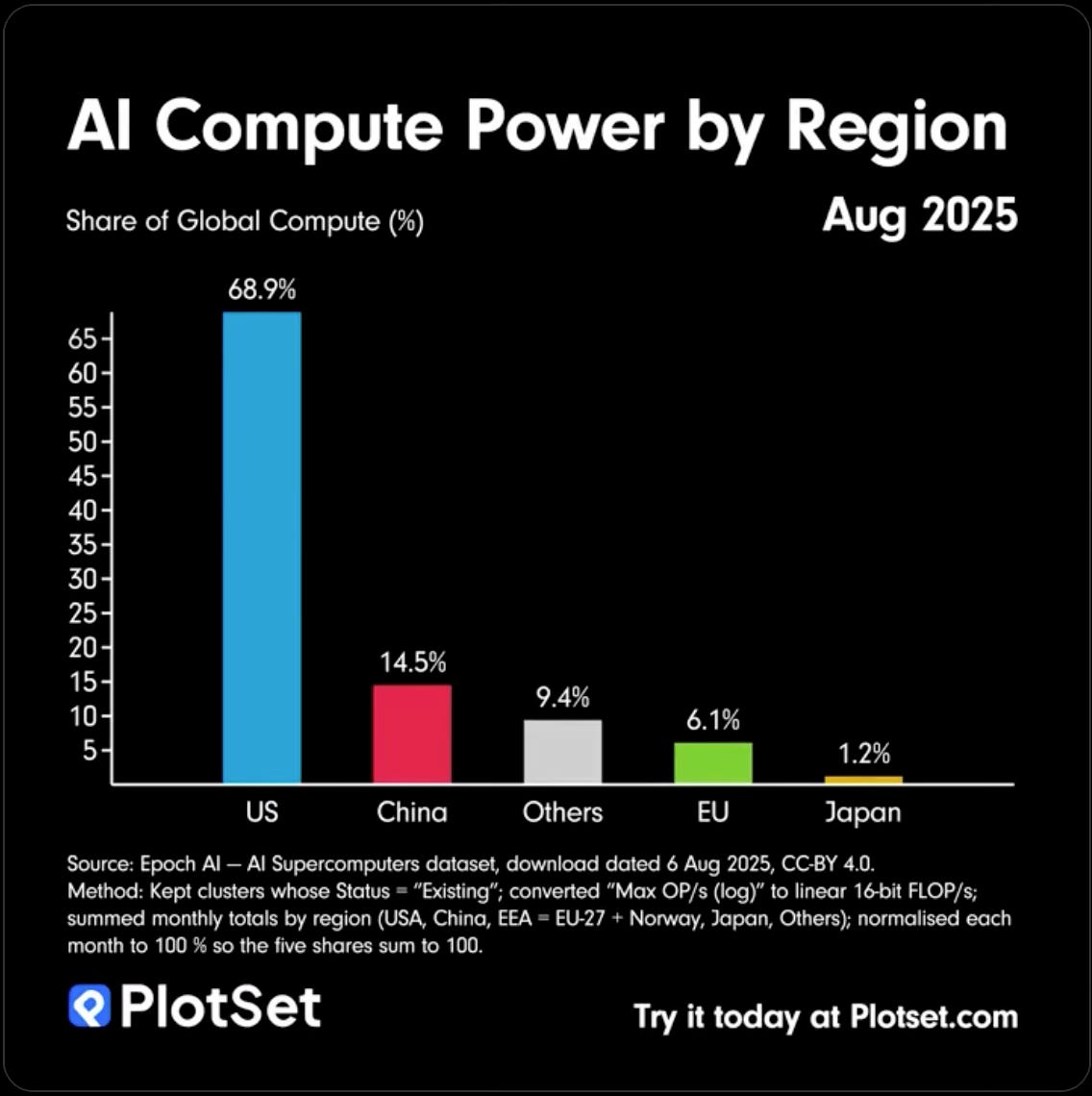

@JamesEagle17: I had to triple-check this. It's common knowledge that DeepSeek and Chinese AI is catching up with the US. But 5 years of supercomputer data show that the opposite is actually happening. In fact, the US is smashing it out the par.

+ Related content:

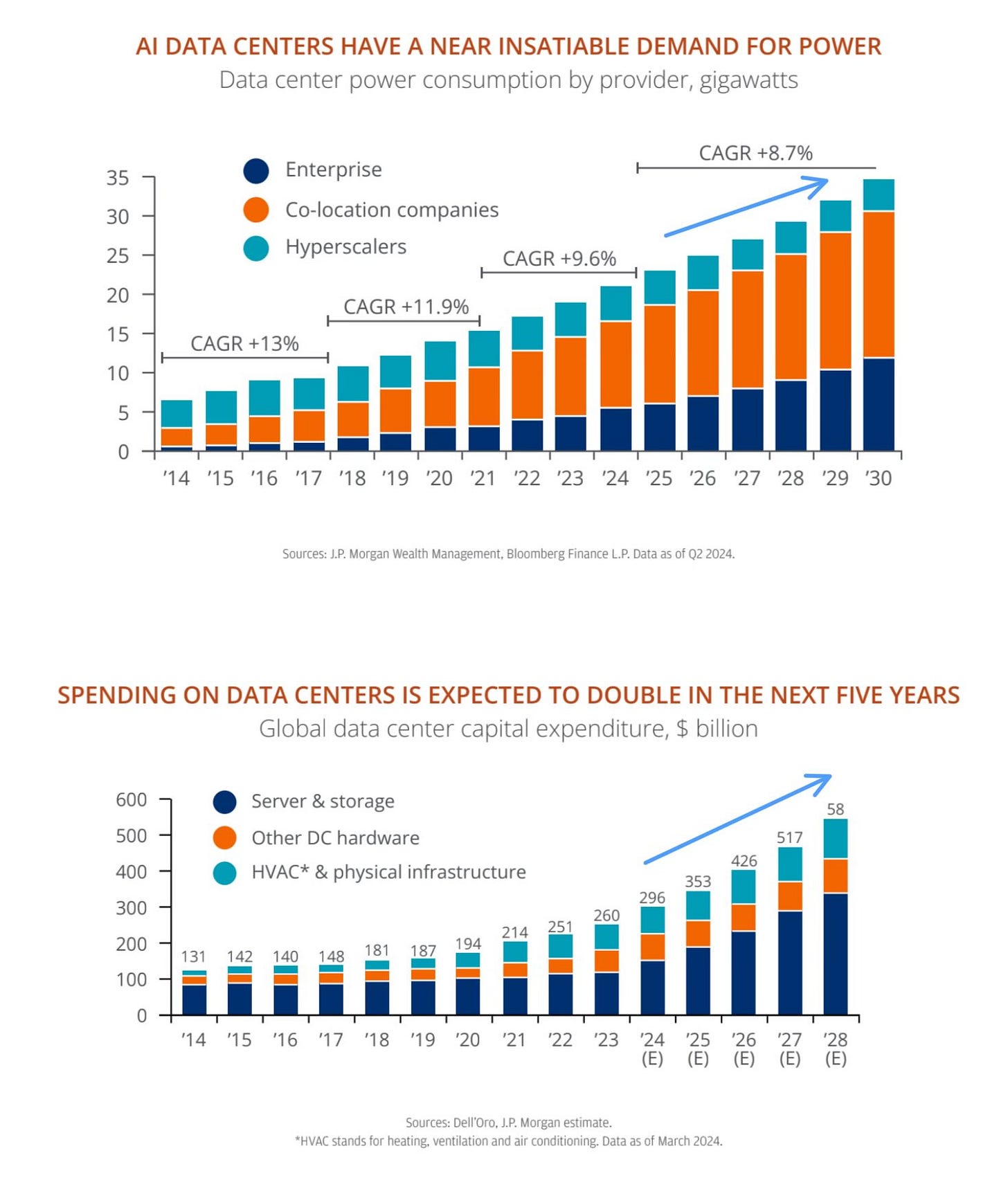

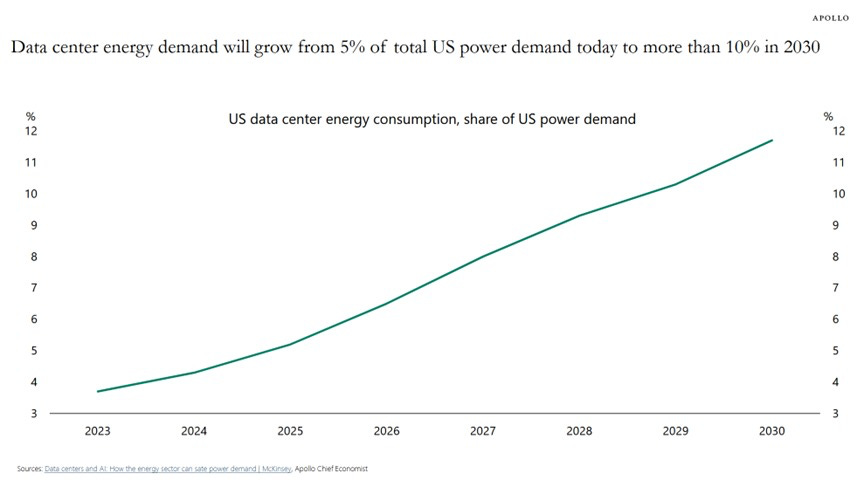

@KobeissiLetter: Shocking stat of the day:

Data center energy consumption has reached a record 5% of total US power demand.

This increase has been driven by the rapid adoption of digitalization and AI technologies.

This percentage is now estimated to more than DOUBLE over the next 5 years, according to McKinsey.

Additionally, data center load is set to account for up to 40% of net new demand added until 2030.

Overall, electricity demand for data centers is expected to grow at a compounded annual growth rate of +23% through 2030.

Energy will soon be the AI bottleneck

🙂

☑️ #82 Aug 11, 2025

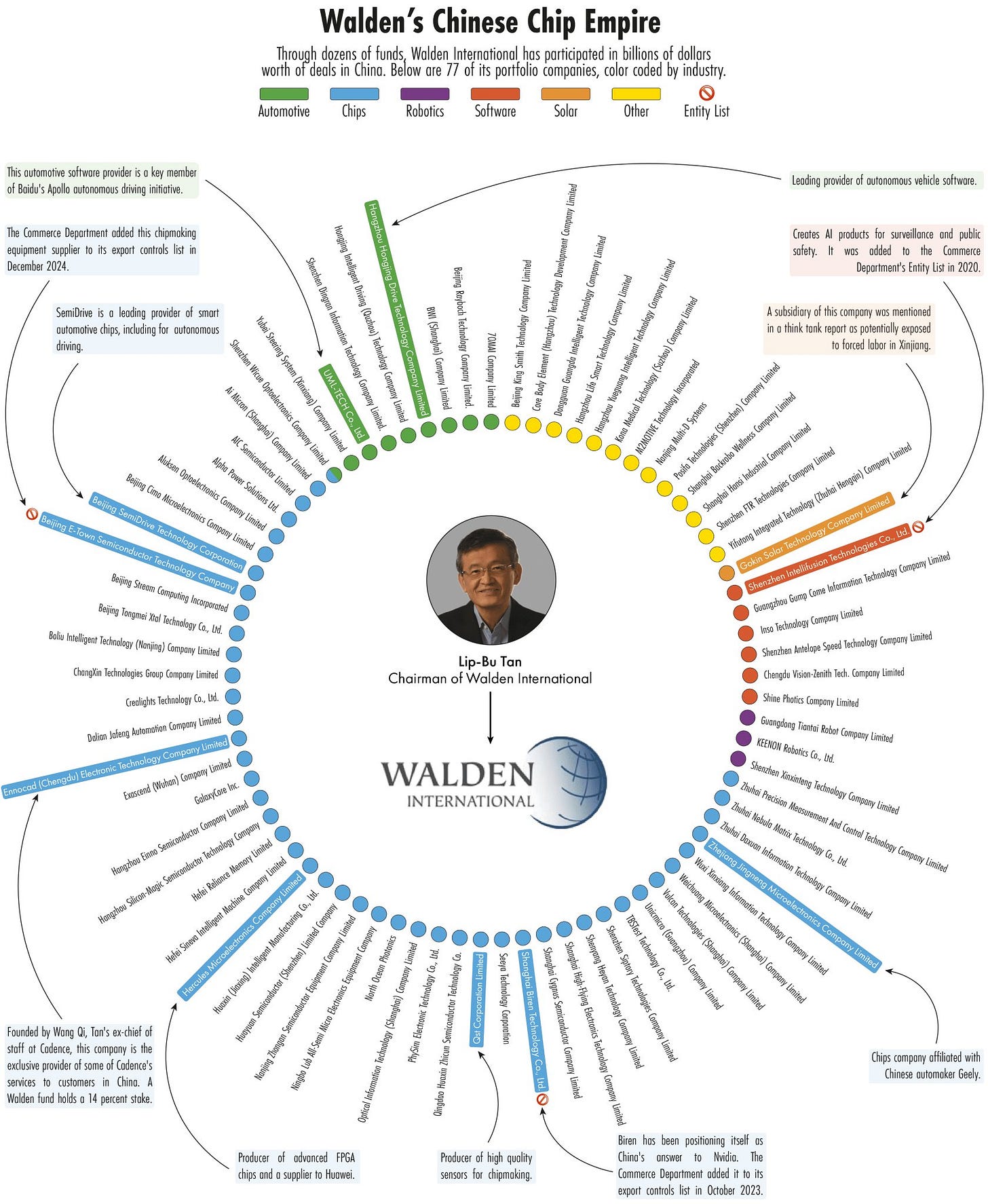

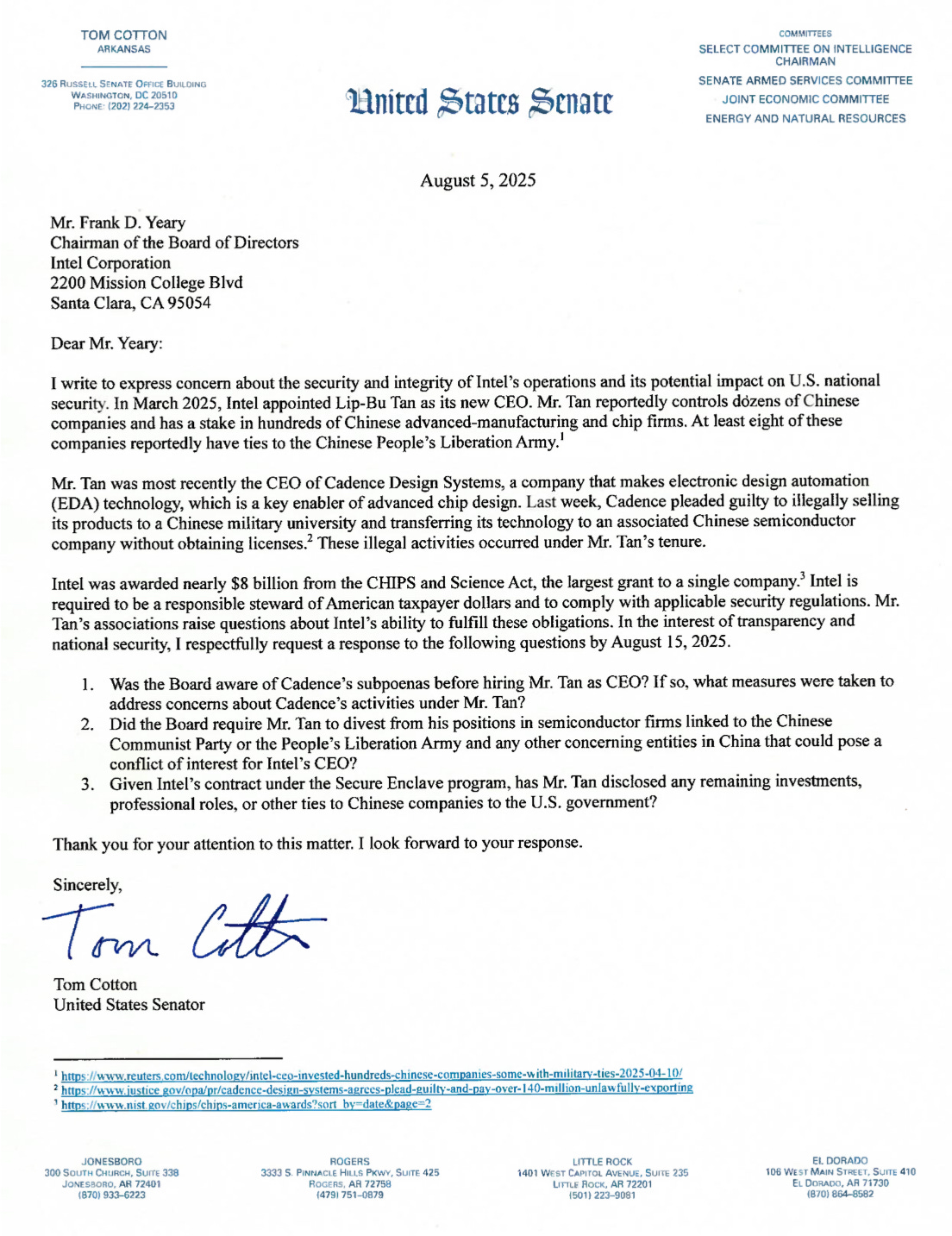

Lip-Bu Tan moment: Walden’s Chinese Chip Empire

Intel CEO said to visit visited White House this MondayReporting live from Washington, DC (just dropped my son off for law school) and got this money shot of the Intel CEO (and @cnbc) walking in to the Whitehouse! $INTC

+ Related content:

@economicsnoah (8/7/25): In March, @eliotcxchen and I were the first to extensively report the scale of Intel CEO Lip-Bu Tan's investments in China. We tracked at least 77 portfolio companies of Tan's VC Walden International. Today President Trump called for Tan's resignation, calling him "CONFLICTED.

@SenTomCotton (8/6/25): The new CEO of @intel reportedly has deep ties to the Chinese Communists. U.S. companies who receive government grants should be responsible stewards of taxpayer dollars and adhere to strict security regulations. The board of @Intel owes Congress an explanation.

🙂

☑️ #81 Aug 8, 2025

Lip-Bu Tan moment

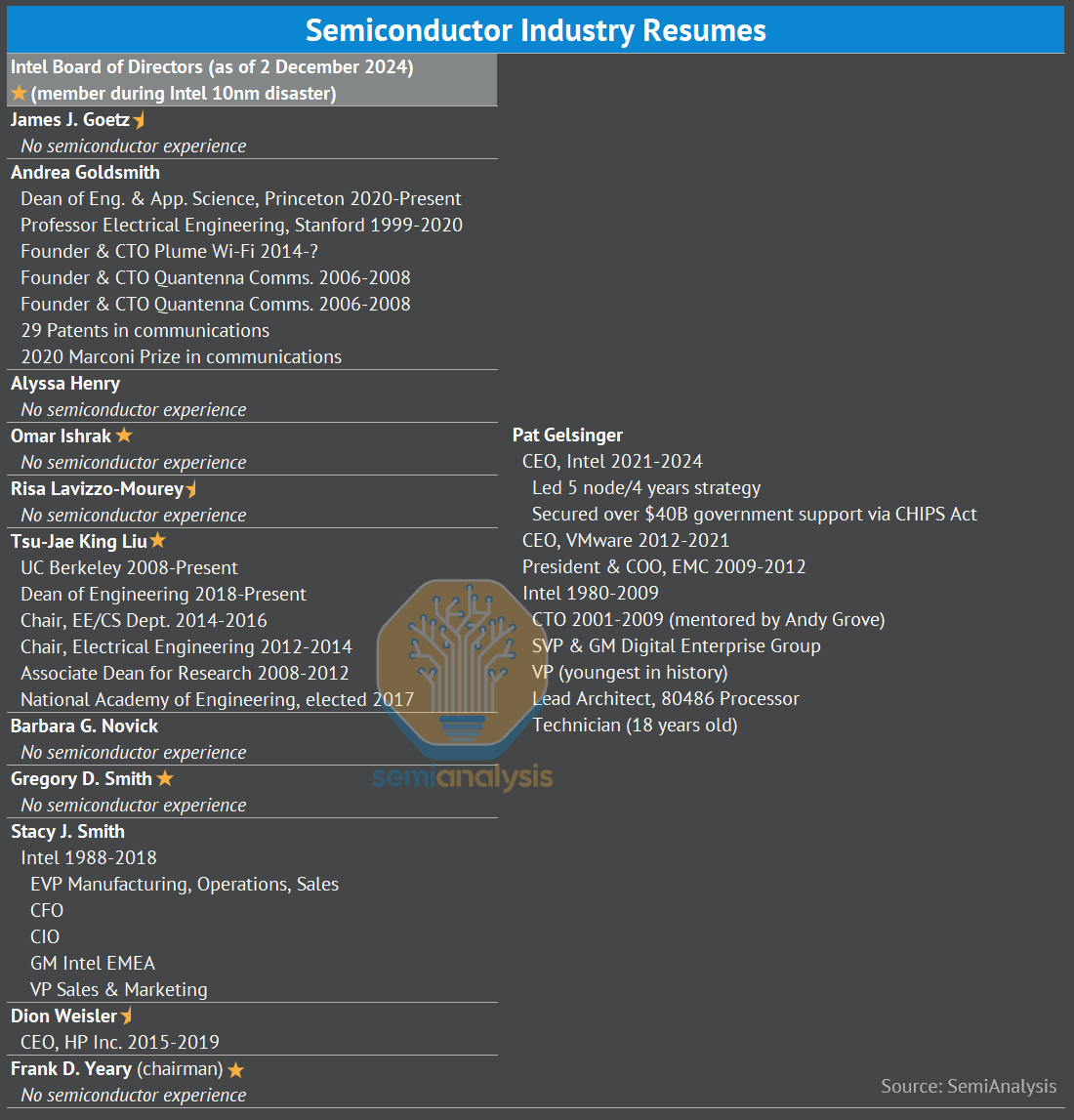

@SemiAnalysis_: Intel Board Issues In light of the recent journal post about the board we cannot emphasis this enough - Yeary is not fit for the job in the slightest. We wrote about how the Intel board systematically destroyed America's first great semiconductor company over a decade of mismanagement. Yeary was there the entire time.

It is Yeary not LBT who needs to leave Intel. Reminder for everyone, Yeary wants to spin the foundry, while he has no semiconductor experience and was on the board for the entire 10nm debacle. read more:

https://semianalysis.com/2024/12/09/intel-on-the-brink-of-death/

+ Related content:

newsroom.intel.com (8/7/25): [Excerpt] My commitment to you and our company. The following note from Lip-Bu Tan was sent to all Intel Corporation employees on August 7, 2025:

Dear Team,

I know there has been a lot in the news today, and I want to take a moment to address it directly with you.

Let me start by saying this: The United States has been my home for more than 40 years. I love this country and am profoundly grateful for the opportunities it has given me. I also love this company. Leading Intel at this critical moment is not just a job – it’s a privilege. This industry has given me so much, our company has played such a pivotal role, and it's the honor of my career to work with you all to restore Intel's strength and create the innovations of the future. Intel's success is essential to U.S. technology and manufacturing leadership, national security, and economic strength. This is what fuels our business around the world. It’s what motivated me to join this team, and it’s what drives me every day to advance the important work we’re doing together to build a stronger future.

There has been a lot of misinformation circulating about my past roles at Walden International and Cadence Design Systems. I want to be absolutely clear: Over 40+ years in the industry, I’ve built relationships around the world and across our diverse ecosystem – and I have always operated within the highest legal and ethical standards. My reputation has been built on trust – on doing what I say I’ll do, and doing it the right way. This is the same way I am leading Intel.

newsroom.intel.com (8/7/25): [Excerpt] Our commitment to advancing U.S. national and economic security.

Intel, the Board of Directors, and Lip-Bu Tan are deeply committed to advancing U.S. national and economic security interests and are making significant investments aligned with the President's America First agenda. Intel has been manufacturing in America for 56 years. We are continuing to invest billions of dollars in domestic semiconductor R&D and manufacturing, including our new fab in Arizona that will run the most advanced manufacturing process technology in the country, and are the only company investing in leading logic process node development in the U.S. We look forward to our continued engagement with the Administration.

newsroom.intel.com (7/24/25): Lip-Bu Tan: Steps in the Right Direction.

3. Refine Our AI Strategy.

We will focus our AI efforts on developing a cohesive silicon, system and software stack strategy. In the past, we have approached AI with a traditional, silicon- and training-centric mindset. This needs to change – and we have already started incubating new capabilities while attracting new talent.

As we make this shift, we will concentrate our efforts on areas we can disrupt and differentiate, like inference and agentic AI. Our starting point will be emerging AI workloads – then we will work backward to design software, systems and silicon that enable the best customer outcomes. We have a lot of work underway, and will be sharing more about our plans in the coming months.

🙂

☑️ #80 Aug 5, 2025

A possible leak of trade secrets

cnbc.com: [Excerpt] World’s largest chipmaker TSMC says it has discovered potential trade secret leaks.

Taiwan Semiconductor Manufacturing Co. said it had detected “unauthorized activities” that led to the discovery of potential trade secret leaks.

TSMC said it has taken action against the personnel involved and launched legal proceedings.

The world’s biggest semiconductor manufacturer dominates the market for the manufacturing of the most advanced chips and counts major firms like Apple and Nvidia as clients.

+ Related content:

asia.nikkei.com: TSMC fires workers for breaching data rules on cutting-edge chip tech.

TAIPEI -- Taiwan Semiconductor Manufacturing Co. has fired several employees for violating rules on obtaining sensitive information related to cutting-edge chip technology and initiated legal action over the matter, Nikkei Asia learned

esg.tsmc.com (7/14/22): TSMC Establishes the "Trade Secret Registration System Alumni Association" to Comprehensively Upgrade Industrial Competitiveness.

TSMC's "Supply Chain Trade Secret Management Sharing" Implementation Framework.

28-nanometer fabrication process (2018)

It is not the first time that TSMC has been the target for potential theft. In 2018, a Taiwanese court indicted a former employee for copying trade secretes related to the 28-nanometer fabrication process, with intent to transfer them to a semiconductor company in mainland China.

🙂

☑️ #79 Jul 30, 2025

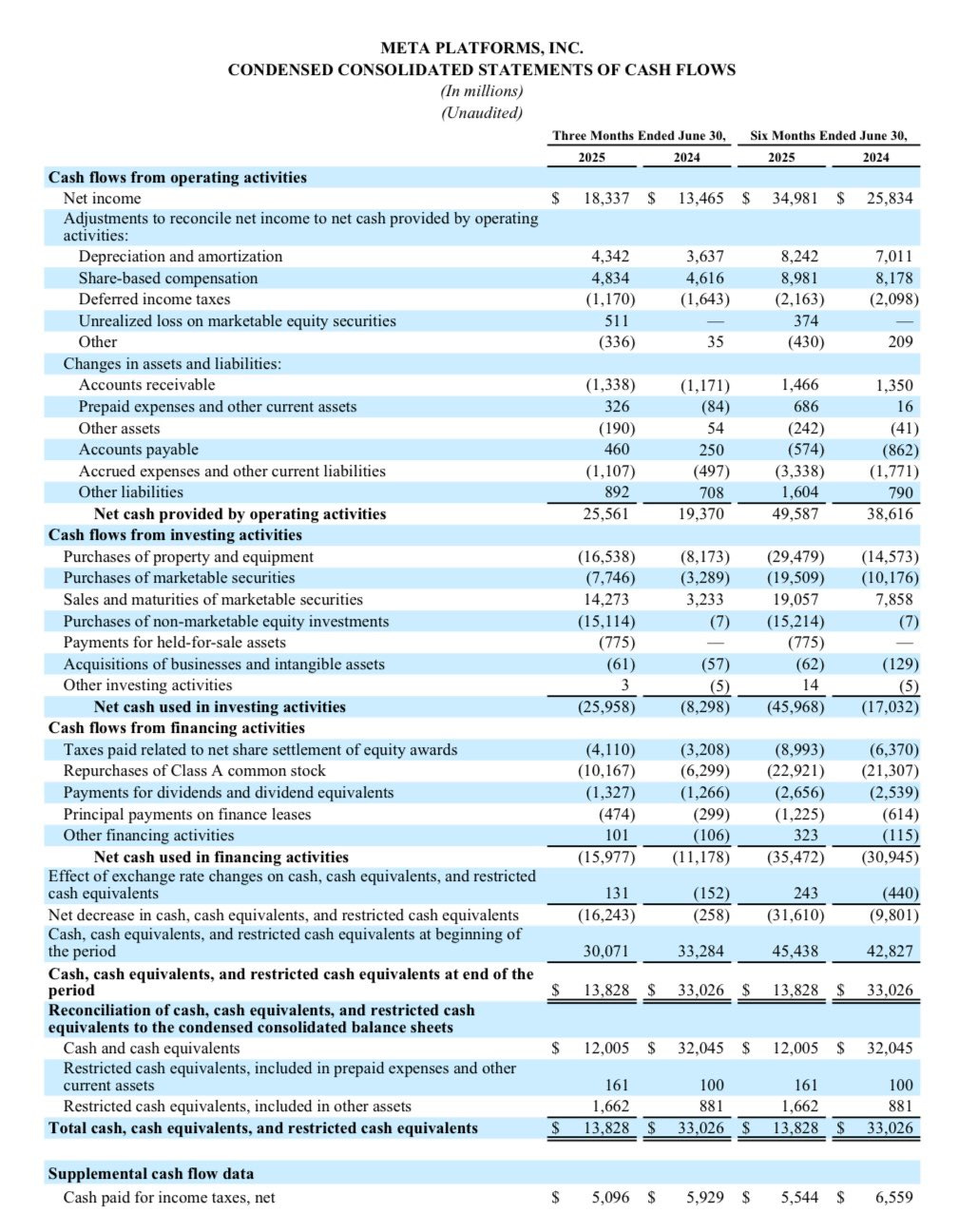

META: If the true economic life on its GPU’s is actually 2-3 years, most of its “profits” are materially overstated

@RealJimChanos: It looks like $META’s depreciable life on its capital base ($210B at 6/30/25) was 11-12 years, as of the 2Q. If the true economic life on its GPU’s is actually 2-3 years, most of its “profits” are materially overstated.

(2) Put another way, $META increased its capital base 45% Y/Y in the 2Q, from $145B to $210B, or $65B. Yet annualized D&A increased only $2.8B ($700M x 4) in the recent 2Q. That’s 22-year life, on the margin. Whatever you think of the stock price here-that’s patently absurd.

(3) I would also note that $META is now growing its capital base more than 2x its revenues, 45% vs 21%. And for those of you posting/asking, we have NO short position/recommendation in $META.

+ Related content:

investor.atmeta.com (7/30/25): Meta Reports Second Quarter 2025 Results.

@Benzinga (7/30/25): Meta Platforms Earnings Call Q2 2025 | $META | 🔴 WATCH LIVE.

@Convertbond (7/30/25): Zuck lost $4B on a Metaverse bet, now he's cheating on the AI capex - balance sheet - income statement game?

Listen to @RealJimChanos right now.

🙂

☑️ #78 Jul 23, 2025

Oklo and Liberty Energy partnering to combine nuclear, gas, and grid services into a single solution for data centers and industrial customers

@oklo: Oklo and Liberty Energy partnering to combine nuclear, gas, and grid services into a single solution for data centers and industrial customers.

A next-generation energy model backed by deep operational experience and built for scale.

+ Related content:

oklo.com: [Excerpt] Oklo and Liberty Energy Launch Next-Generation Integrated Power Solution.

Liberty’s Forte natural gas power generation and load management solution will provide initial reliable primary power and flexible energy services, along with future grid management services focused on optimization and resiliency. As Oklo’s Aurora powerhouses come online, they will be integrated to provide clean, continuous baseload energy, complementing Liberty’s natural gas power.

🙂

☑️ #77 Jul 14, 2025

Prometheus & Hyperion Data Centers

facebook.com > Mark Zuckerberg: For our superintelligence effort, I'm focused on building the most elite and talent-dense team in the industry. We're also going to invest hundreds of billions of dollars into compute to build superintelligence. We have the capital from our business to do this.

SemiAnalysis just reported that Meta is on track to be the first lab to bring a 1GW+ supercluster online.

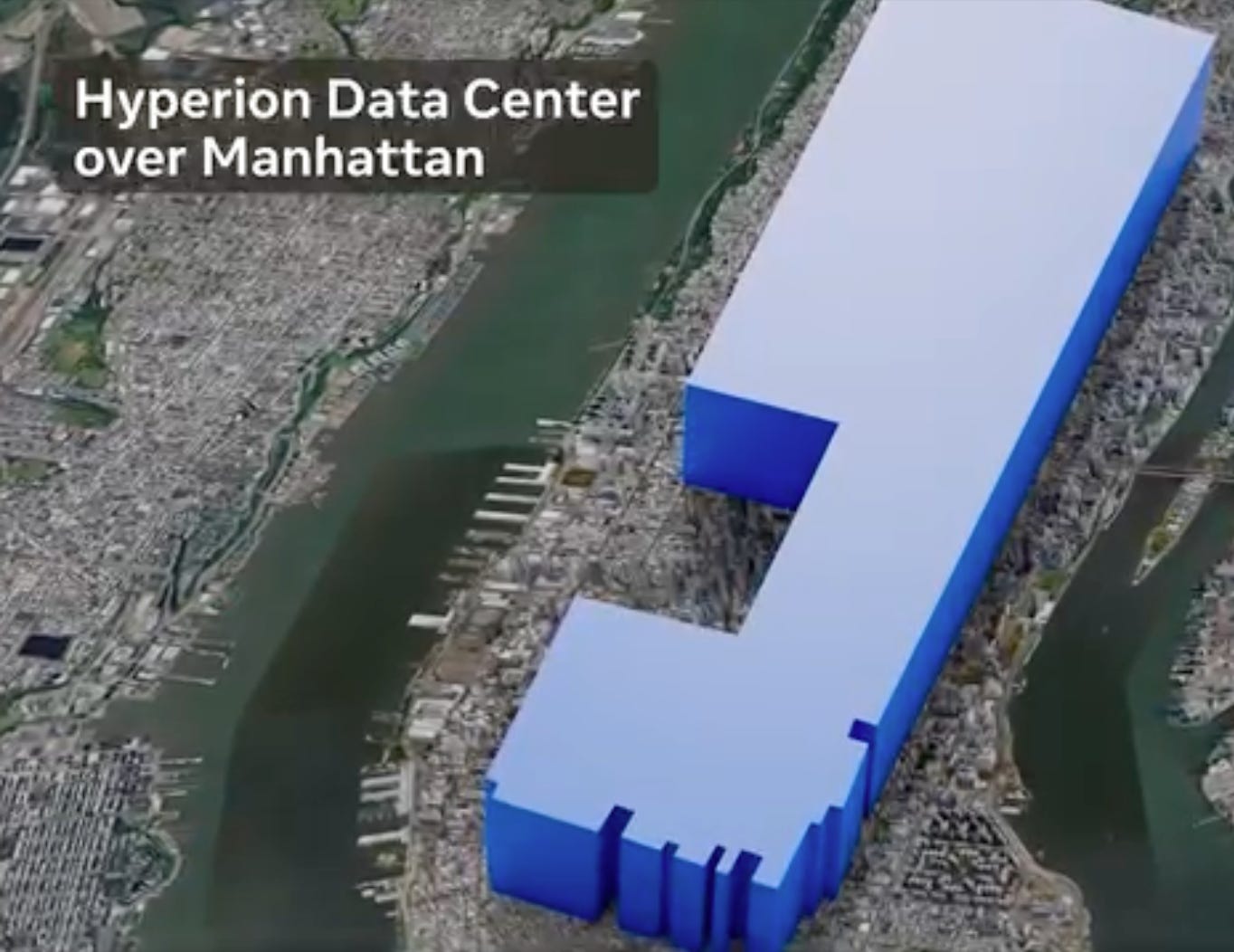

We're actually building several multi-GW clusters. We're calling the first one Prometheus and it's coming online in '26. We're also building Hyperion, which will be able to scale up to 5GW over several years. We're building multiple more titan clusters as well. Just one of these covers a significant part of the footprint of Manhattan.

Meta Superintelligence Labs will have industry-leading levels of compute and by far the greatest compute per researcher. I'm looking forward to working with the top researchers to advance the frontier!

+ Related content:

datacenters.atmeta.com: Data Centers > Locations

datacenters.atmeta.com: Blog

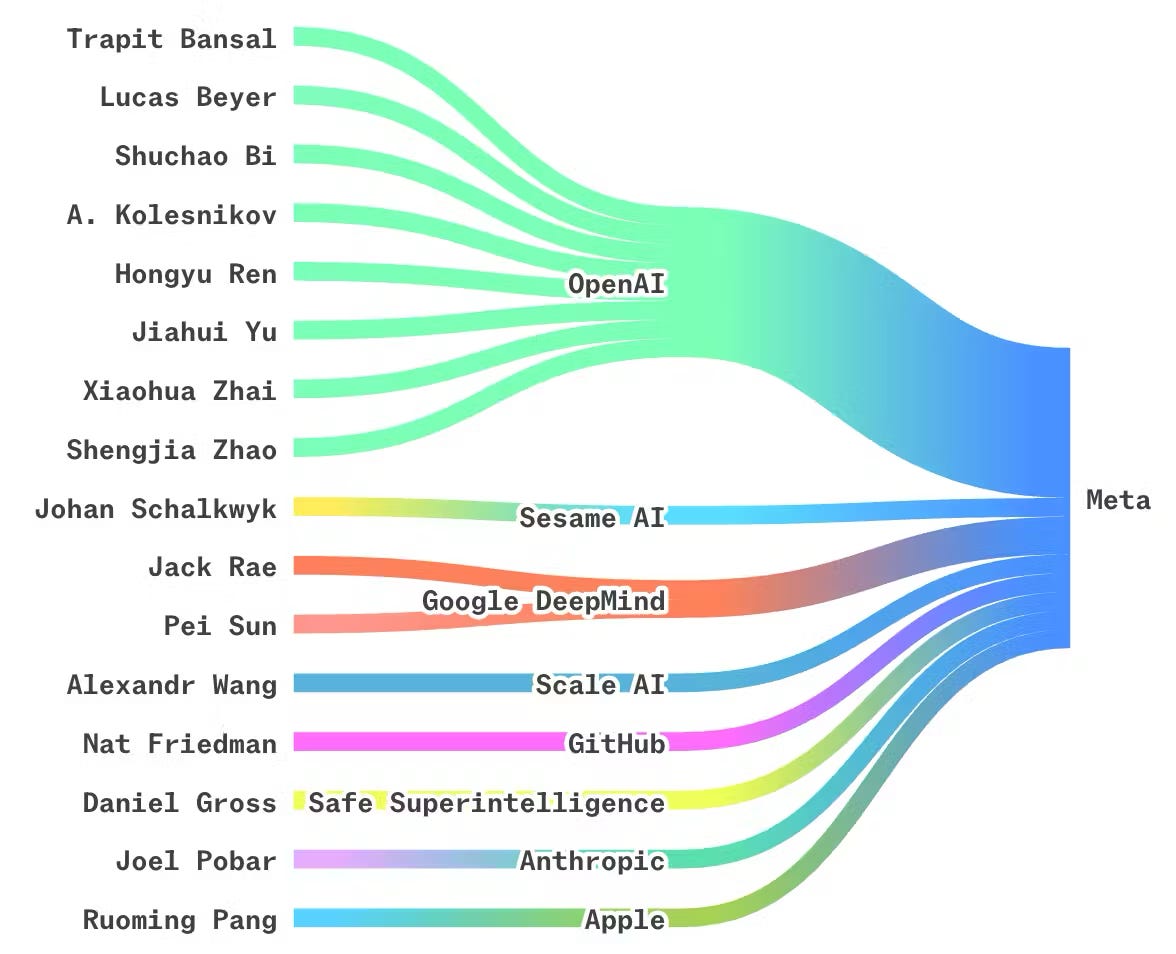

bloomberg.com (6/30/25) via sherwood.news (7/8/25): [Excerpt] Meta poaches top Apple AI executive for its superintelligence group.

cnbc.com(6/30/25): Here is Zuckerberg’s full internal memo released Monday:

As the pace of AI progress accelerates, developing superintelligence is coming into sight. I believe this will be the beginning of a new era for humanity, and I am fully committed to doing what it takes for Meta to lead the way. Today I want to share some details about how we’re organizing our AI efforts to build towards our vision: personal superintelligence for everyone.

We’re going to call our overall organization Meta Superintelligence Labs (MSL). This includes all of our foundations, product, and FAIR teams, as well as a new lab focused on developing the next generation of our models.

Alexandr Wang has joined Meta to serve as our Chief AI Officer and lead MSL. Alex and I have worked together for several years, and I consider him to be the most impressive founder of his generation. He has a clear sense of the historic importance of superintelligence, and as co-founder and CEO he built ScaleAI into a fast-growing company involved in the development of almost all leading models across the industry.

Nat Friedman has also joined Meta to partner with Alex to lead MSL, heading our work on AI products and applied research. Nat will work with Connor to define his role going forward. He ran GitHub at Microsoft, and most recently has run one of the leading AI investment firms. Nat has served on our Meta Advisory Group for the last year, so he already has a good sense of our roadmap and what we need to do.

We also have several strong new team members joining today or who have joined in the past few weeks that I’m excited to share as well:

Trapit Bansal -- pioneered RL on chain of thought and co-creator of o-series models at OpenAI.

Shuchao Bi -- co-creator of GPT-4o voice mode and o4-mini. Previously led multimodal post-training at OpenAI.

Huiwen Chang -- co-creator of GPT-4o’s image generation, and previously invented MaskGIT and Muse text-to-image architectures at Google Research

Ji Lin -- helped build o3/o4-mini, GPT-4o, GPT-4.1, GPT-4.5, 4o-imagegen, and Operator reasoning stack.

Joel Pobar -- inference at Anthropic. Previously at Meta for 11 years on HHVM, Hack, Flow, Redex, performance tooling, and machine learning.

Jack Rae -- pre-training tech lead for Gemini and reasoning for Gemini 2.5. Led Gopher and Chinchilla early LLM efforts at DeepMind.

Hongyu Ren -- co-creator of GPT-4o, 4o-mini, o1-mini, o3-mini, o3 and o4-mini. Previously leading a group for post-training at OpenAI.

Johan Schalkwyk -- former Google Fellow, early contributor to Sesame, and technical lead for Maya.

Pei Sun -- post-training, coding, and reasoning for Gemini at Google Deepmind. Previously created the last two generations of Waymo’s perception models.

Jiahui Yu -- co-creator of o3, o4-mini, GPT-4.1 and GPT-4o. Previously led the perception team at OpenAI, and co-led multimodal at Gemini.

Shengjia Zhao -- co-creator of ChatGPT, GPT-4, all mini models, 4.1 and o3. Previously led synthetic data at OpenAI.

I’m excited about the progress we have planned for Llama 4.1 and 4.2. These models power Meta AI, which is used by more than 1 billion monthly actives across our apps and an increasing number of agents across Meta that help improve our products and technology. We’re committed to continuing to build out these models.

In parallel, we’re going to start research on our next generation of models to get to the frontier in the next year or so. I’ve spent the past few months meeting top folks across Meta, other AI labs, and promising startups to put together the founding group for this small talent-dense effort. We’re still forming this group and we’ll ask several people across the AI org to join this lab as well.

Meta is uniquely positioned to deliver superintelligence to the world. We have a strong business that supports building out significantly more compute than smaller labs. We have deeper experience building and growing products that reach billions of people. We are pioneering and leading the AI glasses and wearables category that is growing very quickly. And our company structure allows us to move with vastly greater conviction and boldness. I’m optimistic that this new influx of talent and parallel approach to model development will set us up to deliver on the promise of personal superintelligence for everyone.

We have even more great people at all levels joining this effort in the coming weeks, so stay tuned. I’m excited to dive in and get to work.

🙂

☑️ #76 Jun 27, 2025

Mitsui Fudosan to set up chip-focused development hub in Tokyo

asia.nikkei.com: [Excerpt] Initiative aims to connect companies and talent spread across Japan.

TOKYO -- Mitsui Fudosan will establish a semiconductor-focused industrial development hub in Tokyo as soon as autumn, Nikkei has learned, bringing together companies and research organizations in the sector to help foster new businesses and develop talent.

The company plans to enlist 200 to 300 organizations as members in its new group within a few years. Mitsui Fudosan aims to create a new growth field by moving beyond its role as a real estate company to become an industrial developer that can lead in bringing together industry, government and academia.

+ Related content:

mitsuifudosan.co.jp: News

🙂

☑️ #75 Jun 18, 2025

Texas Instruments plans to invest more than $60 billion to manufacture billions of foundational semiconductors in the U.S.

ti.com: [Excerpt] Leading U.S. companies Apple, Ford, Medtronic, NVIDIA and SpaceX strengthen partnerships with TI to unleash the next era of American innovation.

Backed by the strength of TI’s U.S. manufacturing presence

TI is a driving force behind the return and expansion of semiconductor manufacturing in the U.S. The company’s more than $60 billion investment in U.S. manufacturing includes building and ramping seven, large-scale, connected fabs. Combined, these fabs across three manufacturing mega-sites in Texas and Utah will manufacture hundreds of millions of U.S.-made chips daily that will ignite a bold new chapter in American innovation.

Sherman, Texas: SM1, TI’s first new fab in Sherman will begin initial production this year, just three years after breaking ground. Construction is also complete on the exterior shell of SM2, TI’s second new fab in Sherman. Incremental investment plans include two additional fabs, SM3 and SM4, to support future demand.

Richardson, Texas: TI’s second fab in Richardson, RFAB2, continues to ramp to full production and builds on the company’s legacy of introducing the world’s first 300mm analog fab, RFAB1, in 2011.

Lehi, Utah: TI is ramping LFAB1, the company’s first 300mm wafer fab in Lehi. Construction is also well underway on LFAB2, TI’s second Lehi fab that will connect to LFAB1.

Learn more

Press kit (includes images, video b-roll and fact sheet)

Additional press kits (Sherman, Texas, Lehi, Utah, and Richardson, Texas)

+ Related content:

@TexasInstruments (6/17/25): Making progress at TI's 300mm wafer fab in Sherman, Texas.

🙂

☑️ #74 Jun 12, 2025

Micron and Trump Administration Announce Expanded U.S. Investments in Leading-Edge DRAM Manufacturing and R&D

investors.micron.com: [Excerpt] Micron Plans to Invest Approximately $200 Billion in Semiconductor Manufacturing and R&D in Idaho, New York and Virginia, Enhancing Domestic Memory Supply and Technology Leadership.

Micron to Build Second Leading-Edge Memory Manufacturing Fab in Idaho, Modernize and Expand Virginia Fab and Bring End-to-End High Bandwidth Memory (HBM) Manufacturing Capabilities to U.S. to Meet Anticipated AI-Driven Demand.

Micron’s approximately $200 billion broader U.S. expansion vision includes two leading-edge high-volume fabs in Idaho, up to four leading-edge high-volume fabs in New York, the expansion and modernization of its existing manufacturing fab in Virginia, advanced HBM packaging capabilities and R&D to drive American innovation and technology leadership. These investments are designed to allow Micron to meet expected market demand, maintain share and support Micron’s goal of producing 40% of its DRAM in the U.S. The co-location of these two Idaho fabs with Micron’s Idaho R&D operations will drive economies of scale and faster time to market for leading-edge products, including HBM.

+ Related content:

@MicronTechnology: Micron’s approximately $200B investment plans in U.S. manufacturing and R&D | Micron Technology.

🙂

☑️ #73 Jun 11, 2025

The core team behind Lamini x AMD

@HyperTechInvest: $AMD continues the talent grab The company just announced they acquired the core team behind Lamini

Lamini helps enterprises train LLMs based on their data

They have a lot of experience deploying models with $AMD accelerators

CEO Sharon Zhou worked as a machine learning product manager at Google and will become vice president of AI at $AMD

The startup raised $25 million last year from several investors, including Andrew Ng, Dropbox CEO Drew Houston, and Lip-Bu Tan, who later became Intel’s CEO

+ Related content:

pitchbook.com: Lamini ovreview. Who are Lamini’s investors?

Plug and Play Tech Center, Aglaé Ventures, AMD Ventures, Amplify Partners, are 5 of 17 investors who have invested in Lamini.

lamini.ai (9/26/23): [Excerpt] Lamini & AMD: Paving the Road to GPU-Rich Enterprise LLMs.

What’s more, with Lamini, you can stop worrying about the 52-week lead time for NVIDIA H100s. Using Lamini exclusively, you can build your own enterprise LLMs and ship them into production on AMD Instinct GPUs. And shhhh… Lamini has been secretly running on over one hundred AMD GPUs in production all year, even before ChatGPT launched. So, if you’ve tried Lamini, then you’ve tried AMD.

Now, we’re excited to open up LLM-ready GPUs to more folks. Our LLM Superstation is available both in the cloud and on-premise. It combines Lamini's easy-to-use enterprise LLM infrastructure with AMD Instinct™ MI210 and MI250 accelerators. It is optimized for private enterprise LLMs, built to be heavily differentiated with proprietary data.

🙂

☑️ #72 Jun 5, 2025

Oligopolies

@Invesquotes: The semiconductor industry is likely one where people tend to miss the forest for the trees

Sure, it's cyclical...but how many industries are there with so much long-term optionality where the supply chain is made up of oligopolies?

Not many is my guess!

🙂

☑️ #71 Jun 4, 2025

DRT vs Equinix

@dualedgeinvest: Coming Soon: DRT vs Equinix — A Tale of Two Data Center REITs.

One plays the long game with big acquisitions.

The other bets on steady, service-driven growth.

Different strategies. Different risks. Different rewards.

📡 Full deep-dive drops soon — subscribe now to catch it first - while it’s still free.

Which one are you betting on?

+ Related content:

DLR 0.00%↑ digitalrealty.com: [Excerpt] Where tomorrow comes together.

We’ve created the leading data center platform, a meeting place where companies, technology, and data come together to turn data into intelligence.

@digitalrealty (3/21/24): 20 years of Digital Realty.

EQIX 0.00%↑ equinix.com: [Excerpt] Equinix is the world’s digital infrastructure company.

We interconnect industry-leading organizations across a digital-first world, empowering them with trusted, secure and sustainable-minded infrastructure.

🙂

☑️ #70 Jun 2, 2025

IBM Unveils watsonx AI Labs: The Ultimate Accelerator for AI Builders, Startups and Enterprises in New York City

newsroom.ibm.com: [Excerpt] New AI initiative will co-create gen AI solutions with IBM clients, nurture NYC talent, advance enterprise AI implementations.

The lab benefits from – and helps fuel – New York City's status as a global AI hub. New York City has more than 2,000 AI startups, and its AI workforce grew by nearly 25% from 2022 to 2023, according to Tech:NYC. Since 2019, more than 1,000 AI-related companies in New York City have raised $27 billionin funding.

Also today, continuing its commitment to the local startup ecosystem, IBM announced it will acquire expertise and license technology from Seek AI, the New York City-based startup building AI agents to harness enterprise data. Seek AI helps businesses leverage agentic AI to mine value from enterprise data, and their expertise will serve as a foundational part of watsonx AI Labs.

+ Related content:

seek.ai (9/26/24): [Excerpt] How to Power Your Data Strategy with Agentic AI.

There’s been a lot of buzz and excitement recently about agentic AI, the next generation of artificial intelligence systems that are set apart from traditional AI by their ability to operate autonomously and take independent action to accomplish specific goals.

First things first: with all the noise out there about agents, how do we even define them? At its most basic interpretation, IBM defined an AI agent in simple terms as, “...a system or program that is capable of autonomously performing tasks on behalf of a user or another system by designing its workflow and utilizing available tools.”

🙂

☑️ #69 Jun 2, 2025

View on U.S. “reciprocal” tariffs and the FX impact on profitability?

pr.tsmc.com > TSMC Shareholders’ Meeting Resolutions > @x.com/rwang07: Memo note on TSMC Shareholders Meeting (Q&A) Hosted by CEO C.C. Wei on June 3, 2025.

The company has pledged to run on 100 % renewable energy by 2040. Has it invested in renewable‑energy projects?

Green power is in short supply in Taiwan. TSMC has considered building its own facilities but must follow legal requirements. For now, it invests in green power wherever possible. TSMC currently pays one of the country’s highest electricity rates and also helps its supply chain adopt green power. Taiwan will start collecting a carbon fee next year. We fully support renewable energy and will buy all the green power we can obtain.

Will Taiwan’s recent electricity‑price hikes delay the use of green power?

Costs will indeed rise, but TSMC is highly profitable and can absorb them. TSMC’s overseas fabs already usage rate of green power is reaching 100%, while usage in Taiwan lags badly. Whenever green power is available, we will definitely use it; if necessary, we will give the green‑energy sector long‑term commitments. We will disclose any sustainability information to investors and stakeholders. By 2030 we will disclose our annual renewable‑energy usage each year.

View on U.S. “reciprocal” tariffs and the FX impact on profitability?

Every 1 % appreciation of the NT dollar trims our gross margin by 0.4 %. An 8 % rise has reduced margin by a little over 3 %. We can only keep working hard and charge the value we deserve. Tariffs affect us somewhat but indirectly; lower demand hurts business, though AI demand is still greater than supply. We fear nothing except a global economic slump. We are maintaining 25 % growth, and earnings will hit a record high this year.

Will technology be stolen if we build fabs overseas?

No. Our know‑how comes from 10 000 R&D engineers and 10 000 production‑improvement staff. New technology is first installed in a “mother fab,” refined for six to twelve months, then moved into volume production. If we are confident about building fabs worldwide, we are confident the technology will not leak.

The return on TWSE:2330 trails NYSE:TSM by a wide margin?

The two prices always differ, but the trends are the same. Share prices are driven by supply and demand. In the near term we are not considering conversion between ADRs and common shares.

How are humanoid‑robot chip orders?

They have already started and are strong. We are also using AI to enhance our own capabilities—business is doing very well.

Could capacity become excessive?

Our customers are very aggressive. We have an excellent planning system to gauge real demand and have recently begun talking with our customers’ customers. US $40 billion in capex is huge—we act very carefully to avoid mistakes.

How do U.S. production lines replicate Taiwan fabs?

The Yields are identical; costs differ.

Capital expenditure and cash flow

Our dividend policy is stable and growing. We pay out 70 % of free cash flow as cash dividends. Once investments start paying back, dividends will rise faster.

Building a supercomputer with NVIDIA and Foxconn?

We are working closely with both companies.

Rapid AGI development and TSMC?

AI consists of software and hardware; software progress needs hardware support. All AI customers worldwide must cooperate with TSMC. We take responsibility for providing enough chips and are striving to meet their demand.

On Huawei's Chips

Frankly, we cannot claim complete control. We do our best to comply with laws and regulations and check whether the products we manufacture (for customers) violate any rules.

U.S. requests for technology transfer?

Our technology will not be stolen.

On Middle East and Rumor of Setting up Fab

We will not build fabs there. Competitors in that region are also our customers, and we will treat them well.

Depreciation (equipment) policy

TSMC's equipment is fully depreciated within five years. Depreciation is a non‑cash item and does not affect ROE. Dividends are based on free cash flow.

Impact of supplier Asahi Kasei in Japan and full support for TSMC?

We will certainly help wherever we can in Taiwan.

Earthquake‑risk assessment

TSMC production lines are the world’s most robust. We have internal risk management and close cooperation with local governments. No need to worry—TSMC always recovers the fastest after natural disasters.

Will TSMC raise prices significantly to cover tariffs and overseas‑fab costs?

What we think internally we cannot say aloud.

Traffic issues at the Kumamoto fab?

We keep working with the government to improve transportation; each site location has been fully discussed with local residents and authorities.

TSMC’s outlook for the next 5–10 years?

Extremely positive.

🙂

☑️ #68 May 31, 2025

SAIMEMORY: SoftBank, Intel work on AI memory chips that use half as much power

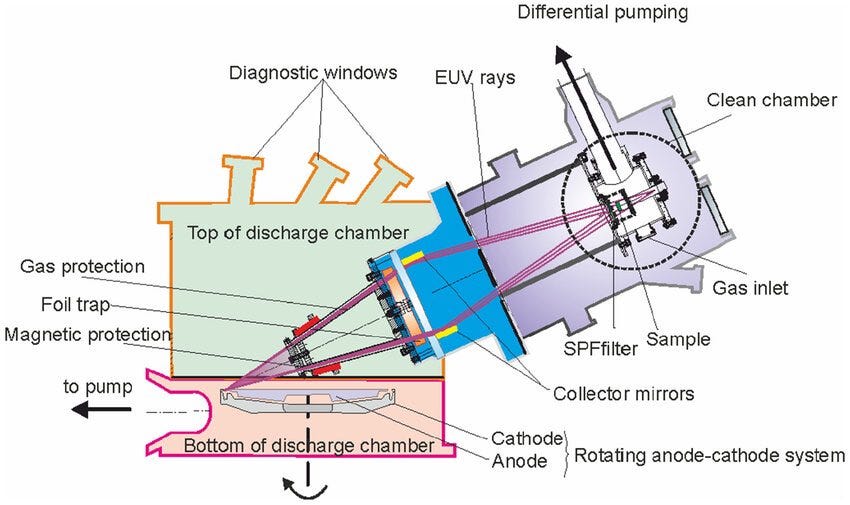

asia.nikkei.com: [Excerpt] TOKYO -- SoftBank and Intel are developing a type of memory for artificial intelligence expected to consume much less electricity than current chips, helping to build efficient AI infrastructure in Japan.

The companies plan to develop a structure for stacked DRAM chips that uses a different wiring structure than current advanced high-bandwidth memory, slashing power consumption by roughly half.

+ Related content:

newsonjapan.com: [Excerpt] SoftBank Startup Aims to Revolutionize AI Chips.

The Japanese government, which has enacted legislation allocating more than 10 trillion yen to support next-generation semiconductor and AI development, is expected to back SAIMEMORY’s production efforts as part of this broader policy push. This suggests that SAIMEMORY, like Rapidus before it, could become a future recipient of state support.

In an interview with TV Tokyo, SoftBank’s head of semiconductor development expressed confidence in the project, citing strong demand for low-power, low-cost memory solutions. When asked about potential collaboration with Rapidus, the spokesperson said it would depend on future technical developments but expressed optimism that the partnership could work.

University of Tokyo are also participating in the project.

🙂

☑️ #67 May 27, 2025

New consortium wants to build AI Gigafactory

@handelsblatt.com: [Translated] [Excerpt] They are the factories of the future: the EU is supporting the construction of data centers for artificial intelligence. German corporations are negotiating a consortium for such an AI Gigafactory.

According to Handelsblatt information, SAP, Deutsche Telekom, Ionos, the Schwarz Group and Siemens are negotiating a joint application to the European Union (EU) for such a gigantic data center for AI applications. There are intensive discussions, five managers from the industry reported.

At the conference "Technology Experience Convention Heilbronn" (TECH), an initiative founded by the Handelsblatt Media Group in cooperation with Schwarz Digits, SAP board member Thomas Saueressig said about the AI project:

"It is only possible in partnership, that is quite clear. Which model is the right one is currently being discussed."

Ionos CEO Achim Weiß said the initiative was an "important step for more digital sovereignty". Therefore, they will participate in a corresponding tender. And Rolf Schumann, Chief Digital Officer of the Schwarz Group, emphasized in a panel discussion: "We hope that we will bring everyone together, manage the matter and get it through together."

+ Related content:

tech-europe.org: TECH 2025 by Handelsblatt

🙂

☑️ #66 May 15, 2025

UAE - US AI Cluster

g42.ai : [Excerpt] G42 to lead a consortium with US partners to build 5GW UAE-US AI Campus.

Built by G42 in partnership with U.S. technology leaders, the UAE-US AI Campus will deliver sovereign, AI-grade compute at scale, supporting regional innovation and serving nearly half the world’s population.

+ Related content:

wam.ae: [Excerpt] UAE, US Presidents attend unveiling of Phase 1 of new 5GW AI campus in Abu Dhabi.

The UAE-US AI campus will include 5GW of capacity for AI data centres in Abu Dhabi, providing a regional platform from which US hyperscalers will be able to offer latency-friendly services to nearly half of the global population.

Once completed, the facility will leverage nuclear, solar, and gas power to minimise carbon emissions and will also house a science park driving advancements in AI innovation.

The campus will be built by G42 and operated in partnership with several US companies. The endeavour builds on a new framework by the two countries’ governments – the US-UAE AI Acceleration Partnership – to deepen cooperation and collaboration on AI and advanced technologies.

cnbc.com (update; 5/16/25): Nvidia, Cisco, Oracle and OpenAI are backing the UAE Stargate data center project.

U.S. tech giants Nvidia, Cisco, Oracle and OpenAI are supporting the “UAE Stargate” artificial intelligence data center announced this week, multiple people familiar with the deal confirmed Friday

The new AI campus, the largest outside the US, will be home to US hyperscalers and large enterprises that can leverage the capacity for regional ‘compute resources’ with the ability to serve the Global South.

🙂

☑️ #65 May 13, 2025

Japan: The 2-Nanometer Challenge

japan-forward.com: [Excerpt]: Rapidus Begins 2-nm Chip Pilot Production, Vital for Japan's Tech Future.

Mass Production Challenges

The first prototypes are expected between mid- and late-July. Rapidus will begin by producing standard chips using IBM's technology. Customer-specific prototypes will follow, with the goal of delivering them within fiscal 2025.

To improve yield — the ratio of usable chips — Rapidus is using a method called "batch-type" or "single-wafer processing," in which wafers are handled one at a time. While not ideal for mass production, this approach allows real-time feedback to the design team. Defects can be quickly addressed, enabling faster improvement of the yield rate.

+ Related content:

japan-forward.com (12/23/24): Rapidus: From Hokkaido to the World.

In a push to promote the domestic production of microchips, the Japanese government has funded the Rapidus project with over ¥900 billion JPY ($5.75 billion USD) so far. Private companies like Toyota and Sony have also invested in the startup. The aim is for Japan to compete with global leaders like Taiwan and South Korea.

Koike is confident that Rapidus can pull this off. "The 2-nm technology will change our lives," he adds. He points to Hokkaido's agricultural sectors where farms are struggling to find workers and farmers are getting old with little hope of finding young people who want to take over their business. Koike hopes that the AI chip will help overcome problems faced by aging societies. With AI, fully automated farms could raise livestock or grow food in the future.

Fundamental to this is the tiny AI chips that Rapidus wants to mass produce. Only the 2-nm chip can provide the speed needed to process the huge amount of data needed to run complex operations like a robotic farm or self-driving cars and trucks.

Update (5/19/25): More than half of Japan's seven new semiconductor plants (facilities completed since fiscal year 2023) remain inactive, Nikkei reported citing industry data.

🙂

☑️ #64 May 12, 2025

147 zettabytes annually

@probnstat: As of 2025, the world generates approximately 402.74 million terabytes of data every single day. This staggering amount equates to about 147 zettabytes annually, underscoring the immense scale at which information is being created, captured, and consumed globally.

To put this into perspective, this daily data generation is equivalent to streaming over 100 million high-definition movies simultaneously. The surge is largely driven by the proliferation of digital platforms, the Internet of Things (IoT), and the increasing digitization of services across sectors.

This exponential growth in data not only reflects our increasing reliance on digital technologies but also presents challenges and opportunities in data management, storage, and analysis.

https://explodingtopics.com/blog/data-generated-per-day

+ Related content:

explodingtopics.com (4/24/25): [Excerpt] Amount of Data Created Daily (2025)

Video is responsible for over half (53.72%) of all global data traffic.

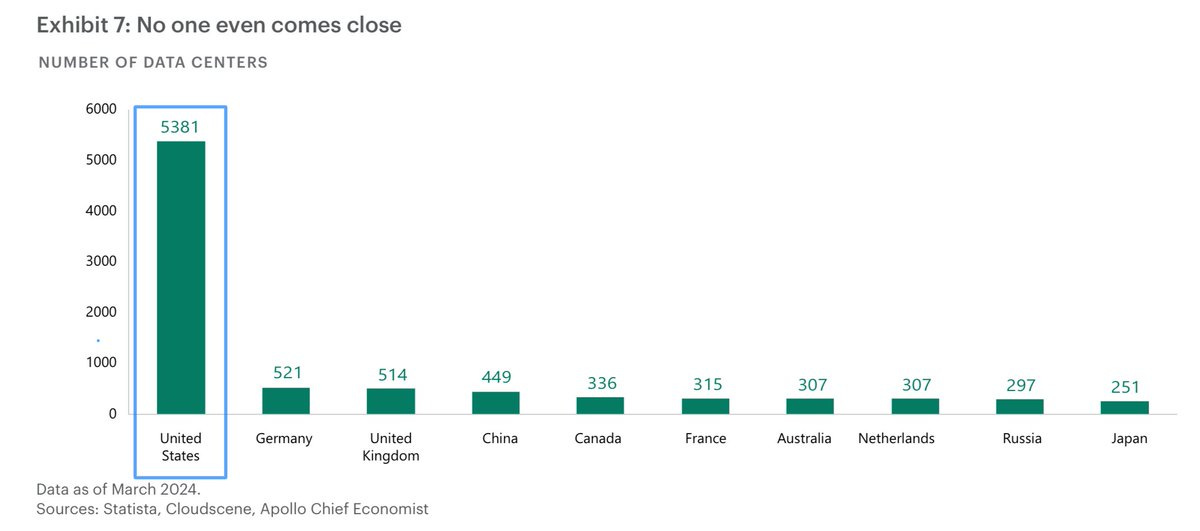

The US has over 10x more data centers (5,426) than any other country.

and Canada 337 data centers make up the rest of the top five.

bit (binary digit) > kilobyte (1,024 bytes) > megabyte (1,024 KB) > gigabyte (1,024 MB) > terabyte (1,024 GB) > petabyte (1,024 TB) > exabyte (1,024 PB) > zettabyte (1,024 EB) ≈ 1 sextillion bytes

🙂

☑️ #63 Apr 30, 2025

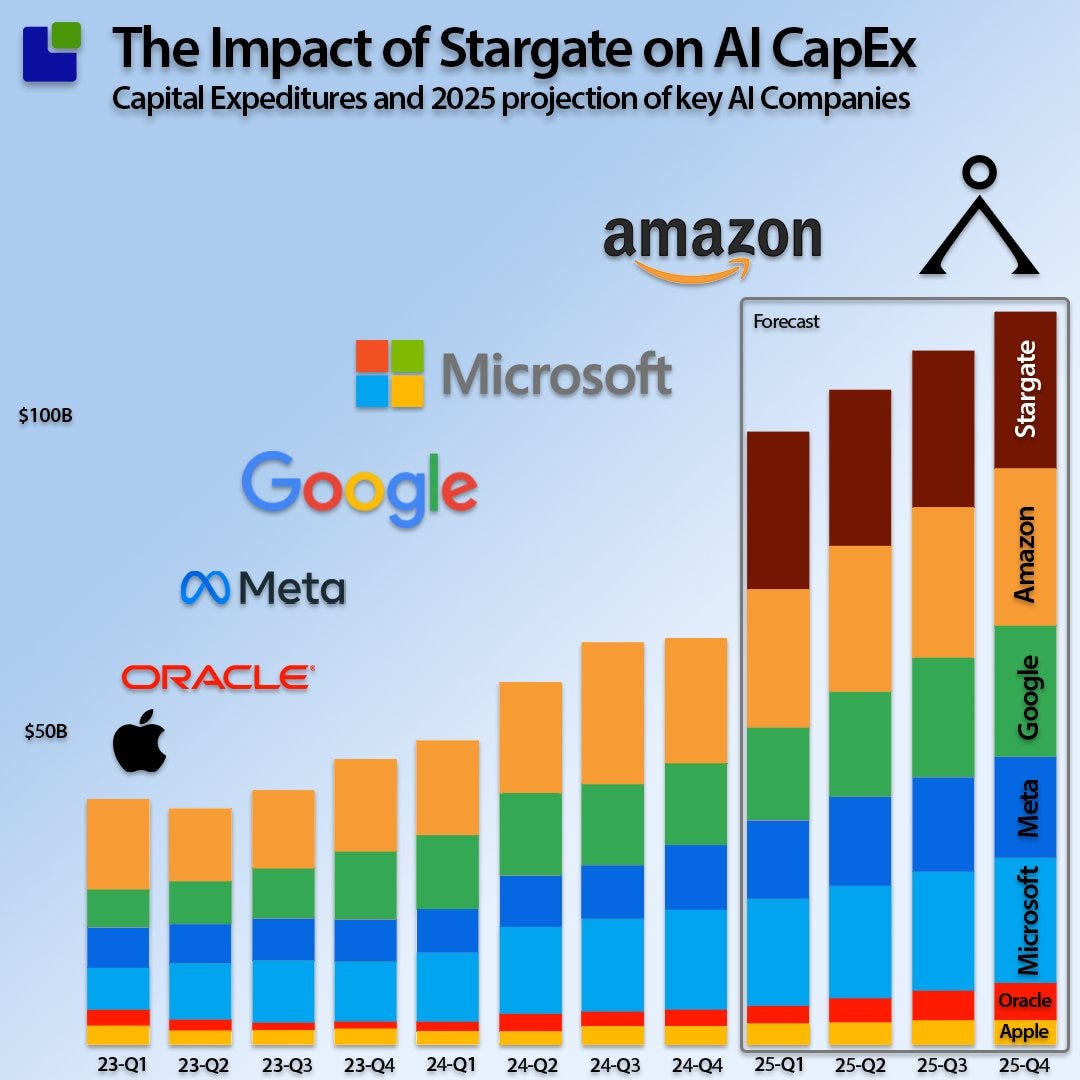

Data Centers drive record surge in tech investment

@bespokeinvest: Data center investment added a full percentage point to GDP in Q1; a record.

🙂

☑️ #62 Apr 29, 2025

Direct Connect 2025 Keynote

@Intel: Direct Connect 2025 will bring together executive leaders, customers, industry technologists, and ecosystem partners for an exclusive event focused on the future of technology and innovation on April 29th, 2025. Join us via livestream for the morning keynotes, starting with an opening address from our CEO, Lip-Bu Tan. This will be followed by a joint presentation from Naga Chandrasekaran, EVP, CTO, GM, and Ann Kelleher, EVP, Foundry Technology & Manufacturing. Kevin O’Buckley, SVP, GM, Foundry Services, will conclude the morning sessions with a summary of our progress over the past year. Direct Connect 2025 promises to be a pivotal gathering, showcasing how our solutions address complex challenges, drive innovation, and optimize operational efficiency. Don't miss this opportunity to gain valuable insights and be part of the conversation shaping the future.

🙂

☑️ #61 Apr 28, 2025

Huawei hopes the newest in the series of its Ascend Al processors, Ascend 910D, will be more powerful than Nvidia's H100

wsj.com: [Excerpt] China’s Huawei Develops New AI Chip, Seeking to Match Nvidia. Superpower rivalry over semiconductors heats up despite Washington’s attempts to block Beijing.

This year, Huawei is poised to ship more than 800,000 Ascend 910B and 910C chips to customers including state-owned telecommunications carriers and private AI developers such as TikTok parent ByteDance, people familiar with the matter said. Some buyers have already been in talks with Huawei to increase orders of the 910C after the Trump administration restricted the exports of Nvidia’s H20s, the people said.

Despite manufacturing bottlenecks, Huawei and several Chinese chip firms have already been able to deliver some products comparable to Nvidia chips, albeit with a lag of a few years. Chip makers have been turning to technologies that can pack several chips together to create more powerful processors, as it gets harder and more expensive to make the circuitry inside chips smaller.

Huawei hopes the newest in the series of its Ascend Al processors, Ascend 910D, will be more powerful than Nvidia's H100.

🙂

☑️ #60 Apr 24, 2025

How to power the explosive growth of artificial intelligence with reliable, scalable American energy

news.okstate.edu: [Excerpt] National leaders convene to address energy imperative behind America’s AI revolution.

In a landmark event, leaders from technology, energy and academia convened Thursday at Oklahoma State University’s Hamm Institute for American Energy for the Powering AI: Global Leadership Summit.

The one-day, high-level summit focused on solving one of the most pressing challenges of our time: how to power the explosive growth of artificial intelligence with reliable, scalable American energy.

Hosted by the Hamm Institute, the summit marks the first time these three critical industries have united to build a collaborative strategy for the AI age. In a rapidly evolving technological landscape, attendees focused on the infrastructure, power systems and policy innovation needed to support the U.S. as a global leader in artificial intelligence and big data.

“AI is pushing the boundaries of what’s possible—but without the infrastructure to scale it, we’re only scratching the surface,”

By building capacity now, we position the United States to lead in an era where computational intelligence becomes a defining element of national strength and societal wellbeing.”

Anthropic co-founder Jack Clark

"There's been no significant change" in the market. Kevin Miller, Amazon's Vice President of Global Data CentersThe company is experiencing "tremendous growth" in this sector. Josh Parker, Nvidia's Senior Director of Corporate Sustainability

🙂

☑️ #59 Apr 23, 2025

Moore's Law isn't dead

@wmhuo168 [Thread] BREAKING: China made 5nm chips without EUV.

Let that sink in.

No ASML.

No Nikon.

Just brute-force DUV, clever engineering, and sheer industrial will.

Moore's Law didn't die.

It moved to Shanghai.

Everyone said:

"No EUV, no 5nm."

But SMIC quietly shipped Kirin 9000S and 5G chips at 7nm, then improved yield and density with SAQP.

Now it's at 5nm-class using DUV only. 1/12

How?

By stacking multiple litho + etch steps:

Self-Aligned Quadruple Patterning (SAQP).

It's dirty, slow, expensive—but it works.

Moore's Law by force, not finesse. 2/12

This wasn't supposed to happen.

Washington said:

"Cut off ASML, and China's fabs will stagnate."

Instead, China pulled off the most complex DUV node ever fabricated.

With no help. 3/12

TSMC needs EUV for 5nm.

Samsung needs EUV for 5nm.

SMIC?

Just more DUV passes, tighter CD control, and god-tier etch precision from AMEC. 4/12

Yes, AMEC, not Lam.

China's etching tools are world-class.

And NAURA's cleaning gear now rivals TEL's.

These aren't knockoffs.

These are frontline fabs. 5/12

At this point, every SMIC chip that ships is a middle finger to the embargo.

They were supposed to collapse.

Instead they leveled up. 6/12

Let's talk performance.

DUV-based 5nm isn't bleeding-edge, but it's good enough for 90% of Al workloads, 5G, and high-end consumer silicon.

China doesn't need to match Apple M3.

Just outproduce Qualcomm. 7/12

And it's working.

Kirin 9000S, made by SMIC, powers Huawei's Mate 60.

That phone beat the iPhone 15 to satellite calls.

Under sanctions.

Without EUV. 8/12

So what's next?

Rumors swirl of a 3nm-class DUV node using SAOP (Self-Aligned Octuple Patterning).

Yes, it's insane.

But insanity is the new strategy. 9/12

The West still clings to its EUV monopoly.

But in the trenches, China is redefining Moore's Law with sandpaper and willpower.

Not elegance, just execution. 10/12

Next up:

Silicon Secrets Ep. 4 - "The Dragon's Die: How Chiplets Broke the Western Lock"

> Monolithic is dead.

China embraced chiplets before Intel did.

And it's scaling faster than you think. 11/12

Moore's Law isn't dead.

It defected.

And in a DUV-lit fab in Shanghai, it's alive and well.

You just weren't meant to see it. 12/12

🙂

☑️ #58 Apr 22, 2025

TSMC reports major losses in Arizona following strong performance in China

@BarrettYouTube: TSMC has racked up over US 1.2 billion in losses at its Arizona fabs over the past four years, even as its Nanjing plant in China turned a profit last year. Meanwhile, the company also lost billions in Japan and Europe.

Despite the mounting losses, TSMC just pledged another $100B investment in the U.S., in what’s being called the largest single foreign investment in American history. Trump even admitted he threatened 100% tariffs if TSMC didn’t build in the U.S.

Critics in Taiwan are calling it a repeat of how the U.S. crushed Japan’s chip industry in the ’80s, accusing local leaders of blindly handing over Taiwan’s tech advantage without negotiating returns.

Beijing weighed in too, with the Taiwan Affairs Office saying the DPP is using TSMC as a tool in its “rely on America for independence” play—and calling out Taiwan’s leadership for “selling out” the island’s semiconductor crown jewel.

+ Related content:

dimsumdaily.hk [Excerpt] According to the company’s latest annual shareholder report, TSMC’s plant in Arizona incurred a loss of 14.3 billion New Taiwan dollars (approximately 440 million US dollars) last year, marking the highest annual loss since the company began its investments in US manufacturing. Over the past four years, TSMC has accumulated losses exceeding 39.4 billion New Taiwan dollars at this site.

The company’s other overseas ventures also faced difficulties. Its advanced chip manufacturing facility in Japan recorded a loss of 4.38 billion New Taiwan dollars in 2024, while a joint venture in Germany reported a loss of 556 million New Taiwan dollars.

In stark contrast, TSMC’s operations on the Chinese mainland have performed well, with the Nanjing plant alone generating a profit of 25.95 billion New Taiwan dollars in 2024. Altogether, profits from mainland operations have surpassed 80 billion New Taiwan dollars over the past four years.

pr.tsmc.com/english/news/3228 (update; 4/23/25) > TSMC 2025 (North America Technology Symposium in Santa Clara, California)

TSMC Unveils Next-Generation A14 Process at North America Technology Symposium. Showcasing TSMC’s latest offerings for high performance computing, smartphone, automotive, and IoT applications Taiwan Semiconductor Manufacturing.

Company Limited (TSMC) unveiled on Wednesday its "next cutting-edge logic process technology, A14," at the North America Technology Symposium.

"Representing a significant advancement from TSMC's industry-leading N2 process, A14 is designed to drive Al transformation forward by delivering faster computing and greater power efficiency. It is also expected to enhance smartphones by improving their on-board Al capabilities, making them even smarter," TSMC detailed.

🙂

☑️ #57 Apr 17, 2025

World’s fastest memory writes 25 billion bits per sec, 10,000× faster than current tech

fudan.edu.cn: [Excerpt] Researchers develop flash memory device.

Researchers from Shanghai-based Fudan University have developed a picosecond-level flash memory device with an unprecedented program speed of 400 picoseconds, equivalent to operating 25 billion times per second, shattering the existing speed limits in information storage.

It became the fastest semiconductor charge storage device currently known to humanity, and scientists said such a breakthrough will have important application values to assist the ultra-fast operation of large artificial intelligence models.

+ Related content:

interestingengineering.com: [Excerpts] PoX could be the key to unlocking performance bottlenecks caused by storage limitations in AI hardware.

Industrial and strategic implications.

Flash memory remains a cornerstone of global semiconductor strategy thanks to its cost and scalability. Fudan’s advance, reviewers say, offers a “completely original mechanism” that may disrupt that landscape.

If mass‑produced, PoX‑style memory could eliminate separate high‑speed SRAM caches in AI chips, slashing area and energy. It can enable instant‑on, low‑power laptops and phones, and support database engines that hold entire working sets in persistent RAM.

🙂

☑️ #56 Apr 10, 2025

Crusoe Cloud AI origins

forbes.com: [Excerpt] Meet The Tiny Startup Building Stargate, OpenAI’s $500 Billion Data Center Moonshot. Crusoe Energy got its start harnessing oilfield flare gas to mine bitcoin. So how did cofounders Chase Lochmiller and Cully Cavness end up building the first phase of the world’s biggest AI datacenter – $500 billion Project Stargate – for OpenAI and Oracle?

That set up Crusoe for its leap to AI. In 2023, Crusoe borrowed $200 million to purchase coveted Nvidia H100 GPUs to expand its nascent cloud computing services and hired talent from the likes of datacenter giants 365 Main and Digital Realty. They topped off the year by partnering with Iceland’s AtNorth data center developer to set up their Crusoe Cloud services in the frigid country (making it easier to cool off GPUs). "Crusoe is building datacenters larger than Digital Realty ever did," marvels former CEO Bill Stein, now a Crusoe board member. Key to their longterm success, he says, will be "signing 15 year leases where counterparty risk is minimal," like with Stargate.

Crusoe’s shot at the big time came in the first quarter of 2024. Lochmiller had learned that some tech giants, believed to be OpenAI and Microsoft (he declines to say who) were shopping for contractors to build an AI-specialized, hyperscale datacenter, and do it fast. Other developers balked at the ludicrous timetable of less than two years. Land acquisition, permits, supply chain lead times, all looked impossible.

+ Related content:

crusoe.ai (3/18/25): [Excerpt] Crusoe Expands AI Data Center Campus In Abilene To 1.2 Gigawatts.

SAN FRANCISCO, CA / ABILENE, TX / March 18, 2025 / - Today Crusoe, the industry’s first vertically integrated AI infrastructure provider, announced construction has begun on the next phase of its AI data center at the Lancium Clean Campus in Abilene, Texas. The second phase of construction, expected to be completed in mid-2026, includes six additional buildings, bringing the total facility to eight buildings, approximately 4 million square feet, and a total power capacity of 1.2 gigawatts (GW). With this announcement, Crusoe's total data center footprint will reach more than 1.6 GW under operations and construction with an additional pipeline exceeding 10 GW in development.

Crusoe began constructing the AI data center in Abilene in June 2024. The initial phase, comprising two buildings at 980,000 square feet and 200+ megawatts, is expected to be energized in the first half of 2025. Designed and built by Crusoe at the Lancium Clean Campus, the project incorporates innovative data center design to support powerful AI workloads. Each building is designed to operate up to 50,000 NVIDIA GB200 NVL72s on a single integrated network fabric, advancing the frontier of data center design and scale for AI training and inference workloads. The construction site has approximately 2,000 people working on the project daily and is expected to reach nearly 5,000 as a result of the expansion. The Development Corporation of Abilene (DCOA) has previously estimated that the direct and indirect economic impact of the initial phase of the project is approximately $1 billion over 20 years. This expansion has the potential to scale that impact by many magnitudes.

🙂

☑️ #55 Apr 6, 2025

TSMC's potential historic deal with Intel Explained

AI Supremacy: Taiwan's biggest firm's investment in U.S. semiconductor industry continues to skyrocket. ✈️ Semiconductor giants Intel and TSMC are reportedly teaming up. 🚀

🙂